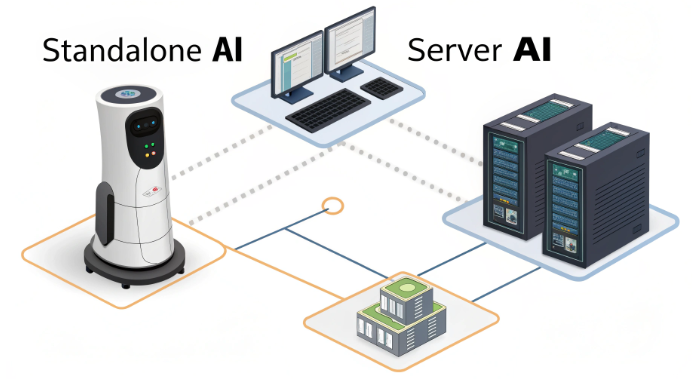

The Differences Between Standalone AI and Server AI

Understanding the architectural differences between independent AI and server AI systems has become crucial for tech professionals choosing a deployment model. In Hong Kong, a major server hosting hub, we’re seeing growing demand for both. This deep dive covers the technical differences, performance metrics, and strategic considerations behind each architecture.

Technical Architecture: Breaking Down the Core Differences

The fundamental architectural distinction between independent and server AI lies in their computational models and resource distribution patterns. Independent AI systems, often referred to as edge AI or standalone AI, operate within confined local environments, while server-based AI leverages distributed computing resources across data centers.

- Independent AI Architecture:

- Self-contained processing units

- Limited but dedicated computational resources

- Offline operation capability

- Direct hardware integration

- Server AI Architecture:

- Distributed computing framework

- Scalable resource allocation

- Network-dependent operations

- Virtual resource management

Performance Metrics and Resource Utilization

When evaluating AI deployment options, performance metrics serve as critical decision factors. Our analysis from Hong Kong’s hosting infrastructure reveals distinct patterns in resource utilization and operational efficiency.

- Computational Efficiency:

- Independent AI:

– 15-20 ms local processing latency

– Limited by hardware specifications

– Consistent performance regardless of network conditions

- Server AI:

– Variable latency (30-100 ms depending on network)

– Scalable computing power

– Network dependency impacts performance

- Resource Allocation:

- Independent AI:

– Fixed resource boundaries

– Predictable performance ceiling

– Best suited to task-specific optimization

- Server AI:

– Dynamic resource scaling

– Flexible performance limits

– Suitable for varied workload patterns
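To make the latency figures above concrete, the following minimal sketch checks each deployment model against a response-time budget. The two ranges are the ones quoted in the list (15-20 ms local, 30-100 ms over the network); the `LatencyRange` class, the `classify` function, and the budget values are hypothetical names and numbers chosen for illustration only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LatencyRange:
    """Best-case and worst-case latency in milliseconds."""
    low_ms: float
    high_ms: float


# Ranges quoted in the list above.
INDEPENDENT = LatencyRange(low_ms=15, high_ms=20)
SERVER = LatencyRange(low_ms=30, high_ms=100)


def classify(latency: LatencyRange, budget_ms: float) -> str:
    """Report how reliably a latency range fits within a budget.

    "always"    : even the worst case fits
    "sometimes" : only the better part of the range fits
    "never"     : even the best case is too slow
    """
    if latency.high_ms <= budget_ms:
        return "always"
    if latency.low_ms <= budget_ms:
        return "sometimes"
    return "never"
```

With an assumed 25 ms budget, `classify(INDEPENDENT, 25)` reports "always" while `classify(SERVER, 25)` reports "never". With a 50 ms budget the server result becomes "sometimes", which is the network-dependent variability the list describes.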

Deployment Scenarios and Use Cases

The choice between independent and server AI deployments often depends on specific use case requirements. Hong Kong’s position as a technological hub offers unique insights into various deployment scenarios.

- Independent AI Optimal Scenarios:

- Real-time processing requirements

- Privacy-sensitive applications

- Edge computing implementations

- Offline operation requirements

- Server AI Preferred Contexts:

- Large-scale data processing

- Multi-tenant applications

- Resource-intensive operations

- Collaborative AI systems
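The scenarios above can be read as a simple checklist. The sketch below is one possible way to encode it, not a definitive selection method: the `Workload` fields mirror the eight bullet points, while the equal weighting of factors and the treatment of offline operation as a hard constraint (since server AI is network-dependent) are assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    # Factors the list above associates with independent AI.
    real_time: bool = False
    privacy_sensitive: bool = False
    edge_deployment: bool = False
    must_work_offline: bool = False
    # Factors the list above associates with server AI.
    large_scale_data: bool = False
    multi_tenant: bool = False
    resource_intensive: bool = False
    collaborative: bool = False


def suggest_deployment(w: Workload) -> str:
    """Return "independent", "server", or "undecided" for a workload."""
    # Assumption: offline operation rules out a network-dependent model.
    if w.must_work_offline:
        return "independent"

    # Assumption: every remaining factor carries equal weight.
    independent_score = sum([w.real_time, w.privacy_sensitive, w.edge_deployment])
    server_score = sum([
        w.large_scale_data,
        w.multi_tenant,
        w.resource_intensive,
        w.collaborative,
    ])

    if independent_score > server_score:
        return "independent"
    if server_score > independent_score:
        return "server"
    return "undecided"
```

A tie returns "undecided" rather than forcing a choice; in practice the factors would rarely be equally important, so the weights are the first thing to adjust.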

Infrastructure Requirements and Scaling Considerations

Infrastructure planning for AI deployment requires careful consideration of scaling patterns and resource management. Hong Kong’s advanced hosting facilities demonstrate the importance of robust infrastructure design.

- Independent AI Infrastructure:

- Hardware-dependent scaling

- Direct cooling requirements

- Physical security considerations

- Limited by local power constraints

- Server AI Infrastructure:

- Virtual resource allocation

- Distributed cooling systems

- Network redundancy requirements

- Power distribution flexibility
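The contrast between hardware-dependent scaling and virtual resource allocation can be illustrated with a small capacity model. This is a rough sketch under simplifying assumptions: capacity is taken to grow linearly with the number of replicas, and the function names, parameters, and example figures are all hypothetical.

```python
import math
from typing import Optional


def replicas_needed(requests_per_second: float, capacity_per_replica: float) -> int:
    """Number of replicas required to serve the load (at least one)."""
    if capacity_per_replica <= 0:
        raise ValueError("capacity_per_replica must be positive")
    return max(1, math.ceil(requests_per_second / capacity_per_replica))


def served_load(
    requests_per_second: float,
    capacity_per_replica: float,
    max_replicas: Optional[int] = None,
) -> float:
    """Load actually served.

    max_replicas=None models elastic, virtually allocated capacity;
    a fixed integer models a hardware-bound ceiling.
    """
    needed = replicas_needed(requests_per_second, capacity_per_replica)
    replicas = needed if max_replicas is None else min(needed, max_replicas)
    return min(requests_per_second, replicas * capacity_per_replica)
```

For an assumed load of 250 requests per second and 100 requests per second per replica, a single fixed device (`max_replicas=1`) serves 100, while the elastic case scales to three replicas and serves all 250.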

Cost Analysis and ROI Considerations

Understanding the financial implications of AI deployment choices is crucial for technical decision-makers. Our analysis provides a comprehensive cost breakdown based on Hong Kong hosting market data.

- Capital Expenditure:

- Independent AI:

– Higher initial hardware costs

– Predictable maintenance expenses

– Limited upgrade paths

- Server AI:

– Lower upfront investment

– Flexible scaling costs

– Subscription-based pricing models

- Operational Expenses:

- Independent AI:

– Fixed power consumption

– Regular maintenance costs

– Limited redundancy expenses

- Server AI:

– Variable usage costs

– Network bandwidth expenses

– Managed service fees
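The trade-off between a higher upfront cost and lower recurring costs can be expressed as a break-even calculation. The sketch below assumes each model reduces to one upfront figure plus a flat monthly cost, which ignores usage spikes, depreciation, and upgrades. The function names are hypothetical and no real pricing data is implied.

```python
import math
from typing import Optional


def cumulative_cost(upfront: float, monthly: float, months: int) -> float:
    """Total spend after a given number of months."""
    return upfront + monthly * months


def break_even_month(
    independent_upfront: float,
    independent_monthly: float,
    server_upfront: float,
    server_monthly: float,
) -> Optional[int]:
    """First whole month at which independent AI costs no more than server AI.

    Returns 0 if independent AI is not more expensive upfront, and None if
    its higher upfront cost is never recovered.
    """
    upfront_gap = independent_upfront - server_upfront
    monthly_saving = server_monthly - independent_monthly

    if upfront_gap <= 0:
        return 0
    if monthly_saving <= 0:
        return None
    return math.ceil(upfront_gap / monthly_saving)
```

With purely illustrative figures of 50,000 upfront and 500 per month for independent AI against 2,000 upfront and 2,500 per month for server AI, the cumulative costs meet at month 24. If the server option were also cheaper per month, the function returns `None`, meaning the hardware investment never pays back on cost alone.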

Security and Compliance Considerations

In Hong Kong’s strictly regulated technology environment, security and compliance form crucial aspects of AI deployment decisions. Each model presents distinct security challenges and advantages.

- Independent AI Security Features:

- Air-gapped operation capability

- Physical access control

- Data localization guarantee

- Autonomous security protocols

- Server AI Security Measures:

- Distributed security layers

- Real-time threat monitoring

- Automated backup systems

- Compliance certification management

Future Trends and Evolution

The AI deployment landscape continues to evolve, with Hong Kong’s hosting infrastructure adapting to emerging technologies and methodologies.

- Emerging Technologies:

- Hybrid AI deployments

- Edge-cloud integration

- Quantum computing preparation

- 5G network optimization

- Market Trends:

- Increased edge computing adoption

- Growing demand for AI-ready hosting

- Rising importance of green computing

- Enhanced security protocols

Conclusion

The choice between independent AI and server AI deployments requires careful consideration of technical requirements, operational constraints, and business objectives. Hong Kong’s position as a leading hosting hub offers unique insights into both deployment models, emphasizing the importance of matching AI architecture with specific use cases and performance requirements. As technology continues to evolve, understanding these fundamental differences becomes increasingly crucial for successful AI implementation.