Linux: Identify EPYC Faulty Cores & Isolate CCDs

Hong Kong hosting and colocation servers rely heavily on AMD EPYC processors for their exceptional multi-core performance and reliability. However, even these powerhouses aren’t immune to core-level anomalies. A faulty Core Complex Die (CCD) can trigger unpredictable system behavior, from performance throttling to critical service crashes. In this guide, we’ll dive into Linux-based methodologies to identify EPYC faulty cores and execute precise CCD isolation commands—essential skills for maintaining peak server uptime in mission-critical environments.

Understanding EPYC Architecture: CCDs Explained

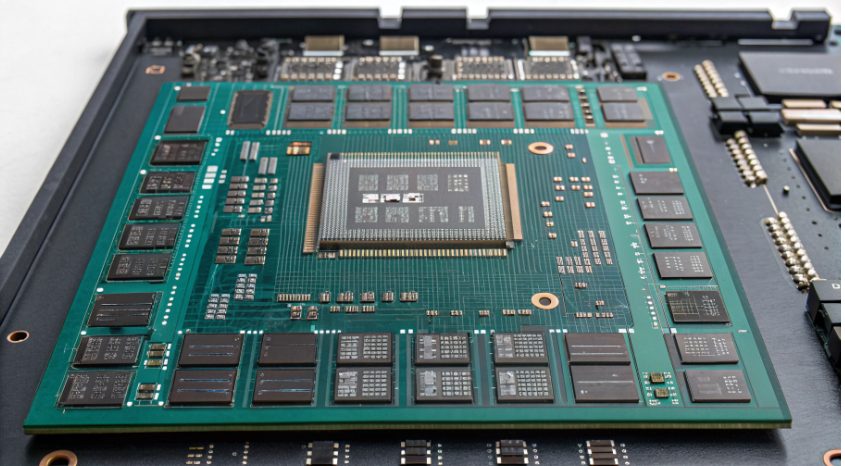

Before diving into troubleshooting, let’s demystify the EPYC processor’s architecture, focusing on the Core Complex Dies (CCDs) that are central to this discussion.

- What is a CCD? A Core Complex Die is the compute building block of EPYC processors. It holds a group of CPU cores (typically eight) and the L3 cache they share. Memory controllers and I/O sit on a separate I/O die (IOD) that links all CCDs together. EPYC 9004-series CPUs carry up to 12 CCDs, and some EPYC 9005-series models carry up to 16.

- Role in Server Performance: Each CCD contributes a group of cores with its own L3 cache, and the Linux scheduler spreads work across all of them. High-traffic Hong Kong hosting environments depend on this parallelism, and healthy CCDs keep resource utilization balanced and performance consistent.

- Root Causes of CCD Anomalies: Faulty cores often stem from manufacturing defects, thermal stress, voltage irregularities, or age-related degradation. In dense colocation setups, heat buildup exacerbates these issues.

- Impact on Hong Kong Servers: For latency-sensitive applications hosted in Hong Kong, even a single faulty core within a CCD can cause request timeouts, risk data corruption, and raise error rates in logs, all of which are costly for business-critical services.
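To see how this layout appears on a running system, you can group logical CPUs by the L3 cache they share, since each CCD (or each CCX, on parts with two per die) has its own L3. The sketch below assumes the standard sysfs cacheinfo layout under `/sys/devices/system/cpu`; `list_l3_domains` and the `CPU_SYSFS` override are hypothetical names introduced for illustration.

```shell
#!/usr/bin/env bash
# Sketch: print each distinct L3 cache domain as a kernel cpulist.
# Assumption: on most Zen 3/4/5 EPYC parts one L3 domain = one CCD; on Zen 2
# and Zen 4c parts a CCD holds two L3 domains (CCXs).
CPU_SYSFS="${CPU_SYSFS:-/sys/devices/system/cpu}"  # overridable for testing

list_l3_domains() {
  local d
  for d in "$CPU_SYSFS"/cpu[0-9]*/cache/index[0-9]*; do
    # Select the cache whose level is 3 instead of trusting index numbering.
    if [ "$(cat "$d/level" 2>/dev/null)" = 3 ]; then
      cat "$d/shared_cpu_list"
    fi
  done | sort -nu  # domains are disjoint, so their first CPU is a unique key
}
```

Sourcing the file and calling `list_l3_domains` prints one line per L3 domain. On a single-socket, 64-core Zen 4 part with SMT you would typically see eight lines, each pairing a core range with its SMT siblings (for example `0-7,64-71`), though CPU numbering depends on firmware.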

Linux Tools to Detect EPYC Faulty Cores

Linux offers a robust toolkit for low-level hardware diagnostics. Here’s how to leverage these tools to pinpoint problematic EPYC cores.

Hardware Monitoring with ipmitool

Intelligent Platform Management Interface (IPMI) provides direct access to hardware health metrics. For EPYC systems:

- Install ipmitool: `sudo apt install ipmitool` (Debian/Ubuntu) or `sudo dnf install ipmitool` (RHEL/CentOS; `yum` on older releases).

- Check CPU health logs: `sudo ipmitool sel list | grep -i "cpu\|processor"`. Look for processor events such as IERR, thermal trip, or machine check entries; the exact wording varies by BMC vendor.

- Monitor real-time sensor data: `sudo ipmitool sensor | grep -i "temp\|volt"` to identify thermal or power anomalies.
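Scanning the full sensor table by eye is error-prone, so a small filter can report only the temperature and voltage sensors that are out of range. This is a sketch that assumes the default pipe-separated `ipmitool sensor` layout (name, reading, unit, status, thresholds) and selects sensors by their unit column, since sensor names vary by board vendor; `flag_sensors` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Sketch: list temperature and voltage sensors whose status is not "ok".
# Assumes the default `ipmitool sensor` layout:
#   name | reading | unit | status | thresholds...
# Status codes such as nc (non-critical), cr (critical) and nr
# (non-recoverable) come from ipmitool; "na" (no reading) is skipped.
flag_sensors() {
  awk -F'|' '
    function trim(s) { gsub(/^[ \t]+|[ \t]+$/, "", s); return s }
    {
      unit = tolower(trim($3)); status = trim($4)
      if (unit ~ /degrees|volts/ && status != "ok" && status != "na")
        print trim($1) ": " status
    }'
}
```

Typical use is `sudo ipmitool sensor | flag_sensors`; empty output means every temperature and voltage reading is within its thresholds.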

System Log Analysis

Linux kernel logs are treasure troves for debugging hardware issues. Key commands:

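- Check dmesg for recent errors: `sudo dmesg | grep -i "mce\|hardware error"`. Look for Machine Check Exception (MCE) entries; on AMD systems these appear as `[Hardware Error]` lines that name the affected CPU and MCA bank.

- Review persistent logs: `sudo grep -i "hardware error\|machine check" /var/log/messages` (RHEL) or `/var/log/syslog` (Debian/Ubuntu), or `journalctl -k | grep -i "hardware error"` on systemd hosts, to find recurring fault patterns.

- Interpret MCE codes: `mcelog` does not decode errors on recent AMD processors. The kernel's `edac_mce_amd` module decodes them into the `[Hardware Error]` lines above. For a persistent, queryable record, install rasdaemon (`sudo apt install rasdaemon`, then `sudo systemctl enable --now rasdaemon`) and run `sudo ras-mc-ctl --summary` or `sudo ras-mc-ctl --errors`.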
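Errors that keep landing on the same few CPUs point at one die, so it helps to tally kernel hardware-error lines per logical CPU. The sketch below assumes the CPU number appears as `CPU 12` or `CPU:12`, as in common x86 MCE and `edac_mce_amd` output; formats differ across kernel versions, so treat the pattern as an assumption. It counts log lines, not distinct events, because one MCE usually spans several lines. `mce_cpu_counts` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Sketch: count "[Hardware Error]" kernel log lines per logical CPU.
# Assumes the CPU appears as "CPU 12" or "CPU:12" in the line.
# Output: "<cpu> <line count>", sorted by CPU number.
mce_cpu_counts() {
  grep -F '[Hardware Error]' |
    grep -oE 'CPU[ :][0-9]+' |
    tr -dc '0-9\n' |
    sort -n | uniq -c |
    awk '{ print $2, $1 }'
}
```

Feed it with `sudo dmesg | mce_cpu_counts` or `sudo journalctl -k -b | mce_cpu_counts`. CPUs that recur across reboots are the ones to map to a CCD.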

AMD-Specific Diagnostics with amd-smi

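`amd-smi` is AMD’s monitoring tool for its GPUs and, through AMD’s E-SMI library and HSMP kernel driver, EPYC CPUs. On EPYC it reports telemetry such as power, energy, and frequency rather than a per-CCD fault verdict, so use it alongside the MCE logs above:

- Install amd-smi from AMD’s ROCm packages or build it from the amdsmi repository on GitHub.

- Check which CPU options your build supports with `amd-smi --help` and `amd-smi metric --help`; subcommands and flags change between releases.

- Check per-core utilization: `mpstat -P ALL 5 1` (from the `sysstat` package) shows load per logical CPU, which you can group by CCD to spot abnormal load patterns.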
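Per-core load can also be read straight from `/proc/stat` without any AMD tooling. This sketch compares two snapshots and reports each logical CPU's busy percentage, treating idle plus iowait as idle time and summing the user through steal fields as total time (guest time is already included in user). `per_cpu_busy` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Sketch: per-logical-CPU busy percentage between two /proc/stat snapshots.
# busy = 100 * (1 - delta(idle + iowait) / delta(user..steal))
per_cpu_busy() {  # $1 = earlier snapshot file, $2 = later snapshot file
  awk '
    function total(   i, t) { t = 0; for (i = 2; i <= 9; i++) t += $i; return t }
    FNR == NR { if ($1 ~ /^cpu[0-9]/) { t0[$1] = total(); i0[$1] = $5 + $6 }; next }
    $1 ~ /^cpu[0-9]/ && ($1 in t0) {
      dt = total() - t0[$1]; di = ($5 + $6) - i0[$1]
      printf "%s %.1f\n", substr($1, 4), (dt > 0 ? 100 * (dt - di) / dt : 0)
    }' "$1" "$2"
}
```

For example: `cat /proc/stat > /tmp/stat.1; sleep 5; cat /proc/stat > /tmp/stat.2; per_cpu_busy /tmp/stat.1 /tmp/stat.2`. Grouping the output by the L3 domains of each CCD shows whether one die behaves differently from the rest.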

Isolating Faulty CCDs: Step-by-Step Commands

Once a problematic CCD is identified, isolating it prevents the kernel from scheduling tasks on its cores, mitigating risks without full server downtime.

Why Isolate Faulty CCDs?

Isolation quarantines defective hardware, preventing system instability while allowing remaining CCDs to operate normally. This is critical for Hong Kong colocation servers where downtime equals revenue loss.

Isolation Principles

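The Linux kernel can take individual logical CPUs offline through sysfs, a virtual filesystem exposing kernel and hardware attributes; the scheduler then stops placing tasks on them and interrupts are moved elsewhere. With SMT enabled, each physical core appears as two logical CPUs, so both siblings must go offline. Taking every logical CPU of a CCD offline effectively isolates the faulty die at runtime, although it does not physically disable it; firmware options that disable cores or CCDs (names vary by server vendor) are the permanent fix.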

Execution Steps

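- Map Cores to CCDs: Each CCD (or CCX, on parts with two per CCD) has its own L3 cache, so CPUs that share an L3 belong to the same die. Run `lscpu --extended=CPU,CORE,SOCKET,CACHE` (the last field of CACHE is the L3 ID) or read `/sys/devices/system/cpu/cpuN/cache/index3/shared_cpu_list`, and cross-check against AMD’s documentation for your specific EPYC model.

- Verify Core IDs: Cross-reference with `grep -E "processor|physical id|core id" /proc/cpuinfo` to list active logical CPUs and their physical core IDs. Logical CPUs with the same physical id and core id are SMT siblings and must be disabled together.

- Disable Cores: For each logical CPU in the faulty CCD (e.g., CPUs 8-15 plus their SMT siblings), run `echo 0 | sudo tee /sys/devices/system/cpu/cpuX/online` (replace X with the CPU number). cpu0 often has no `online` file and stays online.

- Confirm Isolation: `cat /sys/devices/system/cpu/offline` lists the disabled CPUs, and `lscpu | grep -i "line CPU"` shows the On-line and Off-line CPU lists.

- Persist Changes: Offlining does not survive a reboot. Re-apply it at boot with a systemd oneshot service, or with `/etc/rc.local` on systems that still run it.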
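The steps above can be sketched as a script that starts from one CPU named in the MCE logs, finds every logical CPU sharing its L3 cache (SMT siblings included), and takes them offline. This assumes one L3 domain equals one CCD, which holds for most Zen 3/4/5 EPYC parts but not for Zen 2 or Zen 4c, where a CCD has two L3 domains. It runs as a dry run unless `--apply` is given. The function names and the `CPU_SYSFS` override are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: offline every logical CPU that shares an L3 cache with a faulty CPU.
# Assumes one L3 domain == one CCD (see caveats above). Run it before
# offlining anything: offline CPUs lose their cache entries in sysfs.
CPU_SYSFS="${CPU_SYSFS:-/sys/devices/system/cpu}"  # overridable for testing

# Expand a kernel cpulist such as "8-15,72-79" into "8 9 ... 79".
expand_cpulist() {
  local part c out=""
  for part in ${1//,/ }; do
    if [[ $part == *-* ]]; then
      for ((c = ${part%-*}; c <= ${part#*-}; c++)); do out+="$c "; done
    else
      out+="$part "
    fi
  done
  echo "${out% }"
}

# Print the logical CPUs that share the L3 cache of CPU $1.
l3_cpus_of() {
  local d
  for d in "$CPU_SYSFS/cpu$1"/cache/index[0-9]*; do
    if [ "$(cat "$d/level" 2>/dev/null)" = 3 ]; then
      expand_cpulist "$(cat "$d/shared_cpu_list")"
      return 0
    fi
  done
  return 1
}

# Dry run by default; pass --apply (as root) to write to sysfs.
offline_l3_domain() {
  local mode=${2:-dry-run} cpus cpu f
  cpus=$(l3_cpus_of "$1") || { echo "no L3 info for cpu$1" >&2; return 1; }
  for cpu in $cpus; do
    f="$CPU_SYSFS/cpu$cpu/online"
    if [ ! -e "$f" ]; then
      echo "skip cpu$cpu: no online control (often the boot CPU)" >&2
    elif [ "$mode" = --apply ]; then
      echo 0 > "$f" && echo "offlined cpu$cpu"
    else
      echo "would offline cpu$cpu"
    fi
  done
}
```

After sourcing it (for example from a hypothetical `ccd-offline.sh`), `offline_l3_domain 12` prints the plan for the die containing CPU 12, and `sudo bash -c 'source ccd-offline.sh; offline_l3_domain 12 --apply'` carries it out. Defaulting to a dry run makes it harder to offline the wrong die by mistake.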

Critical Considerations

- Data Backup: Always back up critical data before modifying CPU configurations—unexpected behavior during isolation is rare but possible.

- Business Continuity: Schedule isolation during maintenance windows for Hong Kong hosting servers to minimize user impact.

- Post-Isolation Testing: Run stress tests with `stress-ng` or `sysbench` to validate system stability after isolation.
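A stress run is most useful when paired with a check for new hardware errors. This sketch loads all online CPUs with `stress-ng` and then compares the number of `[Hardware Error]` kernel log lines before and after. It assumes `stress-ng` is installed and that the script runs as root, since dmesg may be restricted otherwise. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: stress all online CPUs, then fail if the kernel logged new
# "[Hardware Error]" lines during the run. If the ring buffer wraps during
# a long run this count can miss errors, so check journalctl -k as well.
hw_error_lines() { dmesg 2>/dev/null | grep -cF '[Hardware Error]'; }

post_isolation_check() {  # $1 = duration, e.g. 10m (default)
  local duration=${1:-10m} before after
  before=$(hw_error_lines)
  # --cpu 0 lets stress-ng start one worker per available CPU;
  # --verify checks the results the workers compute.
  stress-ng --cpu 0 --verify --timeout "$duration" --metrics-brief || return 1
  after=$(hw_error_lines)
  if [ "$after" -gt "$before" ]; then
    echo "FAIL: $((after - before)) new hardware error line(s)"
    return 1
  fi
  echo "PASS: no new hardware errors"
}
```

Running `post_isolation_check 30m` during the maintenance window gives a simple pass/fail result to record alongside the isolation change.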

Optimizing EPYC Stability for Hong Kong Servers

Proactive measures reduce the likelihood of CCD failures and streamline troubleshooting.

- Automated Inspection Scripts: Deploy a root cron job that runs `ipmitool`, `ras-mc-ctl`, and `amd-smi` checks daily. Example: `0 3 * * * /path/to/epyc-health-check.sh >> /var/log/epyc-monitor.log 2>&1`.

- Load Balancing Tuning: Use `taskset` or `numactl` to pin workloads to specific healthy CCDs and spread heavy jobs across the rest, which avoids concentrating heat on a few dies.

- Redundancy Planning: For high-availability Hong Kong colocation setups, configure failover clusters so secondary nodes automatically take over if a primary node’s CPU fails.

- Firmware Updates: Keep CPU microcode current (the `amd64-microcode` package on Debian/Ubuntu, the `linux-firmware` packages on RHEL) and apply BIOS/AGESA updates from your server vendor. These updates fix processor errata and firmware bugs, not physically damaged silicon.
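The cron entry's `/path/to/epyc-health-check.sh` could look roughly like this sketch. Each probe runs only if its tool is installed, and it queries `ras-mc-ctl` rather than `mcelog`, which does not handle recent AMD processors. `amd-smi` is left out because its CPU options vary by release. Section names, output layout, and the `CPU_SYSFS` override are illustrative assumptions.

```shell
#!/usr/bin/env bash
# Sketch of a daily EPYC health check for a root cron job.
# Each probe runs only if its tool is present; layout is illustrative.
CPU_SYSFS="${CPU_SYSFS:-/sys/devices/system/cpu}"  # overridable for testing

section() { printf '\n== %s ==\n' "$1"; }

health_check() {
  printf 'epyc-health-check %s\n' "$(date -u +%Y-%m-%dT%H:%M:%SZ)"

  section "CPU online/offline"
  echo "online:  $(cat "$CPU_SYSFS/online" 2>/dev/null)"
  echo "offline: $(cat "$CPU_SYSFS/offline" 2>/dev/null)"

  section "Microcode"
  grep -m1 '^microcode' /proc/cpuinfo 2>/dev/null

  if command -v ipmitool >/dev/null 2>&1; then
    section "BMC processor events (last 20)"
    ipmitool sel list 2>&1 | grep -iE 'processor|cpu' | tail -n 20
  fi

  if command -v ras-mc-ctl >/dev/null 2>&1; then
    section "rasdaemon summary"
    ras-mc-ctl --summary 2>&1
  fi

  section "Kernel hardware errors (last 20)"
  dmesg 2>/dev/null | grep -F '[Hardware Error]' | tail -n 20
}

# Run only when executed directly, not when sourced.
if [[ ${BASH_SOURCE[0]} == "$0" ]]; then
  health_check
fi
```

A growing offline list or new `[Hardware Error]` lines between daily runs is the signal to revisit the diagnostics above.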

Conclusion

Maintaining EPYC-powered Hong Kong hosting and colocation servers demands mastery of Linux-based diagnostics and CCD isolation techniques. With ipmitool, kernel MCE logs, and rasdaemon, you can trace hardware errors to specific logical CPUs, map them to their CCD, and take that CCD’s CPUs offline through sysfs, keeping the system stable without a full outage. Proactive monitoring and regular maintenance remain your best defenses against hardware anomalies. Implement the workflows outlined here to keep your EPYC servers running at peak performance, even when individual components falter.

FAQ

- Will isolating a CCD reduce server performance? Yes, roughly in proportion to the cores and L3 cache removed; one 8-core CCD on a 96-core CPU is about 8% of compute. The alternative, unstable operation, is far costlier, and most workloads adapt to the remaining CCDs with minimal impact.

- Can a disabled CCD be re-enabled later? Yes. Run `echo 1 | sudo tee /sys/devices/system/cpu/cpuX/online` for each logical CPU in the CCD, but only after verifying the root cause of the fault is resolved.

- Are there alternative tools for EPYC core detection? Yes, including `sensors` (lm-sensors, which reads the kernel’s hwmon data such as `k10temp`), `perf` for performance tracing, and AMD’s E-SMI (EPYC System Management Interface) library with its `e_smi_tool` utility.

- Does this isolation method work for non-EPYC processors? The sysfs CPU hotplug mechanism is generic to Linux, but CCD-specific mapping and diagnostic tools vary by CPU vendor and generation.