Configuring CPUs & RAM for Multi-GPU Servers

In high-performance computing landscapes, multi-GPU servers serve as the backbone for computationally intensive tasks—from deep learning frameworks to complex scientific simulations. Yet, their potential remains untapped without deliberate coordination between CPUs, RAM, and GPU arrays. This guide dissects the technical synergies required to balance processing power, data throughput, and system stability, with a focus on environments like Hong Kong hosting setups that demand both performance and reliability.

Understanding the Triad: CPU, RAM, and GPU Interdependencies

Multi-GPU deployments thrive on a delicate equilibrium where CPUs manage task orchestration, RAM facilitates data movement, and GPUs execute parallel computations. Ignoring this trinity leads to bottlenecks: a powerful GPU array starved of data due to insufficient memory bandwidth, or a CPU unable to schedule tasks efficiently across multiple accelerators. In high-density Hong Kong colocation environments, these inefficiencies compound due to thermal constraints and hardware compatibility requirements.

CPU Selection: Beyond Core Counts

The first myth to dispel: more CPU cores always mean better performance. While parallel task scheduling demands core density, the relationship with GPU count is pivotal. A practical starting point: maintain a core-to-GPU ratio between 2:1 and 4:1. For four GPUs, that works out to 8 to 16 cores, enough for task distribution without overloading the scheduler.
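The ratio band is plain multiplication, so a proposed build is easy to check against it. Below is a minimal Python sketch. It assumes the band applies to physical cores rather than SMT threads. `core_range_for_gpus` and `ratio_verdict` are hypothetical helper names, not part of any tool.

```python
def core_range_for_gpus(num_gpus: int, min_ratio: int = 2, max_ratio: int = 4) -> tuple[int, int]:
    """Return (min_cores, max_cores) for a 2:1 to 4:1 core-to-GPU band (physical cores assumed)."""
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    return num_gpus * min_ratio, num_gpus * max_ratio


def ratio_verdict(cores: int, num_gpus: int) -> str:
    """Classify a core count against the band for a given GPU count."""
    low, high = core_range_for_gpus(num_gpus)
    if cores < low:
        return "under-provisioned"
    if cores > high:
        return "over-provisioned"
    return "within band"


for gpus in (2, 4, 8):
    low, high = core_range_for_gpus(gpus)
    print(f"{gpus} GPUs -> {low}-{high} cores")

print("24 cores / 4 GPUs:", ratio_verdict(24, 4))
print("24 cores / 8 GPUs:", ratio_verdict(24, 8))
```

Take the 24-core deep learning build described later in this guide. It sits within the band for eight GPUs but above it for four, so treat the ratio as a starting point for benchmarking rather than a hard limit.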

- Clock Speed vs. Core Density: Low-latency tasks like real-time inference favor higher base frequencies (2.0GHz+), ensuring rapid PCIe data handling. In contrast, distributed training frameworks benefit from more cores with slightly lower frequencies, balancing inter-GPU communication overhead.

- Caching Strategies: L3 cache sizes exceeding 32MB reduce repeated data fetching from RAM, critical for workflows where CPUs preprocess data before GPU ingestion—think image normalization in deep learning pipelines.

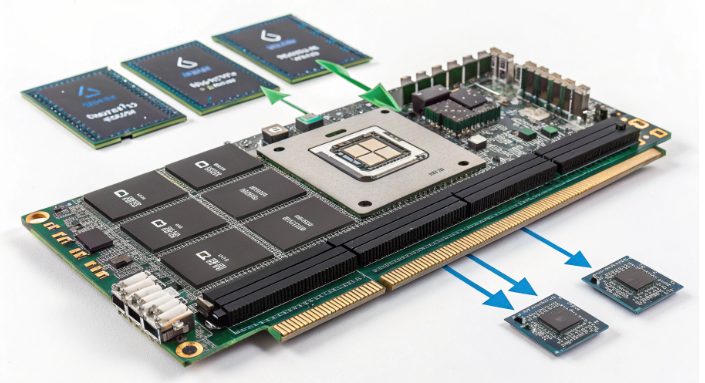

- Expansion Ready: Prioritize CPUs with PCIe 4.0 or newer support and enough lanes to give each GPU a dedicated x16 link. Four x16 GPUs alone consume 64 lanes, and NICs and NVMe storage need more. For configurations with four or more GPUs, total PCIe lanes should therefore exceed 64 to prevent bus contention.
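A rough lane budget makes the "more than 64" guideline concrete. The Python sketch below assumes every device attaches directly to CPU lanes and ignores PCIe switches, which fan one upstream link out to several devices. The device mix and the 128-lane CPU in the example are hypothetical.

```python
LANES_PER_GPU = 16  # each GPU on a dedicated x16 link


def pcie_lane_budget(num_gpus: int, other_device_lanes: int, cpu_lanes: int) -> dict:
    """Compare lanes needed by GPUs plus other devices against lanes the CPU(s) expose."""
    gpu_lanes = num_gpus * LANES_PER_GPU
    needed = gpu_lanes + other_device_lanes
    return {
        "gpu_lanes": gpu_lanes,
        "needed": needed,
        "available": cpu_lanes,
        "headroom": cpu_lanes - needed,
        "fits": needed <= cpu_lanes,
    }


# Hypothetical build: two x4 NVMe drives plus one x16 NIC, on a CPU exposing 128 lanes.
other = 2 * 4 + 16
print(pcie_lane_budget(num_gpus=4, other_device_lanes=other, cpu_lanes=128))
print(pcie_lane_budget(num_gpus=8, other_device_lanes=other, cpu_lanes=128))
```

The eight-GPU case overruns 128 lanes once storage and networking are counted. Such builds typically rely on dual-socket platforms or PCIe switches.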

RAM Configuration: The Data Highway

Memory acts as the bridge between CPU-hosted control logic and GPU-accelerated computations. Insufficient capacity leads to disk swapping, a performance killer, while inadequate bandwidth chokes data transfer rates. The baseline rule: allocate RAM at 1.5x the total GPU VRAM. For eight GPUs each with 40GB VRAM, 480GB becomes the minimum, with 512GB or more ideal for buffer-heavy workloads.
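The 1.5x rule gives a floor, but real capacity comes in steps set by DIMM sizes and channel count. It should also be reached with identical DIMMs in every channel so interleaving keeps working. The sketch below rounds the floor up to the smallest symmetric configuration. It assumes an eight-channel platform with up to two DIMMs per channel and the DIMM sizes listed in the code, all hypothetical. On ties it prefers one DIMM per channel, which many platforms allow to run at a higher speed.

```python
def min_host_ram_gb(num_gpus: int, vram_per_gpu_gb: int, factor: float = 1.5) -> float:
    """Host RAM floor from the 1.5x-total-VRAM rule of thumb."""
    return num_gpus * vram_per_gpu_gb * factor


def symmetric_capacity_gb(required_gb: float, channels: int,
                          dimm_sizes_gb=(16, 32, 64, 128),
                          max_dimms_per_channel: int = 2) -> tuple[int, int, int]:
    """Smallest capacity >= required_gb using identical DIMMs spread evenly over all channels.

    Returns (total_gb, dimm_size_gb, dimms_per_channel); ties favor fewer DIMMs per channel.
    """
    best = None
    for size in dimm_sizes_gb:
        for per_channel in range(1, max_dimms_per_channel + 1):
            total = size * channels * per_channel
            if total < required_gb:
                continue
            if best is None or total < best[0] or (total == best[0] and per_channel < best[2]):
                best = (total, size, per_channel)
    if best is None:
        raise ValueError("requirement exceeds what these DIMMs and channels can reach")
    return best


need = min_host_ram_gb(8, 40)  # eight GPUs with 40GB VRAM each
total, size, per_channel = symmetric_capacity_gb(need, channels=8)
print(f"floor {need:.0f} GB -> {total} GB as {per_channel} x {size} GB DIMM per channel")
```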

- Frequency and Channels: DDR4-3200+ or DDR5-4800+ modules ensure fast data movement. In setups with four or more GPUs, populate every memory channel (eight channels where the platform offers them) to parallelize memory access, matching the bandwidth demands of high-throughput GPU clusters.

- Error Resilience: ECC memory is non-negotiable for long-running tasks like AI training, where undetected bit errors can corrupt model weights. Pair this with thermal design—choosing modules with robust heat spreaders to maintain stability in tightly packed server racks.

- Population Strategies: Follow motherboard slot symmetry to enable memory interleaving, which distributes access across controller channels. Avoid partial channel utilization, as a single populated DIMM in a dual-channel setup halves effective bandwidth.
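Peak theoretical bandwidth scales linearly with transfer rate and the number of populated channels. Each DDR4 or DDR5 channel moves 64 bits (8 bytes) of data per transfer; DDR5 splits this into two 32-bit subchannels. The sketch computes that ceiling and checks whether a population plan is symmetric. The channel names and the plan are hypothetical. Measured bandwidth, for example from STREAM, will land below the ceiling.

```python
BYTES_PER_TRANSFER = 8  # 64-bit data path per channel (ECC bits excluded)


def peak_bandwidth_gbs(mt_per_s: int, populated_channels: int) -> float:
    """Theoretical peak in GB/s: MT/s x 8 bytes gives MB/s per channel, times channels, / 1000."""
    return mt_per_s * BYTES_PER_TRANSFER * populated_channels / 1000


def is_symmetric(population: dict[str, list[int]], total_channels: int) -> bool:
    """True when every channel is populated with the same set of DIMM sizes (in GB)."""
    if len(population) != total_channels:
        return False
    layouts = {tuple(sorted(dimms)) for dimms in population.values()}
    return len(layouts) == 1 and () not in layouts


for channels in (1, 2, 8):
    print(f"DDR4-3200, {channels} channel(s): {peak_bandwidth_gbs(3200, channels):.1f} GB/s")

plan = {f"ch{i}": [64] for i in range(8)}  # hypothetical: one 64GB DIMM per channel
print("symmetric:", is_symmetric(plan, total_channels=8))
```

Going from two channels to one halves the ceiling, which is the partial-population penalty described above.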

Scenario-Specific Tuning

Workload characteristics dictate final configurations. Let’s explore common use cases:

Deep Learning Training

Here, data preprocessing (image decoding, augmentation, normalization) runs on the CPU while GPUs handle tensor operations, including layers such as batch normalization. A 24-core CPU with 48 threads balances preprocessing parallelism with GPU task scheduling. 512GB of DDR4-3600 in eight channels ensures smooth data feeding to four or eight GPUs, preventing pipeline stalls during mini-batch iterations.
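One way to turn the 24-core figure into settings is to split cores evenly among the GPUs and give most of each share to preprocessing workers. The sketch assumes one training process per GPU that keeps one core for itself. That reservation is a hypothetical starting value. The result would feed a setting such as the `num_workers` argument of PyTorch's `DataLoader`.

```python
def loader_workers_per_gpu(physical_cores: int, num_gpus: int, reserved_per_gpu: int = 1) -> int:
    """Split cores evenly across GPUs, keeping `reserved_per_gpu` cores per training process."""
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    share = physical_cores // num_gpus
    return max(share - reserved_per_gpu, 0)


for gpus in (4, 8):
    workers = loader_workers_per_gpu(24, gpus)
    print(f"24 cores, {gpus} GPUs: {workers} preprocessing workers per GPU")
```

With eight GPUs, each process gets only two workers. If augmentation is heavy, that is where pipeline stalls would appear first.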

Scientific Computing

Applications like molecular dynamics simulations require tight CPU-GPU synchronization. Higher base frequencies (2.8GHz+) reduce latency in inter-processor communication, while 256GB of high-frequency RAM minimizes wait times for large dataset chunks moving between host and device memory.
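Host-to-device copy time is roughly chunk size divided by effective link bandwidth. PCIe 3.0, 4.0 and 5.0 run at 8, 16 and 32 GT/s per lane with 128b/130b encoding. A PCIe 4.0 x16 link therefore tops out near 31.5 GB/s per direction. The `efficiency` factor below is an assumed allowance for protocol and driver overhead, not a measured value.

```python
GT_PER_S = {3: 8, 4: 16, 5: 32}  # per-lane transfer rate (GT/s) by PCIe generation
ENCODING = 128 / 130              # 128b/130b line encoding used by PCIe 3.0 through 5.0


def link_bandwidth_gbs(gen: int, lanes: int = 16) -> float:
    """Theoretical one-direction rate after line encoding, in GB/s."""
    return GT_PER_S[gen] * lanes * ENCODING / 8


def transfer_seconds(chunk_gb: float, gen: int, lanes: int = 16, efficiency: float = 0.8) -> float:
    """Estimated host-to-device copy time; `efficiency` is an assumed overhead factor."""
    return chunk_gb / (link_bandwidth_gbs(gen, lanes) * efficiency)


for gen in (3, 4, 5):
    print(f"PCIe {gen}.0 x16: {link_bandwidth_gbs(gen):.1f} GB/s, "
          f"8 GB chunk in ~{transfer_seconds(8, gen):.2f} s")
```

Real transfers also depend on whether the host buffer is pinned (page-locked). Pageable memory usually copies more slowly.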

High-End Graphics Rendering

Massive asset loading demands cavernous memory spaces—1TB or more—to hold scene data in RAM, eliminating disk I/O delays. A 64-core CPU manages thousands of simultaneous rendering tasks, distributing jobs across GPUs while maintaining real-time preview responsiveness.
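Two checks from the rendering case can be sketched in Python. The first asks whether a scene fits in RAM once some headroom is set aside. The second spreads render jobs across GPUs. The 20% reserve, the asset sizes and the job costs are hypothetical. The scheduler is a swapped-in classic, greedy longest-processing-time-first (LPT), not a description of any particular renderer.

```python
import heapq


def scene_fits_in_ram(asset_sizes_gb: list[float], ram_gb: float, reserve_fraction: float = 0.2) -> bool:
    """True if all assets fit after reserving a share of RAM for the OS, renderer and job buffers."""
    usable = ram_gb * (1 - reserve_fraction)
    return sum(asset_sizes_gb) <= usable


def assign_jobs(job_costs: dict[str, float], num_gpus: int) -> dict[int, list[str]]:
    """LPT: hand each job, largest first, to the currently least-loaded GPU."""
    heap = [(0.0, gpu) for gpu in range(num_gpus)]  # (accumulated cost, gpu index)
    heapq.heapify(heap)
    plan = {gpu: [] for gpu in range(num_gpus)}
    for job, cost in sorted(job_costs.items(), key=lambda kv: kv[1], reverse=True):
        load, gpu = heapq.heappop(heap)
        plan[gpu].append(job)
        heapq.heappush(heap, (load + cost, gpu))
    return plan


scene = [420, 260, 110]  # hypothetical textures, geometry, caches (GB)
print("fits in 1TB:", scene_fits_in_ram(scene, 1024))
print("fits in 512GB:", scene_fits_in_ram(scene, 512))

jobs = {"shot_a": 8, "shot_b": 7, "shot_c": 6, "shot_d": 5, "shot_e": 4}
print(assign_jobs(jobs, num_gpus=2))
```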

Hong Kong Hosting Considerations

Regional data centers pose unique challenges. High ambient temperatures and space constraints require hardware certified for extended operating ranges (0–85°C). Prioritize motherboards and components on local compatibility lists to avoid firmware conflicts common in multi-vendor setups.

- Cost vs. Performance: Entry-level setups with two GPUs might opt for 16-core CPUs and 128GB RAM, balancing budget with research-grade performance. Enterprise clusters, however, justify 64-core CPUs and terabyte-scale RAM to maximize throughput in 24/7 distributed training.

- Future-Proofing: Reserve 25% of DIMM slots and PCIe lanes for upgrades. On platforms that support memory hot-add, capacity can grow without downtime, which is critical for businesses scaling GPU fleets incrementally.
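Reserving 25% of DIMM slots interacts with the symmetry rule. The populated count should stay a multiple of the channel count, so the reservation sometimes has to round up. A minimal sketch follows; the slot, channel and lane totals are hypothetical board figures.

```python
def slots_to_populate(total_slots: int, channels: int, reserve_fraction: float = 0.25) -> int:
    """Largest multiple of `channels` that leaves at least `reserve_fraction` of slots free."""
    budget = int(total_slots * (1 - reserve_fraction))
    return (budget // channels) * channels


def lanes_to_allocate(total_lanes: int, reserve_fraction: float = 0.25) -> int:
    """Lanes available for the initial build after holding back the reserve."""
    return int(total_lanes * (1 - reserve_fraction))


for slots, channels in ((16, 8), (32, 8), (24, 12)):
    used = slots_to_populate(slots, channels)
    print(f"{slots} slots / {channels} channels: populate {used}, keep {slots - used} free")
print("128 lanes -> allocate", lanes_to_allocate(128))
```

On a 16-slot, eight-channel board, the rounding leaves half the slots empty rather than a quarter. That is the price of keeping every channel populated evenly.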

Common Pitfalls and Mitigation

Even experienced engineers fall into traps:

- Core Overprovisioning: Beyond a 4:1 core-to-GPU ratio, scheduler overhead increases without proportional gains. Monitor with tools like nvidia-smi and htop to identify idle core percentages.

- Memory Channel Asymmetry: Running a dual-channel setup in single-channel mode cuts bandwidth in half. Use hardware monitoring tools to verify channel utilization and rebalance DIMM placement as needed.

- VRAM-RAM Mismatch: Insufficient RAM forces GPUs to wait for data, visible in low GPU utilization metrics during compute phases. Upgrade memory or optimize data pipelines to reduce intermediate tensor sizes.
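GPU starvation shows up as low utilization during compute phases. The sketch below reads per-GPU utilization through `nvidia-smi`'s CSV query mode and flags GPUs under a threshold. The 50% threshold is an assumption. A single reading is noisy, so sampling several times during compute phases gives a fairer picture.

```python
import subprocess

# nvidia-smi CSV query: one "index, utilization" line per GPU, no header, no units.
QUERY = ["nvidia-smi", "--query-gpu=index,utilization.gpu", "--format=csv,noheader,nounits"]


def parse_utilization(csv_text: str) -> dict[int, int]:
    """Map GPU index -> utilization percent from the CSV query output."""
    util = {}
    for line in csv_text.strip().splitlines():
        index, busy = (field.strip() for field in line.split(","))
        util[int(index)] = int(busy)
    return util


def starved_gpus(util: dict[int, int], threshold: int = 50) -> list[int]:
    """GPUs below the (assumed) utilization threshold."""
    return sorted(i for i, pct in util.items() if pct < threshold)


def sample_live() -> dict[int, int]:
    """Run the query on a machine with NVIDIA drivers installed."""
    out = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
    return parse_utilization(out)


sample = "0, 97\n1, 34\n2, 95\n3, 41\n"  # hypothetical captured output
print(starved_gpus(parse_utilization(sample)))  # [1, 3]
```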

Validation and Optimization

Post-deployment, rigorous testing ensures optimal performance:

- Run synthetic benchmarks such as STREAM for memory bandwidth and Geekbench for CPU and GPU compute performance.

- Monitor thermal thresholds with embedded IPMI tools, ensuring RAM and CPU temperatures stay within manufacturer specs, especially in dense Hong Kong colocation racks.

- Iterate on configurations using A/B testing—compare training times or rendering speeds between slight hardware variations to identify optimal settings.
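An A/B comparison is only meaningful if the difference between configurations exceeds run-to-run noise. Below is a minimal sketch using Python's `statistics` module. The epoch times are hypothetical. The "clear win" rule (gap between means larger than the sum of standard deviations) is a crude heuristic, not a formal significance test.

```python
from statistics import mean, stdev


def compare_runs(baseline_s: list[float], candidate_s: list[float]) -> dict:
    """Summarize repeated timings (seconds) for two configurations; needs >= 2 runs each."""
    base, cand = mean(baseline_s), mean(candidate_s)
    base_sd, cand_sd = stdev(baseline_s), stdev(candidate_s)
    return {
        "baseline_mean": base,
        "candidate_mean": cand,
        "speedup": base / cand,
        # Heuristic: the gap must exceed the combined spread of both configurations.
        "clear_win": (base - cand) > (base_sd + cand_sd),
    }


# Hypothetical epoch times for two DIMM layouts, three runs each.
result = compare_runs([612, 608, 615], [571, 575, 569])
print(f"speedup {result['speedup']:.2f}x, clear win: {result['clear_win']}")
```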

In conclusion, configuring multi-GPU servers transcends component selection; it’s about creating a symbiotic system where each hardware layer enhances the others. By respecting core-GPU ratios, prioritizing memory bandwidth, and adapting to regional infrastructure demands, engineers can build setups that deliver sustained performance—whether in a Hong Kong hosting facility or a global distributed cluster. The goal? A balanced architecture where no component acts as a bottleneck, letting the GPU array achieve its full computational potential.