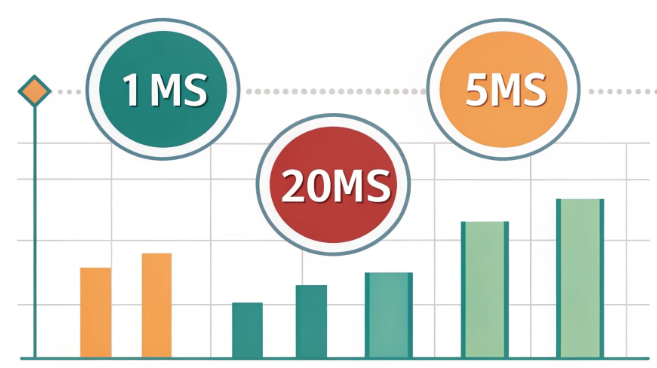

1ms vs 5ms vs 20ms Network Latency: A Technical Deep Dive

Network latency is a fundamental metric in infrastructure design and is particularly important when choosing hosting and colocation services. For latency-sensitive applications, the difference between a 1ms, 5ms, or 20ms network can decide whether the application meets its requirements. Architects, developers, and system administrators who design and maintain high-performance systems need to understand these distinctions.

Understanding Network Latency Fundamentals

Network latency is often treated as simply the time it takes to transmit data, but it is the sum of several delays: propagation through the medium, serialization onto the link, queuing in buffers, and processing in each device along the path. The most common measurement sends ICMP echo requests (pings) between two points and reports the round-trip time, but this only tells part of the story: a ping samples a single moment, and network devices may treat ICMP differently from application traffic. The main contributing factors are:

- Physical distance between nodes: light in fiber travels at roughly two thirds of its vacuum speed (about 200,000 km/s), which adds approximately 1ms of round-trip time per 100 kilometers of fiber route

- Network routing efficiency, which depends on BGP routing policies, route optimization, and peering agreements

- Hardware processing capabilities, including NIC buffer sizes, CPU scheduling, and interrupt handling

- Network congestion levels, which affect queue depth and can cause bufferbloat

- Protocol overhead from TCP/IP stack processing and encryption/decryption operations
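The distance factor can be checked with simple arithmetic, and it explains why each latency tier implies a geographic limit. The Python sketch below assumes a refractive index of about 1.468 for single-mode fiber (an assumed typical value) and counts propagation delay only, so it gives an upper bound on how much fiber route fits inside each tier; switching, queuing, and routing detours all consume part of that budget in practice. The function names are illustrative.

```python
# Rough propagation-delay model for light in fiber.
# Assumption: a refractive index of ~1.468, typical of standard single-mode
# fiber. Only propagation is modeled; other delays are ignored.

SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in vacuum
FIBER_REFRACTIVE_INDEX = 1.468     # assumed typical value for single-mode fiber


def fiber_speed_km_s(refractive_index: float = FIBER_REFRACTIVE_INDEX) -> float:
    """Signal speed in fiber: c divided by the refractive index (~2/3 of c)."""
    return SPEED_OF_LIGHT_KM_S / refractive_index


def propagation_rtt_ms(route_km: float) -> float:
    """Round-trip propagation delay over a fiber route of the given length."""
    one_way_s = route_km / fiber_speed_km_s()
    return 2 * one_way_s * 1000


def max_route_km(rtt_budget_ms: float) -> float:
    """Longest fiber route whose propagation delay alone fits the RTT budget."""
    one_way_s = (rtt_budget_ms / 1000) / 2
    return one_way_s * fiber_speed_km_s()


if __name__ == "__main__":
    print(f"100 km RTT: {propagation_rtt_ms(100):.3f} ms")
    for budget in (1, 5, 20):
        print(f"{budget:>2} ms RTT -> at most ~{max_route_km(budget):,.0f} km of fiber")
```

Run as a script, it reports roughly 0.98 ms for a 100 km route, and about 100 km, 510 km, and 2,040 km of fiber for the 1ms, 5ms, and 20ms budgets. Real fiber routes rarely follow the straight line between two sites, so the usable geographic radius is smaller still.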

1ms Network Architecture Deep Dive

1ms networks represent the cutting edge of network engineering, using specialized hardware and tightly controlled fiber paths to keep round-trip times at or below one millisecond. Because propagation in fiber alone costs about 1ms of round-trip time per 100 kilometers, this tier is realistic only over short, metro-scale distances. The infrastructure typically requires dedicated dark fiber routes and custom-configured hardware to keep latency consistently low, and by some estimates it costs 10-20 times more than standard networking solutions.

- Direct fiber connections utilizing advanced single-mode fiber with optimized wavelength selection

- Specialized NICs with kernel bypass technology and FPGA acceleration

- Layer 1 switching with custom ASICs for minimal processing overhead

- Hardware acceleration through TCP offload engines and specialized network processors

Use cases for 1ms networks include:

- High-frequency trading platforms requiring microsecond-level execution times

- Real-time financial systems processing millions of transactions per second

- Professional eSports servers where every millisecond affects gameplay

- Mission-critical real-time applications in healthcare and emergency response systems

5ms Network Configuration Analysis

5ms networks represent the sweet spot for many enterprise applications, offering an optimal balance between performance and cost-effectiveness. These networks utilize enterprise-grade equipment and carefully planned topology to maintain consistent sub-5ms latency across metropolitan areas. The architecture typically incorporates redundant paths and sophisticated traffic engineering to ensure reliability without sacrificing performance.

- Regional fiber networks with optimized metropolitan area coverage

- Enterprise-grade routing with traffic engineering and traffic shaping

- Quality of Service (QoS) policies based on packet classification and marking

- Multi-path load balancing using Equal-Cost Multi-Path (ECMP) routing
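ECMP typically keeps each flow on a single path by hashing packet header fields, so packets within a flow are not reordered while different flows spread across all equal-cost paths. The Python sketch below illustrates the idea with the 5-tuple and `zlib.crc32`; real routers use their own hash functions and field selections, so `FlowKey` and `ecmp_next_hop` are hypothetical illustrations rather than any vendor's behavior.

```python
import zlib
from typing import NamedTuple, Sequence


class FlowKey(NamedTuple):
    """The classic 5-tuple that identifies a flow."""
    src_ip: str
    dst_ip: str
    protocol: int  # e.g. 6 for TCP, 17 for UDP
    src_port: int
    dst_port: int


def ecmp_next_hop(flow: FlowKey, next_hops: Sequence[str]) -> str:
    """Pick a next hop by hashing the flow's 5-tuple.

    Every packet of the same flow hashes to the same path (no reordering),
    while different flows are spread across all paths. CRC32 stands in for
    whatever hash a real router's forwarding hardware uses.
    """
    if not next_hops:
        raise ValueError("no next hops available")
    key = "|".join(str(field) for field in flow).encode()
    return next_hops[zlib.crc32(key) % len(next_hops)]


if __name__ == "__main__":
    paths = ["10.0.0.1", "10.0.0.2", "10.0.0.3", "10.0.0.4"]
    flow = FlowKey("192.0.2.10", "198.51.100.20", 6, 51514, 443)
    print(ecmp_next_hop(flow, paths))
```

One drawback of plain modulo hashing is that adding or removing a path remaps most flows; some platforms offer resilient hashing to limit that disruption.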

Optimal applications include:

- Enterprise SaaS platforms serving thousands of concurrent users

- Content delivery networks requiring rapid content distribution

- Cloud gaming services with real-time interaction requirements

- Video streaming platforms delivering 4K and 8K content

20ms Network Infrastructure Overview

20ms networks form the backbone of most internet services, providing reliable and cost-effective connectivity for the majority of web applications. While not suitable for ultra-low-latency requirements, these networks offer excellent value and stability for standard business operations. The infrastructure utilizes proven technologies and standardized protocols, making it easier to maintain and scale.

- Standard routing protocols implementing OSPF and BGP with default configurations

- Conventional network hardware from major vendors like Cisco, Juniper, and Arista

- Traditional TCP/IP optimization using standard window sizes and buffer configurations

- Basic traffic management through conventional QoS policies and rate limiting
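Rate limiting of the kind listed above is commonly built on a token bucket. This Python sketch makes a police-style pass/fail decision per packet; a shaper would instead hold the packet until enough tokens accumulate. The class name, its parameters, and the injectable `clock` (used here so behavior can be tested deterministically) are illustrative assumptions, not a specific vendor's implementation.

```python
import time
from typing import Callable


class TokenBucket:
    """Token-bucket rate limiter, the mechanism behind many policers and shapers.

    Tokens accrue at `rate` per second up to `capacity` (the allowed burst).
    A packet of `size` units passes only if that many tokens are available.
    """

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity  # start with a full bucket
        self.clock = clock
        self.last = clock()

    def allow(self, size: float = 1.0) -> bool:
        now = self.clock()
        # Refill for the elapsed time, never exceeding the burst size.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= size:
            self.tokens -= size
            return True
        return False  # a policer drops or re-marks this packet
```

With sizes in bytes, `rate=125_000_000` would enforce roughly 1 Gbps, while `capacity` sets how large a burst may pass at line rate before the limit applies.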

Suitable implementations include:

- Web hosting services for content management systems and e-commerce platforms

- Email servers handling thousands of daily transactions

- Backup systems performing scheduled data replication

- Development environments for distributed teams

Technical Performance Comparison

Performance evaluation requires analyzing several metrics beyond simple ping times. Network monitoring tools can show how a network behaves across different time scales and conditions, and engineers must consider both steady-state performance and behavior under stress.

- Round-trip time (RTT), measured repeatedly and summarized with minimum, median, and tail percentiles rather than a single average

- Jitter (variation in delay between packets), analyzed statistically across many samples

- Packet loss rates under various network load conditions

- Throughput capabilities measured in both sustained and burst scenarios

- Buffer requirements calculated based on traffic patterns and QoS requirements
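Several of these metrics can be gathered with a small script. This Python sketch times TCP handshakes as an RTT probe, an assumption chosen because sending ICMP from a program usually requires raw-socket privileges (a handshake also includes the remote host's SYN handling time), and then summarizes the samples. Jitter here is the mean absolute difference between consecutive RTTs, a simplified relative of the interarrival jitter defined in RFC 3550. The function names are hypothetical.

```python
import socket
import statistics
import time
from typing import Dict, List, Optional


def tcp_connect_rtt_ms(host: str, port: int, timeout: float = 2.0) -> Optional[float]:
    """Time one TCP handshake as an RTT probe; None means the probe failed (loss)."""
    start = time.perf_counter()
    try:
        with socket.create_connection((host, port), timeout=timeout):
            pass  # connect() returns once the SYN-ACK arrives: about one RTT
    except OSError:
        return None
    return (time.perf_counter() - start) * 1000


def summarize(samples: List[Optional[float]]) -> Dict[str, float]:
    """RTT, jitter, and loss summary for a series of probes (None = lost)."""
    rtts = [s for s in samples if s is not None]
    if len(rtts) < 2:
        raise ValueError("need at least two successful samples")
    # Mean absolute difference between consecutive RTTs as a simple jitter figure.
    diffs = [abs(b - a) for a, b in zip(rtts, rtts[1:])]
    return {
        "min_ms": min(rtts),
        "median_ms": statistics.median(rtts),
        # "inclusive" keeps the percentile within the observed range.
        "p99_ms": statistics.quantiles(rtts, n=100, method="inclusive")[-1],
        "jitter_ms": statistics.fmean(diffs),
        "loss_pct": 100 * (len(samples) - len(rtts)) / len(samples),
    }
```

Pass an IP address rather than a hostname so DNS resolution is not counted as network latency, and collect many samples, for example one per second over several minutes, before comparing percentiles between networks.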

Infrastructure Selection Guidelines

Selecting appropriate network infrastructure requires careful consideration of technical requirements and business constraints. The decision-making process should involve stakeholders from multiple disciplines, including network engineering, application development, and business operations.

- Application response time requirements based on user experience metrics and SLAs

- Geographic distribution of users affecting network topology and point-of-presence selection

- Traffic patterns and volume analysis using historical data and growth projections

- Scalability needs considering both horizontal and vertical scaling requirements

- Budget constraints balanced against performance requirements and business impact
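A response-time SLA can be translated into a network RTT budget by subtracting server processing time and dividing by the number of sequential round trips a request needs. The Python sketch below assumes each tier's nominal latency is the RTT a request actually sees and ignores jitter and tail latency; `TIERS_MS`, the round-trip count, and the rule that the slowest qualifying tier is the cheapest are simplifying assumptions based on the tiers discussed in this article.

```python
from typing import Optional

# Nominal round-trip latencies of the three tiers discussed in this article.
TIERS_MS = (1.0, 5.0, 20.0)


def rtt_budget_ms(sla_ms: float, server_ms: float, round_trips: int) -> float:
    """Largest network RTT that still meets the SLA.

    The network's share of the SLA (what remains after server processing)
    is divided by the number of sequential round trips the request needs.
    """
    if round_trips < 1:
        raise ValueError("round_trips must be at least 1")
    return max(0.0, (sla_ms - server_ms) / round_trips)


def cheapest_tier(sla_ms: float, server_ms: float, round_trips: int) -> Optional[float]:
    """Slowest (assumed cheapest) tier that fits the RTT budget, or None."""
    budget = rtt_budget_ms(sla_ms, server_ms, round_trips)
    fitting = [tier for tier in TIERS_MS if tier <= budget]
    return max(fitting) if fitting else None
```

For example, a 100 ms SLA with 40 ms of server time and four round trips (say, a TCP handshake, a TLS 1.3 handshake, and two sequential requests) leaves 15 ms per round trip, so `cheapest_tier(100, 40, 4)` returns `5.0`: the 20ms tier would miss the SLA.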

Implementation Best Practices

Successful network implementation requires attention to detail and adherence to industry best practices. Regular auditing and adjustment of network parameters ensure optimal performance over time. Modern DevOps practices often incorporate network optimization as part of continuous improvement cycles.

- Deploy comprehensive network monitoring tools with real-time alerting capabilities

- Implement sophisticated traffic shaping algorithms based on application priorities

- Maintain redundant connections with automatic failover mechanisms

- Establish regular performance benchmarking procedures and baseline metrics

- Create detailed network topology documentation with regular updates
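Monitoring, alerting, and automatic failover can be tied together by choosing the active link from recent probe results. This is a minimal Python sketch; the `Link` fields and the 10 ms and 1% thresholds are hypothetical values that should come from your own baseline measurements.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Link:
    name: str
    priority: int       # lower value = preferred
    p99_rtt_ms: float   # from recent probes
    loss_pct: float     # from recent probes


# Hypothetical health thresholds; derive real ones from baseline measurements.
MAX_P99_RTT_MS = 10.0
MAX_LOSS_PCT = 1.0


def healthy(link: Link) -> bool:
    """A link is healthy when both tail latency and loss are within limits."""
    return link.p99_rtt_ms <= MAX_P99_RTT_MS and link.loss_pct <= MAX_LOSS_PCT


def select_active(links: List[Link]) -> Optional[Link]:
    """Prefer the highest-priority healthy link; None means raise an alert."""
    candidates = [link for link in links if healthy(link)]
    return min(candidates, key=lambda link: link.priority) if candidates else None
```

A production version would add hysteresis, for example requiring several consecutive failed checks before switching, so that a brief spike does not make traffic flap between links.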

Troubleshooting and Optimization

Network optimization is an ongoing process requiring continuous monitoring and adjustment. Engineers should establish clear procedures for identifying and resolving performance issues while maintaining system stability.

- TCP window size adjustment based on bandwidth-delay product calculations

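- Buffer tuning using active queue management (AQM) algorithms such as RED, combined with ECN so congestion can be signaled by marking packets instead of dropping them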

- Route optimization through BGP path selection and local preference settings

- Hardware acceleration enablement for specific protocols and applications

- Protocol optimization including TCP stack tuning and UDP buffer configuration
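Two of the calculations behind these tuning steps are short enough to show directly: the bandwidth-delay product (BDP), which sets how much data TCP must keep in flight to fill a path, and the basic RED drop curve. This Python sketch is simplified: the RED function takes the average queue length as an input and omits the count-based probability adjustment of the original algorithm. The function names are illustrative.

```python
def bdp_bytes(bandwidth_bps: float, rtt_ms: float) -> int:
    """Bandwidth-delay product: bytes in flight needed to keep the path full."""
    return round(bandwidth_bps * (rtt_ms / 1000) / 8)


def red_drop_probability(avg_queue: float, min_th: float, max_th: float,
                         max_p: float = 0.1) -> float:
    """Simplified RED probability for a given average queue length.

    Below min_th nothing is dropped; between the thresholds the probability
    rises linearly to max_p; at or above max_th every arriving packet is
    affected. The probability applies to dropping or, for ECN-capable
    traffic, marking a packet.
    """
    if avg_queue < min_th:
        return 0.0
    if avg_queue >= max_th:
        return 1.0
    return max_p * (avg_queue - min_th) / (max_th - min_th)
```

A 10 Gbps path with a 20ms RTT gives `bdp_bytes(10e9, 20)` = 25,000,000 bytes, whereas the same link at 1ms needs only 1,250,000 bytes in flight. On Linux, figures like these inform the maximum values in the `net.ipv4.tcp_rmem` and `net.ipv4.tcp_wmem` sysctls.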

Future Considerations

The networking landscape continues to evolve with emerging technologies and protocols. Organizations must stay informed about new developments and plan for future upgrades and migrations.

- Edge computing integration strategies for reduced latency and improved performance

- AI-driven network optimization using machine learning for traffic prediction

- Quantum networking developments and their impact on encryption and security

- 5G and 6G integration for hybrid network architectures

Understanding the technical distinctions between 1ms, 5ms, and 20ms network latency is fundamental for hosting and colocation decision-making in today’s high-performance computing environment. The choice of network infrastructure must align with specific application requirements, performance goals, and overall business objectives while considering both current needs and future scalability.