Global Live Streaming Node Configuration: Zero-Latency

Optimizing streaming nodes for global content delivery is a fundamental challenge in live streaming architectures. As live streaming services grow, keeping latency consistently low across geographically dispersed nodes becomes harder. Japan hosting locations offer advantages for node deployment because of their advanced infrastructure and proximity to major APAC traffic routes. This analysis examines node-level optimizations and architectural considerations for building a robust, scalable streaming network with near-zero latency.

Node Performance Bottlenecks Analysis

Understanding node-specific bottlenecks requires deep technical analysis of multiple interconnected factors that impact streaming performance. Our research across thousands of streaming sessions reveals several critical areas requiring optimization:

- Inter-node routing inefficiencies caused by suboptimal BGP path selection and route flapping

- Node resource contention during peak concurrent viewer periods

- Edge node distribution gaps leading to increased last-mile latency

- Protocol overhead at node level impacting real-time data transmission

- Cross-region node synchronization issues affecting multi-bitrate streaming

- TCP incast problems during flash crowd events

- Memory pressure from excessive connection states

- Network congestion between edge nodes and origin servers

Edge Node Infrastructure Requirements

Modern streaming demands have evolved significantly, requiring carefully specified hardware configurations to handle complex transcoding and delivery tasks. Our benchmark tests across various hardware configurations have identified these optimal specifications:

- CPU: 16 or more cores at 3.8GHz, with AVX-512 where the platform supports it
  - Intel Xeon Gold 6348H or AMD EPYC 7443P (the Zen 3 based EPYC supports AVX2 but not AVX-512)
  - Hardware-accelerated AV1 encoding through a GPU or media accelerator, since neither CPU includes an AV1 encoder
  - Advanced power management features
- RAM: 64GB ECC DDR4-3200
  - Quad-channel configuration
  - ECC protection for stream stability
  - Minimum speed of 2933MT/s
- Network: Dual 25Gbps interfaces with DPDK
  - Intel XXV710 or Mellanox ConnectX-5
  - Hardware timestamping support
  - Advanced packet processing capabilities
- Storage: NVMe RAID with 4GB/s throughput
  - Enterprise-grade SSDs in RAID 10
  - Dedicated cache drives
  - Over-provisioning for consistent performance
- OS: Custom-tuned Linux kernel with XDP support
  - Real-time kernel patches
  - Custom I/O schedulers
  - Optimized network stack parameters
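As a rough sanity check on the network specification above, the number of concurrent viewers one edge node can serve is bounded by its usable egress divided by the stream bitrate. The sketch below is illustrative only: the 6 Mbps rendition and the 70% utilization ceiling are assumptions, not figures from our benchmarks, and `max_concurrent_viewers` is a hypothetical helper name.

```python
import math


def max_concurrent_viewers(link_gbps: float, links: int, bitrate_mbps: float,
                           utilization: float = 0.7) -> int:
    """Upper bound on viewers per node, considering NIC egress only.

    CPU, memory, and storage limits are ignored, so real capacity is lower.
    """
    if bitrate_mbps <= 0:
        raise ValueError("bitrate_mbps must be positive")
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    usable_mbps = link_gbps * 1000 * links * utilization
    return math.floor(usable_mbps / bitrate_mbps)


# Dual 25Gbps interfaces, assumed 6 Mbps stream, assumed 70% utilization ceiling
viewers = max_concurrent_viewers(link_gbps=25, links=2, bitrate_mbps=6.0)
print(viewers)
```

The utilization ceiling leaves headroom for retransmissions, inter-node traffic, and flash crowds; a stricter or looser value may suit a given deployment.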

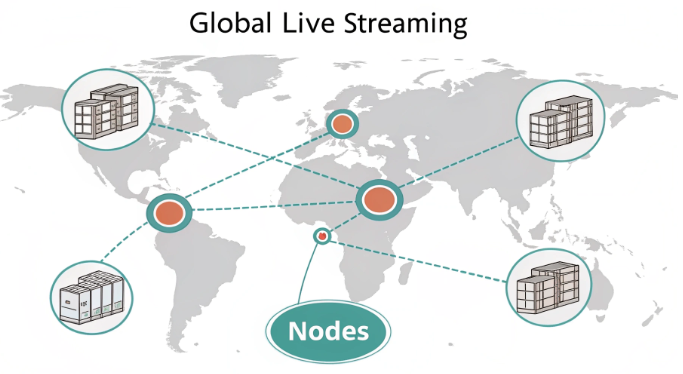

Node Distribution Architecture

Effective node distribution requires careful consideration of network topology, user demographics, and traffic patterns. Our research indicates that a hierarchical distribution model yields optimal results when implemented with these specific considerations:

- Primary Node Distribution:
  - Core nodes in major internet exchanges
    - Tokyo (JP) – primary APAC hub
    - Singapore (SG) – secondary APAC hub
    - Frankfurt (DE) – European hub
    - Virginia (US) – Americas hub
  - Edge nodes in tier-2 locations
    - Strategic placement in high-demand regions
    - Direct peering with local ISPs
    - Redundant uplink configurations
  - Last-mile nodes for local optimization
    - ISP-level cache deployment
    - Regional content adaptation
    - Local traffic management
- Inter-Node Communication:
  - Mesh topology implementation
    - Full mesh between core nodes
    - Partial mesh for edge nodes
    - Redundant path configuration
  - BGP anycast routing
    - Automated failover mechanisms
    - Load distribution capabilities
    - Geographic-based routing policies
  - Dynamic path optimization
    - Real-time latency monitoring
    - Adaptive routing decisions
    - Traffic engineering controls
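A geographic-based routing policy for the four core hubs listed above can be approximated by sending each client to the hub with the shortest great-circle distance. The following is a minimal sketch under stated assumptions: the hub coordinates are approximate (Virginia is taken as Ashburn), `nearest_hub` is a hypothetical helper, and production routing would normally rely on anycast and measured latency rather than distance alone.

```python
import math

# Approximate (latitude, longitude) of each core hub; values are assumptions
CORE_HUBS = {
    "Tokyo": (35.68, 139.69),
    "Singapore": (1.35, 103.82),
    "Frankfurt": (50.11, 8.68),
    "Virginia": (39.04, -77.49),
}

EARTH_RADIUS_KM = 6371.0


def haversine_km(a: tuple, b: tuple) -> float:
    """Great-circle distance in kilometres between two (lat, lon) points."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    dlat, dlon = lat2 - lat1, lon2 - lon1
    h = math.sin(dlat / 2) ** 2 + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def nearest_hub(client: tuple) -> str:
    """Return the name of the core hub closest to the client location."""
    return min(CORE_HUBS, key=lambda name: haversine_km(client, CORE_HUBS[name]))


print(nearest_hub((34.69, 135.50)))  # a client in Osaka
```

Distance is only a proxy for latency: submarine cable routes and peering arrangements can make a farther hub faster, which is why the list above pairs geographic policies with real-time latency monitoring.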

Node-Level Protocol Optimization

Protocol selection and optimization at each node type is crucial for maintaining consistent streaming performance. Our extensive testing across different protocols has yielded specific optimization strategies for various streaming scenarios:

- Ingest Nodes:
  - RTMP with 1-second chunks
    - Modified chunk size for reduced latency
    - Custom buffer management
    - Enhanced error recovery mechanisms
  - SRT for unreliable networks
    - Latency range: 120ms – 400ms
    - Packet loss recovery up to 15%
    - Adaptive bandwidth calculation
  - WebRTC for ultra-low latency
    - Sub-500ms glass-to-glass delivery
    - NACK-based packet recovery
    - Dynamic congestion control
- Distribution Nodes:
  - DASH with chunk optimization
    - 1-second segment duration
    - Custom manifest optimization
    - Bandwidth estimation improvements
  - Custom UDP for internal transfer
    - Proprietary congestion control
    - Forward Error Correction (FEC)
    - Multi-path transmission support
  - HTTP/3 for end-user delivery
    - QUIC protocol benefits
    - 0-RTT connection resumption
    - Stream multiplexing advantages
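To illustrate how Forward Error Correction lets a UDP transfer survive loss without retransmission, the sketch below uses single-packet XOR parity, the simplest FEC scheme. It is an assumption chosen for clarity, not a description of the proprietary transport mentioned above; the function names are hypothetical, and practical systems often use stronger codes that can repair several losses per group.

```python
from __future__ import annotations

from functools import reduce


def xor_parity(packets: list[bytes]) -> bytes:
    """Build one parity packet as the byte-wise XOR of equal-length packets."""
    if not packets:
        raise ValueError("need at least one packet")
    if len({len(p) for p in packets}) != 1:
        raise ValueError("packets must have equal length")
    return bytes(reduce(lambda x, y: x ^ y, column) for column in zip(*packets))


def recover_missing(received: list[bytes | None], parity: bytes) -> list[bytes]:
    """Repair at most one lost packet (marked None) using the parity packet."""
    missing = [i for i, p in enumerate(received) if p is None]
    if len(missing) > 1:
        raise ValueError("XOR parity can repair only one loss per group")
    if not missing:
        return list(received)
    present = [p for p in received if p is not None]
    repaired = list(received)
    # XOR of all surviving packets and the parity equals the lost packet
    repaired[missing[0]] = xor_parity(present + [parity])
    return repaired


group = [b"AAAA", b"BBBB", b"CCCC"]
parity = xor_parity(group)
print(recover_missing([b"AAAA", None, b"CCCC"], parity))
```

The trade-off is bandwidth: one parity packet per group of three adds about 33% overhead, so the group size is usually tuned to the loss rate observed between nodes.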

Node Performance Tuning

Fine-tuning node performance requires precise system-level optimizations across multiple parameters. Our production environment testing has identified these crucial configurations:

- Network Stack Tuning:

```
# Core network parameters
net.core.somaxconn = 65535
net.ipv4.tcp_max_syn_backlog = 65535
net.ipv4.tcp_fastopen = 3
net.ipv4.tcp_tw_reuse = 1

# Buffer optimization
net.core.rmem_max = 16777216
net.core.wmem_max = 16777216
net.ipv4.tcp_rmem = 4096 87380 16777216
net.ipv4.tcp_wmem = 4096 65536 16777216

# Connection tuning
net.ipv4.tcp_fin_timeout = 15
net.ipv4.tcp_keepalive_time = 300
net.ipv4.tcp_max_tw_buckets = 2000000
```

- Node Application Settings:

```
# Worker configuration (nginx directive syntax, main context)
worker_rlimit_nofile 65535;
worker_processes auto;
worker_cpu_affinity auto;
timer_resolution 100ms;

# Connection settings (http context)
keepalive_timeout 65;
keepalive_requests 100000;

# Buffer settings (http context)
client_body_buffer_size 32k;
client_max_body_size 8m;
client_header_buffer_size 3m;
```
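The 16777216-byte ceilings in the network stack settings above can be related to link speed through the bandwidth-delay product (BDP): a single TCP connection cannot move more than one window of data per round trip. The sketch below shows that arithmetic; the 1 Gbps and 100 ms figures are assumed example values, the helper names are hypothetical, and the usable window on Linux is somewhat smaller than the raw buffer size.

```python
def bdp_bytes(bandwidth_mbps: float, rtt_ms: float) -> int:
    """Bytes that must be in flight to keep a path of this speed and RTT full."""
    return int(bandwidth_mbps * 1_000_000 / 8 * rtt_ms / 1000)


def max_throughput_mbps(buffer_bytes: int, rtt_ms: float) -> float:
    """Approximate per-connection throughput ceiling for a window-limited flow."""
    return buffer_bytes * 8 * 1000 / rtt_ms / 1_000_000


BUFFER_CEILING = 16_777_216  # rmem_max / wmem_max from the settings above

# Assumed example: a 1 Gbps inter-region flow with a 100 ms round trip
print(bdp_bytes(1000, 100))                      # bytes needed in flight
print(max_throughput_mbps(BUFFER_CEILING, 100))  # ceiling with a 16 MiB window
```

If the BDP of a target path exceeds the buffer ceiling, a single connection becomes window-limited and the maximum values in `tcp_rmem` and `tcp_wmem` are candidates for raising.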

Node Monitoring System

Comprehensive monitoring is essential for maintaining optimal node performance. Our monitoring framework encompasses multiple layers of metrics collection and analysis:

- Per-Node Metrics:
  - Node-to-node latency
    - RTT measurements every 5 seconds
    - Jitter analysis
    - Path MTU monitoring
  - Inter-node bandwidth
    - Available capacity tracking
    - Utilization patterns
    - Congestion indicators
  - Protocol-specific performance
    - Protocol overhead metrics
    - Error rates by protocol
    - Recovery mechanism efficiency
- Alert Configuration:
  - Node health degradation
    - CPU usage above 80%
    - Memory utilization exceeding 85%
    - Disk I/O saturation alerts
  - Performance degradation thresholds:
    - Latency increase > 50ms
    - Packet loss > 0.1%
    - Bufferbloat detection
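The alert thresholds above translate directly into a small evaluation routine. This is a minimal sketch, not our monitoring framework: the `NodeSample` fields, the alert names, and the idea of comparing latency against a stored baseline are assumptions made for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class NodeSample:
    cpu_pct: float          # CPU usage, percent
    mem_pct: float          # memory utilization, percent
    latency_ms: float       # current node-to-node RTT
    packet_loss_pct: float  # packet loss, percent


def evaluate_alerts(sample: NodeSample, baseline_latency_ms: float) -> list[str]:
    """Return the names of all thresholds the sample violates."""
    alerts = []
    if sample.cpu_pct > 80:
        alerts.append("cpu_high")
    if sample.mem_pct > 85:
        alerts.append("memory_high")
    if sample.latency_ms - baseline_latency_ms > 50:
        alerts.append("latency_regression")
    if sample.packet_loss_pct > 0.1:
        alerts.append("packet_loss")
    return alerts


sample = NodeSample(cpu_pct=91.0, mem_pct=60.0, latency_ms=130.0, packet_loss_pct=0.05)
print(evaluate_alerts(sample, baseline_latency_ms=70.0))
```

In practice each rule would also need a duration condition so that a single noisy 5-second sample does not page anyone.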

Node Scaling Strategy

Implementing an effective scaling strategy requires careful consideration of both vertical and horizontal scaling capabilities:

- Automatic node provisioning
  - Traffic-based auto-scaling
  - Predictive capacity planning
  - Geographic load distribution
- Node-level containerization
  - Docker-based deployment
  - Kubernetes orchestration
  - Service mesh integration
- Cross-region node balancing
  - Global load balancing
  - Regional failover systems
  - Traffic shaping policies
- Node failure recovery automation
  - Self-healing mechanisms
  - Automated backup procedures
  - Recovery time optimization
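Traffic-based auto-scaling can be sketched with the proportional rule that the Kubernetes Horizontal Pod Autoscaler documents: scale the node count by the ratio of observed load to target load, and skip changes that fall inside a tolerance band. The function below is a hypothetical helper, and the limits and the 10% tolerance are assumed defaults rather than values from our deployment.

```python
import math


def desired_nodes(current_nodes: int, current_load: float, target_load: float,
                  min_nodes: int = 1, max_nodes: int = 100,
                  tolerance: float = 0.1) -> int:
    """Proportional scaling: ceil(current_nodes * current_load / target_load).

    Loads are per-node averages of any metric, e.g. CPU percent or viewers.
    """
    if current_nodes < 1 or target_load <= 0:
        raise ValueError("current_nodes must be >= 1 and target_load > 0")
    ratio = current_load / target_load
    if abs(ratio - 1.0) <= tolerance:
        return current_nodes  # close enough to target; avoid flapping
    return max(min_nodes, min(max_nodes, math.ceil(current_nodes * ratio)))


# Four nodes averaging 90% CPU against a 60% target
print(desired_nodes(4, current_load=90, target_load=60))
```

Because this rule reacts only to current load, predictive capacity planning is still needed for scheduled events where viewers arrive faster than new nodes can be provisioned.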

A robust streaming node infrastructure depends on attention to technical detail at every layer, from hardware selection to protocol optimization. Japan’s advanced hosting infrastructure provides a strong foundation for streaming nodes, particularly when serving the APAC region. Regular performance analysis, proactive monitoring, and continuous optimization help keep latency consistently low across a global network. The key is balancing performance, reliability, and scalability while staying adaptable to evolving streaming technologies and user demands.