Fix Kubernetes Services Timing Out – Multiple Worker Nodes

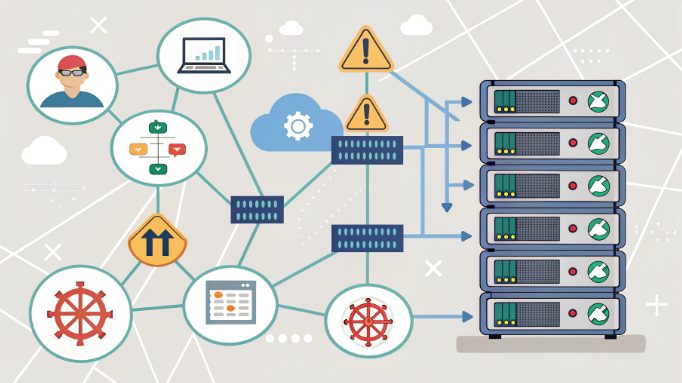

You may notice Kubernetes services timing out across worker nodes, particularly in Japan server clusters where geographic distribution makes network latency more pronounced. The usual causes are networking issues, kernel SNAT problems, or service discovery failures. Services often drop or slow connections when a cluster has DNS issues, misconfigured networking, or resource limits, and these challenges are especially common when connecting to Japan server infrastructure from other regions. The table below lists the most common root causes seen in Japan server environments and global Kubernetes deployments:

| Root Cause | Description |
|---|---|
| DNS Issues | Problems with DNS resolution can lead to service timeouts in Kubernetes. |
| Networking Issues | Network misconfigurations or failures can cause delays and timeouts. |
| Resource Allocation | Insufficient resources allocated to pods can lead to performance issues. |

Kubernetes services may also fail during upgrades if a webhook becomes unresponsive, causing connection errors. When you see intermittent time-outs or a node that is not ready, check for these issues first. Quick troubleshooting restores Kubernetes services and keeps connection problems from spreading.

Key Takeaways

Kubernetes services can time out due to networking issues, DNS problems, or insufficient resources. Identifying these root causes is crucial for fixing the problem.

Check the health of nodes and pods regularly. Use commands like ‘kubectl get pods’ to monitor their status and ensure they are ready to handle traffic.

Review network policies and firewall rules to ensure they allow necessary traffic. Misconfigurations can block communication between nodes and cause timeouts.

Adjust idle timeouts and port limits to manage connections effectively. This helps prevent service disruptions and improves overall performance.

Use monitoring tools like Prometheus and Grafana to track network metrics. Regular health checks can help you spot issues before they escalate.

Identify Intermittent Time-Out Symptoms

Error Logs in Kubernetes Services

You can spot intermittent time-outs in Kubernetes services by checking error logs and monitoring connection attempts. Many users see API requests timing out when interacting with the Kubernetes API server. You may notice errors in application logs or failed commands. Sometimes, you experience time-outs when accessing applications, which can point to performance issues with cluster components.

Look for these common signs:

API requests timing out

Intermittent time-outs when accessing services

Performance issues with cluster components

When you analyze error logs, you often find that TCP connections fail to establish between kubelet and pods. You might see that kubelet sends the TCP SYN, but the expected SYN-ACK never arrives. This usually means there is a network problem. Connections stuck in the SYN-SENT state confirm that the handshake never completes. If the source ports in use fall inside the range reserved for Kubernetes NodePorts, this misconfiguration can cause health check failures.
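One way to check for the source-port conflict described above is to compare a node's ephemeral port range with the NodePort range. The sketch below assumes the default NodePort range of 30000-32767 (a cluster can change it with the kube-apiserver flag `--service-node-port-range`) and a Linux node that exposes its ephemeral range in `/proc/sys/net/ipv4/ip_local_port_range`. The function names are illustrative, not part of any Kubernetes tool.

```python
from pathlib import Path

# Assumed default NodePort range; verify it against your cluster's
# kube-apiserver --service-node-port-range setting.
DEFAULT_NODEPORT_RANGE = (30000, 32767)


def parse_port_range(text):
    """Parse 'low high' (the format of ip_local_port_range) into a tuple."""
    low, high = text.split()
    return int(low), int(high)


def overlap(a, b):
    """Return the overlapping (low, high) of two inclusive ranges, or None."""
    low, high = max(a[0], b[0]), min(a[1], b[1])
    return (low, high) if low <= high else None


def read_ephemeral_range(path="/proc/sys/net/ipv4/ip_local_port_range"):
    """Read the node's ephemeral source-port range (Linux only)."""
    return parse_port_range(Path(path).read_text())
```

On a node, `overlap(read_ephemeral_range(), DEFAULT_NODEPORT_RANGE)` returns `None` when the two ranges are disjoint; any other result is the span of ports that can be claimed both as a source port and as a NodePort.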

Here is a table of log patterns and error codes that indicate cross-node connectivity problems:

| Indicator Type | Description |
|---|---|
| Connection failures | Connection attempt was unsuccessful |
| Timeouts | Connection attempt took too long |
| Unusual syscall sequences | Abnormal behavior in system calls related to networking |
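To tell the first two indicators apart in practice, it can help to probe a pod or service address directly and classify the result. This is a minimal sketch using only the Python standard library. The function name `probe_tcp` and the 2-second default timeout are assumptions made for illustration.

```python
import socket


def probe_tcp(host, port, timeout=2.0):
    """Try one TCP connection and classify the outcome.

    Returns "ok", "timeout" (no answer to the SYN within `timeout`),
    "refused" (the peer actively rejected the connection), or
    "error: ..." for any other socket error.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return "ok"
    except socket.timeout:
        return "timeout"
    except ConnectionRefusedError:
        return "refused"
    except OSError as exc:
        return f"error: {exc}"
```

A "timeout" result from one node combined with "ok" from another usually points at the network path between nodes, while "refused" suggests the address is reachable but nothing is listening on that port.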

Node Not Ready and Pod Connectivity

Pay close attention to node status. When a node is not ready, the scheduler will not place new pods on it, and pods already running there may be unable to accept traffic or perform their intended function. Both effects reduce pod connectivity and service availability, so a node that is not ready is one of the first things to rule out when services time out.
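A quick way to list nodes that are not ready is to read the Ready condition from the JSON that `kubectl get nodes -o json` returns. The sketch below assumes kubectl is installed and configured for the cluster. The helper names are illustrative.

```python
import json
import subprocess


def not_ready_nodes(node_list):
    """Return names of nodes whose Ready condition is not "True".

    `node_list` is the parsed output of `kubectl get nodes -o json`.
    """
    names = []
    for node in node_list.get("items", []):
        conditions = node.get("status", {}).get("conditions", [])
        ready = any(
            c.get("type") == "Ready" and c.get("status") == "True"
            for c in conditions
        )
        if not ready:
            names.append(node["metadata"]["name"])
    return names


def fetch_nodes():
    """Fetch the node list; requires kubectl and a working kubeconfig."""
    out = subprocess.run(
        ["kubectl", "get", "nodes", "-o", "json"],
        check=True, capture_output=True, text=True,
    ).stdout
    return json.loads(out)
```

Calling `not_ready_nodes(fetch_nodes())` against a live cluster gives the nodes to inspect further. A node whose Ready status is "Unknown" is reported as well, since it cannot be assumed healthy.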

Namespace and Service Discovery Issues

Namespace and service discovery problems often lead to service timeouts. You should check for typos in the Service name or incorrect namespace. DNS issues can also cause trouble. Sometimes, there are no backing pods, or the targetPort is incorrect. Network restrictions may block traffic. Environment variables might not be populated, or load balancing could be set up incorrectly.

Common namespace and service discovery issues include:

Typos in Service name

Incorrect namespace

DNS issues

No backing pods

Incorrect targetPort

Network restrictions

Environment variables not populated

Incorrect load balancing

If you recognize these symptoms, you can quickly narrow down the root cause and restore service availability.
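Several of the issues above can be checked mechanically from the JSON of a Service and the pods in its namespace. The following sketch assumes the default cluster domain `cluster.local` and input shaped like the output of `kubectl get service <name> -o json` and `kubectl get pods -o json`. All function names are hypothetical. Note that a container can listen on a port it does not declare, so a failed `target_port_exposed` check is a hint, not proof.

```python
def service_dns_name(service, namespace, cluster_domain="cluster.local"):
    """Build the fully qualified in-cluster DNS name of a Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def backing_pods(service, pods):
    """Return pods whose labels contain every key/value in the selector."""
    selector = service.get("spec", {}).get("selector") or {}
    if not selector:
        return []  # a Service without a selector matches no pods here
    return [
        p for p in pods
        if selector.items() <= (p.get("metadata", {}).get("labels") or {}).items()
    ]


def target_port_exposed(target_port, pod):
    """True if a container in the pod declares the port by number or name."""
    for container in pod.get("spec", {}).get("containers", []):
        for port in container.get("ports", []):
            if target_port in (port.get("containerPort"), port.get("name")):
                return True
    return False
```

An empty result from `backing_pods` corresponds to the "no backing pods" case, and resolving the name from `service_dns_name` inside a pod helps separate typos and namespace mistakes from genuine DNS failures.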

Troubleshooting Kubernetes Services Across Nodes

When you face service timeouts in a Kubernetes cluster, you need a clear troubleshooting process. You can resolve most connectivity issues by following these steps. Each step helps you identify the root cause and restore service availability across Kubernetes nodes.

Pod and Node Health Checks

Start troubleshooting by checking the health of the pods and nodes. Make sure that kubelet and kube-proxy are running on every Kubernetes node. If a node is not ready, the scheduler will not place pods on it, and if kube-proxy is down on a node, Service traffic is not routed there. Monitor the application and use probes to confirm that it is running and accepting traffic.

Node Status Checks: Watch for OutOfDisk, Ready, MemoryPressure, PIDPressure, DiskPressure, and NetworkUnavailable conditions.

Compare desired and current pods using kube_deployment_spec_replicas and kube_deployment_status_replicas.

Track available and unavailable pods to spot readiness probe failures.

Use liveness probes to check if the application is running.

Use readiness probes to verify if the application can accept traffic.

Use startup probes to confirm containers have initialized.
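The desired-versus-current comparison in the list above can also be done without a metrics stack by reading deployment objects directly. This sketch assumes input shaped like the output of `kubectl get deployments -o json`. It uses the `spec.replicas` and `status.availableReplicas` fields rather than the kube-state-metrics series named above, and the function name is illustrative.

```python
def replica_gaps(deployment_list):
    """Map deployment name to (desired, available) where pods are missing.

    `status.availableReplicas` is omitted by the API when it is zero,
    so a missing value is treated as 0. `spec.replicas` defaults to 1.
    """
    gaps = {}
    for dep in deployment_list.get("items", []):
        desired = dep.get("spec", {}).get("replicas", 1)
        available = dep.get("status", {}).get("availableReplicas", 0)
        if available < desired:
            gaps[dep["metadata"]["name"]] = (desired, available)
    return gaps
```

A deployment that stays in this result after a rollout has finished is a good candidate for a closer look at its readiness probe.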

You can use these Kubernetes commands to troubleshoot node and pod health:

| Command | Purpose |
|---|---|
| kubectl get pods | Shows pod STATUS and RESTARTS, indicating recurring failures. |
| kubectl describe pod | |
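To spot recurring failures across many pods at once, the restart counts can be summed from the JSON form of the pod list. This is a sketch that assumes input shaped like the output of `kubectl get pods -o json`. The function name is illustrative. It reports the pod phase, which is coarser than the STATUS column kubectl prints (for example, a crash-looping pod still has phase Running).

```python
def restart_report(pod_list, min_restarts=1):
    """Return (pod name, phase, total restarts), most restarts first."""
    rows = []
    for pod in pod_list.get("items", []):
        status = pod.get("status", {})
        restarts = sum(
            s.get("restartCount", 0)
            for s in status.get("containerStatuses", [])
        )
        if restarts >= min_restarts:
            rows.append((pod["metadata"]["name"], status.get("phase"), restarts))
    return sorted(rows, key=lambda row: row[2], reverse=True)
```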

Check the health and state of your pods before moving to the next troubleshooting steps.

Network Policy and Firewall Review

Network policies and firewall rules often cause service timeouts between Kubernetes nodes. You need to review your network configuration and security settings. Misconfigured route tables, security lists, or gateways can block kubelet and kube-proxy traffic between nodes. If you use both an internet gateway and a service gateway for the same target, traffic may be misrouted.

Follow these troubleshooting steps to review firewall rules:

You should also confirm that kubelet and kube-proxy traffic flows across all Kubernetes nodes. This helps you maintain network connectivity and avoid networking issues.

Service and Endpoint Configuration

Service and endpoint misconfigurations can lead to timeouts and connectivity issues. Check the Service associated with each deployment and make sure its endpoints are correct. If kube-proxy cannot find the right endpoints, traffic will not reach your application as expected.

Use kubectl commands such as kubectl get service and kubectl get endpoints to troubleshoot service and endpoint configuration.
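When checking whether a Service has usable endpoints, it helps to separate ready addresses from not-ready ones. The sketch below assumes input shaped like the output of `kubectl get endpoints <service> -o json`. The function name is illustrative.

```python
def endpoint_summary(endpoints):
    """Split a Service's endpoint IPs into ready and not-ready lists.

    An Endpoints object with no `subsets` means no pod currently backs
    the Service, so both lists come back empty.
    """
    ready, not_ready = [], []
    for subset in endpoints.get("subsets") or []:
        ready += [a["ip"] for a in subset.get("addresses", [])]
        not_ready += [a["ip"] for a in subset.get("notReadyAddresses", [])]
    return {"ready": ready, "not_ready": not_ready}
```

An empty "ready" list with entries under "not_ready" points at failing readiness probes, while two empty lists usually mean the selector matches no pods.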