AI-Driven Anomaly Detection Deployment on US Servers

The Imperative of AI Anomaly Detection in Digital Ecosystems

As cyber threats evolve, from advanced persistent threats to sophisticated data breaches, traditional rule-based systems struggle to keep pace. Static rules and signatures cannot adapt to changing attack patterns, which leaves organizations exposed to zero-day exploits. AI-driven anomaly detection addresses this gap by using machine learning to model normal behavior and flag deviations from it in real time. The approach is not limited to reacting to known threats: by analyzing subtle patterns that evade conventional systems, it can surface early indicators of an attack. For organizations operating in the US, deploying such systems on US-based servers offers advantages in latency, compliance, and compute capacity, making it a strategic choice for tech-driven security strategies.

Technical Foundations: AI Anomaly Detection Principles and Architecture

At the core of AI-driven anomaly detection lie two primary learning paradigms:

- Supervised Learning: Requires labeled data to train models to recognize known anomalies, effective for the classification of previously identified threats.

- Unsupervised Learning: Excels in detecting novel anomalies by learning normal behavior patterns without prior labeling, ideal for zero-day threat identification.

Key algorithmic models driving these systems include:

- Isolation Forest: A tree-based method that isolates points with random splits. Anomalies are few and different, so they need fewer splits to isolate and end up with shorter average path lengths in the trees.

- Autoencoders: Neural networks trained to reconstruct normal data, flagging deviations that cannot be accurately reproduced as anomalies.

- Temporal Models (e.g., LSTM): Essential for time-series data, these models learn sequential patterns to detect anomalies in streaming data flows.
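To make the Isolation Forest idea concrete, here is a minimal sketch in pure Python. It is not a production implementation and not the API of any particular library; the function names (`build_tree`, `anomaly_scores`), the synthetic two-feature data, and the parameter defaults are all assumptions chosen for illustration. It shows the core mechanism: points that are isolated after fewer random splits receive scores closer to 1.

```python
import math
import random

EULER_GAMMA = 0.5772156649


def average_path_length(n):
    """Expected path length of an unsuccessful binary-search-tree lookup over n points."""
    if n <= 1:
        return 0.0
    if n == 2:
        return 1.0
    return 2.0 * (math.log(n - 1) + EULER_GAMMA) - 2.0 * (n - 1) / n


def build_tree(points, depth, max_depth, rng):
    """Recursively split points on a random feature at a random cut value."""
    if depth >= max_depth or len(points) <= 1:
        return {"size": len(points)}
    dim = rng.randrange(len(points[0]))
    lo = min(p[dim] for p in points)
    hi = max(p[dim] for p in points)
    if lo == hi:  # nothing left to split on this feature
        return {"size": len(points)}
    cut = rng.uniform(lo, hi)
    return {
        "dim": dim,
        "cut": cut,
        "left": build_tree([p for p in points if p[dim] < cut], depth + 1, max_depth, rng),
        "right": build_tree([p for p in points if p[dim] >= cut], depth + 1, max_depth, rng),
    }


def path_length(tree, point, depth=0):
    """Depth at which the point lands, adjusted for unsplit points left in the leaf."""
    if "size" in tree:
        return depth + average_path_length(tree["size"])
    branch = "left" if point[tree["dim"]] < tree["cut"] else "right"
    return path_length(tree[branch], point, depth + 1)


def anomaly_scores(data, queries, n_trees=100, sample_size=64, seed=0):
    """Return one score in (0, 1) per query; higher means more anomalous."""
    if len(data) < 2:
        raise ValueError("need at least two training points")
    rng = random.Random(seed)
    sample_size = min(sample_size, len(data))
    max_depth = math.ceil(math.log2(sample_size))
    trees = [build_tree(rng.sample(data, sample_size), 0, max_depth, rng)
             for _ in range(n_trees)]
    norm = average_path_length(sample_size)
    return [2.0 ** (-sum(path_length(t, q) for t in trees) / n_trees / norm)
            for q in queries]


# Synthetic "normal" behavior: two standardized features (hypothetical data).
data_rng = random.Random(42)
normal_traffic = [(data_rng.gauss(0, 1), data_rng.gauss(0, 1)) for _ in range(256)]
typical_point, far_point = (0.1, -0.2), (8.0, 8.0)
scores = anomaly_scores(normal_traffic, [typical_point, far_point])
print(scores)  # the far point should score higher than the typical one
```

In practice a maintained library implementation would normally be used; the sketch only illustrates why short paths signal anomalies.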

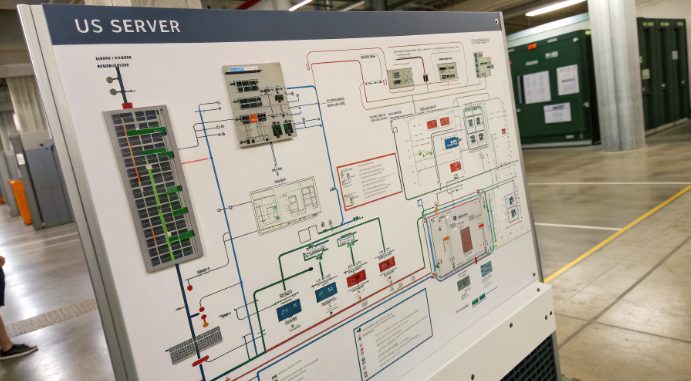

The architectural blueprint of an AI anomaly detection system typically consists of three layers:

- Data Ingestion Layer: Responsible for collecting real-time logs, network traffic, and user behavior data, with built-in mechanisms for data normalization.

- Model Training Layer: A compute-intensive layer where models are trained, validated, and optimized, often leveraging distributed frameworks for scalability.

- Detection Engine: The real-time component that applies trained models to incoming data, generating alerts based on predefined or dynamically adjusted thresholds.
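The three layers above can be sketched end to end in a few lines. This is a simplified illustration under stated assumptions, not a reference architecture: the record field `latency_ms`, the class `EwmaBaseline`, and the `warmup` and `k` parameters are hypothetical, and an exponentially weighted mean and variance stands in for a trained model. The detection step applies a dynamically adjusted threshold of `k` standard deviations around the running baseline.

```python
import math
from dataclasses import dataclass


def normalize(record):
    """Ingestion layer: turn a raw record into a numeric feature."""
    return float(record["latency_ms"])


@dataclass
class EwmaBaseline:
    """Stand-in for the model layer: exponentially weighted mean and variance."""
    alpha: float = 0.1
    mean: float = 0.0
    var: float = 0.0
    seen: int = 0

    def update(self, x):
        if self.seen == 0:
            self.mean = x
        else:
            delta = x - self.mean
            self.mean += self.alpha * delta
            self.var = (1 - self.alpha) * (self.var + self.alpha * delta * delta)
        self.seen += 1

    def zscore(self, x):
        std = math.sqrt(self.var)
        return 0.0 if std == 0 else (x - self.mean) / std


def detect(records, warmup=10, k=4.0):
    """Detection engine: flag values more than k standard deviations from the baseline."""
    model = EwmaBaseline()
    alerts = []
    for index, record in enumerate(records):
        x = normalize(record)
        if model.seen >= warmup and abs(model.zscore(x)) > k:
            alerts.append((index, x))
            continue  # keep the anomaly from shifting the baseline
        model.update(x)
    return alerts


stream = [{"latency_ms": v} for v in
          [20, 21, 19, 22, 20, 21, 19, 20, 22, 21, 20, 19, 250, 21, 20]]
print(detect(stream))  # → [(12, 250.0)]
```

Skipping the baseline update for flagged values is a design choice: it prevents a burst of anomalies from widening the threshold, at the cost of adapting more slowly to a genuine shift in normal behavior.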

US Server Advantages: Hardware, Network, and Compliance Edge

Deploying AI anomaly detection systems on US-based servers offers distinct technical and operational benefits:

Hardware and Network Infrastructure

- High-availability data centers equipped with redundant power, cooling, and network fabrics, ensuring minimal downtime for critical AI workloads.

- Proximity to major internet exchange points keeps network latency low for US-based users and data sources, which is crucial for real-time anomaly detection in time-sensitive applications.

- Robust GPU and CPU configurations optimized for parallel processing, accelerating model inference and reducing detection latency.

Compliance and Regulatory Alignment

US-based infrastructure simplifies compliance with regional regulations:

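- Keeping regulated data within US jurisdiction, which simplifies meeting the safeguard requirements of sector rules such as GLBA (finance) and HIPAA (healthcare) as well as any contractual data residency commitments. Neither statute mandates US-only storage by itself, but domestic hosting avoids cross-border transfer questions and reduces legal risk.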

- Integration with security frameworks that align with US cybersecurity standards (for example, the NIST Cybersecurity Framework), enhancing overall system trustworthiness.

- Streamlined audits and compliance reporting, because the data, the infrastructure, and the relevant regulators all fall under the same jurisdiction.

Deployment Workflow: From Planning to Production

Pre-Deployment Planning

Effective deployment begins with thorough requirements analysis:

- Identify use cases: server security, user behavior analytics, IoT device monitoring, or network traffic analysis.

- Define success metrics: false positive rate, detection latency, and accuracy benchmarks such as precision and recall.

- Specify servers: CPU/GPU selection based on model complexity, storage requirements (SSD/NVMe for low-latency data access), and network bandwidth planning.
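The success metrics listed above can be computed from a labeled evaluation set and a sample of measured latencies. The sketch below is one straightforward way to do so; the function names and the example numbers are made up for illustration, and the nearest-rank method is only one of several percentile definitions.

```python
import math


def detection_metrics(y_true, y_pred):
    """Confusion-matrix metrics for binary labels (1 = anomaly, 0 = normal)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t and p)
    fp = sum(1 for t, p in pairs if not t and p)
    fn = sum(1 for t, p in pairs if t and not p)
    tn = sum(1 for t, p in pairs if not t and not p)

    def ratio(numerator, denominator):
        return numerator / denominator if denominator else 0.0

    return {
        "false_positive_rate": ratio(fp, fp + tn),
        "recall": ratio(tp, tp + fn),
        "precision": ratio(tp, tp + fp),
    }


def percentile_nearest_rank(values, pct):
    """Nearest-rank percentile, e.g. pct=95 for p95 detection latency."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


# Hypothetical evaluation data.
labels      = [0, 0, 0, 0, 1, 1, 0, 0, 1, 0]
predictions = [0, 1, 0, 0, 1, 0, 0, 0, 1, 0]
latencies_ms = [12, 15, 11, 14, 90, 13, 12, 16, 15, 14]

print(detection_metrics(labels, predictions))
print(percentile_nearest_rank(latencies_ms, 95))  # → 90
```

Because anomalies are rare, false positive rate, precision, and recall are usually more informative than raw accuracy, which a model can inflate by never alerting.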

Technical Implementation Stages

- Environment Provisioning

  - OS optimization: Tailoring Linux distributions or Windows Server for AI workloads, including kernel parameter tuning.

  - Containerization: Deploying services via Docker with Kubernetes orchestration for scalability and service resilience.

- Data Preprocessing Pipeline

  - Data ingestion: Implementing real-time streaming frameworks to collect logs and metrics.

  - Feature engineering: Extracting meaningful attributes from raw data to form a robust feature set for modeling.

  - Data de-identification: Ensuring compliance with data privacy regulations through anonymization or pseudonymization techniques.

- Model Training and Optimization

  - Distributed training: Leveraging parallel computing to accelerate model convergence on large datasets.

  - Hyperparameter tuning: Optimizing model configurations to balance detection accuracy and computational efficiency.

  - Anomaly thresholding: Implementing dynamic thresholding based on business context and risk tolerance.

- Deployment and Monitoring

  - A/B testing: Comparing model performance across different US server regions to identify optimal deployment zones.

  - Real-time monitoring: Implementing observability tools to track model performance, resource utilization, and detection efficacy.

  - Alerting integration: Configuring multi-channel alerting systems for timely anomaly notification.
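The data preprocessing stage above (ingestion, feature engineering, and removal of direct identifiers) can be sketched as follows. Everything specific here is an assumption for illustration: the log line format, the field names, the 60-second window, and the hard-coded `SECRET_KEY`, which in a real deployment would come from a secrets manager. Keyed hashing with HMAC is pseudonymization rather than full anonymization, so whether it satisfies a given privacy requirement needs separate review.

```python
import hashlib
import hmac
from collections import defaultdict
from datetime import datetime, timezone

SECRET_KEY = b"replace-with-a-managed-secret"  # assumption: loaded from a secrets manager


def pseudonymize(value, key=SECRET_KEY):
    """Replace an identifier with a keyed hash so raw user names never reach the model."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def parse_line(line):
    """Parse a hypothetical 'TIMESTAMP key=value ...' log line into a typed event."""
    timestamp, *pairs = line.split()
    fields = dict(pair.split("=", 1) for pair in pairs)
    parsed_ts = datetime.strptime(timestamp, "%Y-%m-%dT%H:%M:%SZ").replace(tzinfo=timezone.utc)
    return {
        "ts": parsed_ts,
        "user": pseudonymize(fields["user"]),
        "status": int(fields["status"]),
        "bytes": int(fields["bytes"]),
    }


def window_features(events, window_seconds=60):
    """Aggregate events into per-user, per-window counts usable as model features."""
    buckets = defaultdict(lambda: {"requests": 0, "failed_logins": 0, "bytes": 0})
    for event in events:
        window_start = int(event["ts"].timestamp()) // window_seconds * window_seconds
        bucket = buckets[(event["user"], window_start)]
        bucket["requests"] += 1
        bucket["failed_logins"] += 1 if event["status"] == 401 else 0
        bucket["bytes"] += event["bytes"]
    return dict(buckets)


raw_lines = [
    "2024-05-01T12:00:03Z user=alice status=200 bytes=512",
    "2024-05-01T12:00:10Z user=alice status=401 bytes=128",
    "2024-05-01T12:00:40Z user=alice status=401 bytes=128",
    "2024-05-01T12:01:05Z user=bob status=200 bytes=2048",
]
features = window_features(parse_line(line) for line in raw_lines)
for key, values in features.items():
    print(key, values)
```

The resulting per-window counts are the kind of feature vectors that the models described earlier would consume.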

Overcoming Deployment Challenges

Implementing AI anomaly detection on US servers isn’t without hurdles. Here are common challenges and their mitigations:

- Data Sparsity

  Limited training data can hinder model accuracy. Remedy: employ transfer learning, starting from models pre-trained on generic datasets and fine-tuning them with domain-specific data.

- Computational Cost

  AI workloads can be resource-intensive. Strategy: optimize resource allocation using scalable cloud-based infrastructure, and use spot instances for non-critical, interruptible training tasks.

- Cross-Region Consistency

  Detection behavior can drift when models run on servers distributed across regions. Approach: implement standardized data pipelines and model versions, coupled with consistent monitoring across all regions.
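One lightweight way to check cross-region consistency is to fingerprint what each region actually serves and compare the results. The sketch below assumes each deployment can be described as a JSON-serializable dictionary; the region names, the artifact fields, and the `find_drift` helper are hypothetical.

```python
import hashlib
import json


def fingerprint(artifact):
    """Stable SHA-256 over a canonical JSON encoding of the deployed configuration."""
    canonical = json.dumps(artifact, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def find_drift(deployments, reference_region):
    """Return the regions whose deployed artifact differs from the reference region."""
    expected = fingerprint(deployments[reference_region])
    return sorted(region for region, artifact in deployments.items()
                  if fingerprint(artifact) != expected)


# Hypothetical deployment descriptors reported by each region.
deployments = {
    "us-east": {"model_version": "1.4.2", "threshold": 0.60, "features": ["requests", "bytes"]},
    "us-central": {"features": ["requests", "bytes"], "threshold": 0.60, "model_version": "1.4.2"},
    "us-west": {"model_version": "1.4.2", "threshold": 0.62, "features": ["requests", "bytes"]},
}
print(find_drift(deployments, "us-east"))  # → ['us-west']
```

Sorting keys before hashing means that field order does not trigger false drift reports, while any change to a version, threshold, or feature list does.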

Future Directions: AI and US Server Integration

The convergence of AI anomaly detection and US server infrastructure is evolving toward:

- Edge-AI Integration: With the expansion of 5G in the US, integrating edge computing nodes for low-latency anomaly detection at the network perimeter.

- Federated Learning: Enabling collaborative model training across distributed US-based servers without data centralization, enhancing privacy and compliance.

- Quantum-Ready Architectures: Preparing AI systems on US servers for future quantum computing advancements, for example by planning a migration to quantum-resistant cryptography for the data and model artifacts they handle.
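The federated learning direction listed above can be illustrated with its aggregation step. In this simplified sketch each site computes a local summary (here, per-feature means as a baseline of normal behavior) and shares only that summary and its sample count; a coordinator combines them with a sample-weighted average. The site data and function names are assumptions, and a full federated averaging setup would exchange trained model parameters over many rounds rather than simple means.

```python
def local_update(rows):
    """Run on each server: summarize local data without exporting the rows themselves."""
    n = len(rows)
    dims = len(rows[0])
    means = [sum(row[i] for row in rows) / n for i in range(dims)]
    return means, n


def federated_average(updates):
    """Coordinator step: average the sites' parameters, weighted by sample count."""
    total = sum(n for _, n in updates)
    dims = len(updates[0][0])
    return [sum(params[i] * n for params, n in updates) / total for i in range(dims)]


# Hypothetical per-site feature rows that never leave their servers.
site_a = [[1.0, 10.0], [3.0, 14.0]]
site_b = [[5.0, 20.0], [7.0, 22.0], [9.0, 24.0], [11.0, 26.0]]

global_baseline = federated_average([local_update(site_a), local_update(site_b)])
print(global_baseline)
```

For this particular summary, the weighted average equals the mean that would have been computed over the pooled data, which is what makes it a convenient sanity check.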

Conclusion: Translating Technical Deployment into Business Value

Deploying AI-driven anomaly detection systems on US servers is not just a technical decision but a strategic one. It empowers organizations to leverage local infrastructure for real-time threat detection, compliance alignment, and operational efficiency. As cyber threats continue to evolve, the ability to deploy, optimize, and scale AI models on robust US-based infrastructure will be critical for maintaining a strong security posture. By focusing on architectural flexibility, data integrity, and continuous model refinement, tech professionals can turn anomaly detection from a reactive measure into a proactive defense mechanism, driving tangible business value through enhanced security and operational resilience.