How to Employ Japan Servers for Cross-Border Live Streaming

Introduction: The Technical Imperative of Japanese Servers

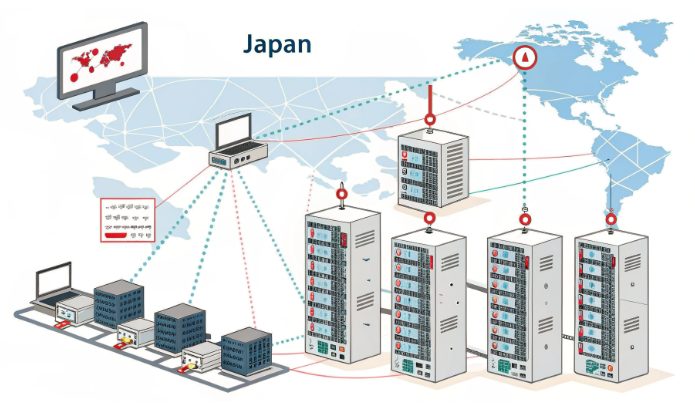

For engineers running cross-border live streams, servers in Japan offer concrete network advantages. Their proximity to major Asian markets shortens round-trip times to viewers there, and hosting data locally can simplify compliance with Japanese data-protection rules. This deep dive explores how to architect, deploy, and optimize server setups for high-concurrency cross-border streaming, focusing on technical principles rather than vendor-specific configurations.

Server Infrastructure Strategy: Aligning Architecture with Scale

Effective server selection begins with understanding traffic patterns and operational requirements. Here’s a framework for evaluating infrastructure types:

Cloud Hosting for Agile Operations

- Ideal for startups needing rapid deployment and resource elasticity

- Containerization support enables seamless scaling of streaming microservices

- Pay-as-you-go models reduce upfront investment for testing new markets
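Before choosing between elastic cloud capacity and dedicated hardware, it helps to estimate how much egress an audience actually needs. The sketch below assumes the servers deliver streams directly to viewers (no CDN offload) and that each instance should run at no more than a chosen fraction of its egress capacity; `estimate_instances` and the example numbers are hypothetical.

```python
import math


def estimate_instances(concurrent_viewers: int,
                       bitrate_mbps: float,
                       per_instance_egress_mbps: float,
                       headroom: float = 0.7) -> int:
    """Estimate how many streaming instances an audience needs.

    `headroom` is the fraction of each instance's egress we plan to use,
    leaving spare capacity for traffic spikes.
    """
    if not 0 < headroom <= 1:
        raise ValueError("headroom must be in (0, 1]")
    total_mbps = concurrent_viewers * bitrate_mbps          # aggregate egress demand
    usable_per_instance = per_instance_egress_mbps * headroom
    return max(1, math.ceil(total_mbps / usable_per_instance))


# Hypothetical inputs: 5,000 viewers at 3 Mbps on instances with 10 Gbps egress.
print(estimate_instances(5_000, 3.0, 10_000))
```

With a CDN in front, the origin only serves cache misses, so this figure becomes an upper bound for the origin tier rather than a target.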

Colocation Servers for Enterprise-Grade Performance

- Dedicated hardware for brands handling large concurrent audiences or daily streaming

- Redundant systems minimize downtime during peak broadcast windows

- Customizable configurations to meet specific performance benchmarks
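A rough way to see why redundant systems reduce downtime is to compute the availability of servers running in parallel. This sketch assumes failures are independent, which real deployments only approximate (shared power, network, or software bugs correlate failures), so treat the result as an optimistic bound; the 99% per-server figure is hypothetical.

```python
def parallel_availability(unit_availability: float, units: int) -> float:
    """Availability of a service that is up while at least one of `units`
    identical servers is up, assuming failures are independent."""
    if not 0.0 <= unit_availability <= 1.0 or units < 1:
        raise ValueError("availability must be in [0, 1] and units >= 1")
    # The service is down only when every unit is down at the same time.
    return 1 - (1 - unit_availability) ** units


def downtime_minutes_per_year(availability: float) -> float:
    """Convert an availability fraction into expected minutes of downtime per year."""
    return (1 - availability) * 365 * 24 * 60


for n in (1, 2):
    a = parallel_availability(0.99, n)  # hypothetical 99% per-server availability
    print(f"{n} server(s): {a:.4%} available, ~{downtime_minutes_per_year(a):.0f} min/year down")
```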

Network and System-Level Optimizations

Strategic configuration is essential to unlock a server’s full streaming potential.

Network Stack Enhancement

- Implement edge caching via regional CDN nodes to reduce origin server load

- Deploy TLS certificates to encrypt traffic in transit (HTTPS), improving security and SEO

- Tune TCP parameters (connection backlog, buffer sizes, keep-alive) for high concurrency and low latency
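Edge caching works best when the origin tells CDN nodes how long each object may be cached. For HLS, live playlists change with every new segment while media segments never change once written. The sketch below encodes that split; the half-segment playlist TTL and the `cache_control_for` helper are assumptions to adjust for your CDN.

```python
def cache_control_for(path: str, segment_duration_s: int = 6) -> str:
    """Pick a Cache-Control header for an HLS object served through CDN edges."""
    lower = path.lower()
    if lower.endswith(".m3u8"):
        # Live playlists update every segment: cache only briefly so viewers
        # see new segments promptly (assumed TTL: half a segment duration).
        return f"public, max-age={max(1, segment_duration_s // 2)}"
    if lower.endswith((".ts", ".m4s", ".mp4")):
        # Segments and init files are never rewritten, so edges can keep them long.
        return "public, max-age=86400, immutable"
    # Anything else (APIs, stats) is not cached at the edge in this sketch.
    return "no-store"
```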

Server OS and Software Tuning

- Minimal Linux server installations keep resource overhead low for streaming workloads

- Web server configurations to enhance concurrent connection handling

- Rate limiting mechanisms to prevent bandwidth exhaustion from traffic spikes
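A common way to implement the rate limiting described above is a token bucket, which permits short bursts while capping the sustained rate. This is a minimal in-process sketch; keying buckets by client IP and the 10 requests/s limit are hypothetical choices, and a multi-server deployment would usually keep this state in a shared store or enforce it at the proxy.

```python
import time


class TokenBucket:
    """Allows bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: float, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity
        self.clock = clock
        self.last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


# Hypothetical usage: one bucket per client IP, 10 requests/s with bursts of 20.
buckets: dict[str, TokenBucket] = {}


def handle_request(client_ip: str) -> int:
    bucket = buckets.get(client_ip)
    if bucket is None:
        bucket = buckets[client_ip] = TokenBucket(rate=10, capacity=20)
    return 200 if bucket.allow() else 429  # 429 = Too Many Requests
```

Passing `cost` as a byte count instead of 1 turns the same structure into a bandwidth limiter.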

Building the Live Streaming Architecture

Architecting a robust pipeline requires mastering server-side configurations and streaming protocols.

Self-Hosted Streaming Solutions

- Install media servers supporting RTMP/RTSP/HLS protocols for versatile streaming

- Encoder settings (bitrate, keyframe interval, preset) tuned for the best quality-to-bandwidth ratio

- Reverse proxy setups with WebSocket support for low-latency chat integration
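One common building block in a self-hosted pipeline is repackaging an RTMP ingest into HLS with FFmpeg. The sketch below only builds the argument list; it assumes FFmpeg with libx264 is installed, and the input URL, output directory, and bitrates are hypothetical. A dedicated media server may perform the same step internally.

```python
def build_hls_command(input_url: str, out_dir: str,
                      video_kbps: int = 3000, fps: int = 30,
                      segment_s: int = 4) -> list[str]:
    """Build an ffmpeg argv that ingests a live stream and writes a rolling HLS playlist."""
    gop = fps * segment_s  # one keyframe per segment so every segment starts cleanly
    return [
        "ffmpeg", "-i", input_url,
        # Video: constrained-bitrate H.264 tuned for low encoding delay.
        "-c:v", "libx264", "-preset", "veryfast", "-tune", "zerolatency",
        "-b:v", f"{video_kbps}k", "-maxrate", f"{video_kbps}k",
        "-bufsize", f"{video_kbps * 2}k",
        "-g", str(gop), "-keyint_min", str(gop), "-sc_threshold", "0",
        # Audio: AAC at a fixed bitrate.
        "-c:a", "aac", "-b:a", "128k",
        # HLS output: short segments, small sliding window, old segments deleted.
        "-f", "hls", "-hls_time", str(segment_s),
        "-hls_list_size", "6", "-hls_flags", "delete_segments",
        f"{out_dir}/index.m3u8",
    ]
```

With FFmpeg available, `subprocess.run(build_hls_command("rtmp://127.0.0.1/live/demo", "/var/www/hls"), check=True)` would start the job; fixing the keyframe interval to the segment length is what lets players switch renditions at segment boundaries.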

Third-Party Platform Integration

- Secure tunneling for pushing streams to regional platforms while maintaining control

- Load balancing between endpoints to avoid platform-specific downtime

- Server-side transcoding for adaptive bitrate streams across network conditions
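After transcoding into several renditions, players discover them through an HLS master playlist that lists each variant's bandwidth and resolution. This sketch assumes each rendition lives at `<name>/index.m3u8`; the ladder values are hypothetical, and the `CODECS` attribute, which the HLS specification recommends, is omitted for brevity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    name: str
    width: int
    height: int
    bandwidth_bps: int  # peak bitrate advertised to players


def master_playlist(renditions: list[Rendition]) -> str:
    """Render an HLS master playlist so players can switch between renditions."""
    lines = ["#EXTM3U"]
    for r in sorted(renditions, key=lambda x: x.bandwidth_bps):
        lines.append(
            f"#EXT-X-STREAM-INF:BANDWIDTH={r.bandwidth_bps},"
            f"RESOLUTION={r.width}x{r.height}"
        )
        lines.append(f"{r.name}/index.m3u8")  # assumed per-rendition path layout
    return "\n".join(lines) + "\n"


print(master_playlist([
    Rendition("1080p", 1920, 1080, 5_000_000),
    Rendition("720p", 1280, 720, 2_800_000),
    Rendition("480p", 854, 480, 1_400_000),
]))
```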

Advanced Streaming Engineering

Minimizing latency and ensuring reliability are essential for high-stakes broadcasts.

Low-Latency Streaming Techniques

- WebRTC-based streaming for sub-second latency in interactive experiences

- UDP-based protocols such as QUIC, which combines the transport and TLS handshakes, to cut connection-setup latency for real-time interactions

- Edge server deployments to shorten the network path to audiences worldwide
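The benefit of nearby servers follows from physics: light in optical fiber travels roughly 200 km per millisecond, which puts a hard floor under round-trip time. The sketch below computes that floor; the path lengths are illustrative, not measurements, and real routes add routing, queuing, and processing delay on top.

```python
FIBER_KM_PER_MS = 200.0  # approximate speed of light in optical fiber


def min_rtt_ms(path_km: float) -> float:
    """Lower bound on round-trip time over a fiber path of the given length.

    Real RTTs are higher: cable routes are longer than straight-line distance,
    and routers and queues add delay.
    """
    return 2 * path_km / FIBER_KM_PER_MS


# Illustrative path lengths only.
for label, km in [("nearby edge, 1,000 km", 1_000), ("distant origin, 9,000 km", 9_000)]:
    print(f"{label}: at least {min_rtt_ms(km):.0f} ms RTT")
```

Because a fresh TCP plus TLS 1.3 connection needs about two round trips before the first HTTP request is even sent, each millisecond of RTT is paid several times at stream start, which is why shorter paths and fewer handshakes both matter.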

High Availability Architectures

- Active-active server clusters with automatic failover mechanisms

- Real-time monitoring systems for immediate alerting on performance anomalies

- Warm standby configurations for fast failover where a full active-active cluster is not justified

Regional Compliance and Data Protection

Operating in Japan requires strict adherence to local regulations and content standards.

Legal and Regulatory Considerations

- Infrastructure that complies with Japan's Act on the Protection of Personal Information (APPI)

- Data residency policies for user-generated content storage

- Content moderation workflows aligned with regional advertising standards
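Once a data residency policy has been decided, it can be enforced in code before anything is written to storage. In this sketch the data categories, region names, and policy contents are all hypothetical; what the policy must actually contain depends on your legal requirements. Unknown categories are denied by default.

```python
# Hypothetical policy: which storage regions each category of data may use.
RESIDENCY_POLICY: dict[str, frozenset[str]] = {
    "user_uploads": frozenset({"jp-tokyo", "jp-osaka"}),  # user-generated content stays in Japan
    "viewer_pii": frozenset({"jp-tokyo", "jp-osaka"}),
    "public_segments": frozenset({"jp-tokyo", "jp-osaka", "sg", "us-west"}),  # CDN-cacheable video
}


def storage_allowed(category: str, region: str) -> bool:
    """True only if the policy explicitly permits this category in this region."""
    return region in RESIDENCY_POLICY.get(category, frozenset())
```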

Security Hardening for Compliance

- Firewall configurations to restrict non-essential incoming connections

- Encryption protocols for storage volumes containing sensitive data

- Regular security audits to meet international compliance standards
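Firewall rules that restrict non-essential connections usually follow a default-deny pattern: each open port lists the source networks allowed to reach it. In production these rules live in the host firewall or cloud security groups; the sketch below mirrors that logic so a rule set can be unit-tested or audited. The ports and networks are hypothetical, using the RFC 5737 and RFC 3849 documentation address ranges.

```python
import ipaddress

# Hypothetical rule set: destination port -> source networks allowed to reach it.
RULES = {
    443: [ipaddress.ip_network("0.0.0.0/0"), ipaddress.ip_network("::/0")],  # HTTPS playback for everyone
    1935: [ipaddress.ip_network("198.51.100.0/24")],  # RTMP ingest from the encoder site only
    22: [ipaddress.ip_network("203.0.113.0/24")],     # SSH from the operations network only
}


def permit(src_ip: str, dst_port: int) -> bool:
    """Default-deny: allow only if the port has a rule and the source matches it."""
    addr = ipaddress.ip_address(src_ip)
    # IPv4 addresses never match IPv6 networks (and vice versa), so mixed lists are safe.
    return any(addr in net for net in RULES.get(dst_port, []))
```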

Performance Tuning Principles

Continuous optimization maintains streaming quality as the audience grows.

Key Performance Indicators (KPIs)

- Time to First Byte (TTFB), which governs how quickly playback can start

- Rebuffering rate, since frequent stalls drive viewers away

- Server load thresholds during peak broadcast periods
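These KPIs are typically tracked as ratios and percentiles rather than averages, since a small share of slow sessions drives most complaints. The sketch below uses one common definition of rebuffering ratio (stalled time over total session time) and the standard library's `statistics.quantiles` for a 95th-percentile first-byte time; the function names are hypothetical.

```python
from statistics import quantiles


def rebuffering_ratio(stall_seconds: float, watch_seconds: float) -> float:
    """Share of a session spent stalled (0.0 means no buffering)."""
    total = stall_seconds + watch_seconds
    return stall_seconds / total if total else 0.0


def p95(samples_ms: list[float]) -> float:
    """95th percentile of samples such as first-byte times (needs at least 2 samples)."""
    # n=20 yields 19 cut points; the last one is the 95th percentile.
    return quantiles(samples_ms, n=20)[-1]
```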

Optimization Workflows

- A/B testing on encoding parameters for quality-bandwidth balance

- Dynamic bitrate adaptation based on real-time network quality

- Traffic shaping to prioritize streaming traffic on the server
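Dynamic bitrate adaptation is usually performed by the player, but the core rule is simple enough to sketch: pick the highest rendition that fits within a safety margin of measured throughput. The 0.8 safety factor and the example ladder are assumptions; production players also weigh buffer level and smooth their throughput estimates.

```python
def pick_bitrate(ladder_kbps: list[int], measured_kbps: float, safety: float = 0.8) -> int:
    """Highest rendition that fits within `safety` x measured throughput.

    Falls back to the lowest rendition when nothing fits; `ladder_kbps` must be non-empty.
    """
    budget = measured_kbps * safety
    fitting = [b for b in sorted(ladder_kbps) if b <= budget]
    return fitting[-1] if fitting else min(ladder_kbps)


ladder = [800, 1400, 2800, 5000]  # hypothetical bitrate ladder in kbps
print(pick_bitrate(ladder, measured_kbps=4000))
```

Keeping a margin below measured throughput trades a little peak quality for fewer stalls, which directly lowers the rebuffering rate.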

Case Studies: Real-World Architecture Outcomes

Analyzing implementations provides insights into effective server strategies.

- An electronics brand significantly reduced latency for Tokyo viewers by deploying a local colocation server with CDN integration

- A fashion retailer mitigated buffering issues through WebRTC-based streaming on Japanese infrastructure

- A brand improved conversion rates by optimizing servers for interactive high-definition streaming with low latency

Frequently Asked Technical Questions

Addressing common challenges in Japanese server deployments.

- What’s the optimal server approach for large concurrent audiences?

Dedicated hardware with redundant systems and traffic shaping capabilities.

- How can latency be reduced for global audiences?

A global CDN paired with Japanese origin servers that aggregate the content.

- Can Japanese servers support real-time multilingual translation?

Yes, by integrating server-side translation APIs with low-latency streaming pipelines.

Conclusion: Japanese Servers as a Strategic Technical Foundation

For tech teams aiming to lead in cross-border live streaming, Japanese servers offer proximity to Asian audiences, a well-defined regulatory environment, and room to scale. From initial architecture to ongoing optimization, the right infrastructure enables low-latency experiences, supports large audiences, and helps keep operations legally compliant. Treating server architecture as a strategic asset lets engineering teams build streaming ecosystems that meet, and ideally exceed, viewer expectations in global e-commerce. The future of cross-border live streaming belongs to teams that master regional server deployments, and Japanese infrastructure is a robust place to start.