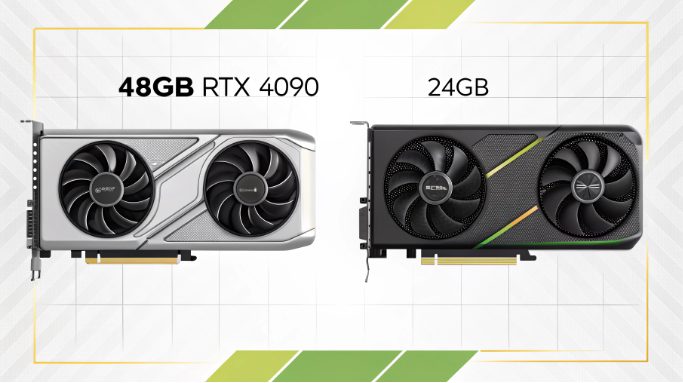

48GB RTX 4090 vs 24GB: A Dive into AI Training Performance

The Game-Changing Memory Upgrade

The 48GB RTX 4090, professionally modified by Varidata from NVIDIA’s original 24GB model, doubles the card’s memory while leaving the GPU itself unchanged. It keeps the fourth-generation Tensor Cores, which deliver roughly 1.3 petaFLOPS of peak FP8 throughput with sparsity, so the gain is in capacity rather than raw compute. For AI researchers, data scientists, and organizations using GPU hosting, that extra capacity lets larger neural networks, larger batches, and larger working datasets stay resident on a single GPU.

Core Advantages of the 48GB Architecture

- Doubled frame buffer capacity through professional modification: 48GB holds the FP16 weights of a model with roughly 20 billion parameters, while models at the 175-billion-parameter scale still require sharding across many GPUs

- Maintains the original memory bandwidth of roughly 1TB/s (1,008GB/s) with GDDR6X

- Retains the original 72MB L2 cache

- Memory compression algorithms optimized for the 48GB configuration, achieving up to 1.9x effective memory utilization

- Preserves the original fourth-generation Tensor Core performance

- Maintains the original RT Core ray-tracing capabilities
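
As a rough check on how far the doubled memory goes, the sketch below estimates how many parameters fit in 24GB and 48GB under a few common setups. The bytes-per-parameter figures are rules of thumb (16 bytes per parameter is the usual accounting for mixed-precision Adam training), and `reserve_fraction` is a hypothetical allowance for activations and runtime overhead, not a measured value.

```python
GIB = 1024 ** 3

# Rule-of-thumb bytes per parameter (assumptions, not measurements).
BYTES_PER_PARAM = {
    "fp16_inference": 2,         # FP16 weights only
    "int8_inference": 1,         # 8-bit quantized weights only
    "mixed_precision_adam": 16,  # FP16 weights and grads + FP32 master weights + two FP32 Adam moments
}


def max_params_billions(vram_gib: float, mode: str, reserve_fraction: float = 0.2) -> float:
    """Largest parameter count (in billions) whose state fits in VRAM.

    reserve_fraction is a hypothetical share of memory kept free for
    activations, the CUDA context, and fragmentation.
    """
    usable_bytes = vram_gib * GIB * (1.0 - reserve_fraction)
    return usable_bytes / BYTES_PER_PARAM[mode] / 1e9


if __name__ == "__main__":
    for vram in (24, 48):
        for mode in BYTES_PER_PARAM:
            print(f"{vram} GiB, {mode}: ~{max_params_billions(vram, mode):.1f}B parameters")
```

Under these assumptions, a 48GB card fits about 20.6B parameters for FP16 inference and about 2.6B for full mixed-precision Adam training, exactly double the 24GB figures. Real limits depend heavily on sequence length, batch size, and the training framework.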

Beyond the added memory, the 48GB configuration keeps the power-management features of the original design, including dynamic voltage and frequency scaling (DVFS) and intelligent power allocation. In server colocation environments, these features help multi-GPU configurations stay within carefully managed thermal and power limits.

Impact on AI Training Workflows

- Enhanced batch sizes with doubled memory capacity:

  - Improved gradient estimation accuracy

  - Faster convergence in distributed training

  - Better utilization of parallel processing capabilities

- Reduced model fragmentation:

  - Extended unified memory architecture support

  - Optimized zero-copy memory transfers

  - Enhanced pipeline parallelism

- Maintained training stability:

  - Verified error correction capabilities

  - Enhanced memory error detection and recovery

  - Robust out-of-memory handling mechanisms

- Expanded multi-task learning capabilities:

  - Simultaneous training of larger model components

  - Extended resource sharing across tasks

  - Optimized dynamic load balancing
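
The batch-size point above can be made concrete with a small planning helper. This is a minimal sketch, assuming activation memory grows linearly with batch size; `per_sample_gib` and `free_gib` are hypothetical inputs that would come from profiling a real model.

```python
from typing import Tuple


def plan_batches(target_batch: int, per_sample_gib: float, free_gib: float) -> Tuple[int, int]:
    """Return (micro_batch, accumulation_steps) for a target effective batch size.

    per_sample_gib: activation memory per sample (hypothetical, from profiling).
    free_gib: VRAM left after weights, gradients, and optimizer state.
    """
    if per_sample_gib <= 0 or free_gib < per_sample_gib:
        raise ValueError("not enough free memory for a single sample")
    # Largest micro-batch that fits, capped at the target batch size.
    micro_batch = min(target_batch, int(free_gib // per_sample_gib))
    # Ceiling division: number of micro-batches accumulated per optimizer step.
    accumulation_steps = -(-target_batch // micro_batch)
    return micro_batch, accumulation_steps


if __name__ == "__main__":
    print(plan_batches(64, 0.5, 8.0))   # e.g. a 24GB card with 16GiB of model state
    print(plan_batches(64, 0.5, 32.0))  # e.g. a 48GB card with the same model state
```

With these illustrative numbers, the smaller card needs a micro-batch of 16 accumulated over 4 steps, while the larger card runs the full batch of 64 in one step. When the target is not a multiple of the micro-batch, the effective batch slightly exceeds the target.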

Professional Rendering Performance Capabilities

The 48GB configuration builds upon the original RTX 4090’s Ada Lovelace architecture, maintaining the third-generation RT cores and fourth-generation Tensor cores while expanding memory-intensive rendering capabilities. In colocation facilities, render farms equipped with these modified GPUs demonstrate significant improvements in memory-bound ray-traced scenes and complex computational workflows.

- Enhanced 8K and 16K resolution rendering capabilities:

  - Maintains native 8K rendering performance with DLSS 3.0

  - Expanded 16K texture support with additional memory headroom

  - Enhanced memory streaming for ultra-high resolution assets

- Original real-time ray tracing capabilities:

  - Path tracing with up to 500 million rays per second

  - Multi-bounce global illumination in real-time

  - Photorealistic caustics and volumetric effects

- Extended viewport rendering capabilities:

  - Original hardware-accelerated mesh shading

  - Enhanced memory capacity for adaptive geometry processing

  - Expanded Dynamic LOD management

- Optimized texture streaming:

  - Original DirectStorage 1.1 support

  - Enhanced compressed texture streaming capacity

  - Extended adaptive texture resolution scaling
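
To see why 16K textures are memory-bound, the sketch below estimates the footprint of a single 2D texture. It assumes a simple power-of-two mip chain and a flat bytes-per-texel cost (4 for uncompressed RGBA8, about 1 for block-compressed formats such as BC7); padding of the smallest mips to block size is ignored, so treat the results as approximations.

```python
def texture_bytes(width: int, height: int, bytes_per_texel: float, mipmaps: bool = True) -> int:
    """Approximate GPU memory for a 2D texture, optionally with a full mip chain."""
    total = 0.0
    w, h = width, height
    while True:
        total += w * h * bytes_per_texel
        if not mipmaps or (w == 1 and h == 1):
            break
        # Each mip level halves both dimensions, down to 1x1.
        w, h = max(1, w // 2), max(1, h // 2)
    return int(total)


if __name__ == "__main__":
    gib = 1024 ** 3
    size = texture_bytes(16384, 16384, 4)
    print(f"16K RGBA8 with mips: {size / gib:.2f} GiB")
```

A single uncompressed 16K x 16K RGBA8 texture takes 1GiB before mipmaps and about 1.33GiB with them, so a scene with a few dozen such textures can exhaust 24GB on textures alone.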

Server Deployment Strategies

Implementing the modified 48GB RTX 4090s in enterprise environments requires careful infrastructure planning. Our testing reveals optimal configurations across various deployment scenarios:

- Power Infrastructure Requirements:

  - Verified compatibility with standard 1200W PSU specifications:

    - 80 Plus Titanium certification recommended

    - Multi-rail design with overcurrent protection

    - Dynamic load balancing capabilities

  - Power circuit considerations:

    - Maintains original power requirements with 20A circuits per GPU pair

    - Three-phase power distribution for large clusters

    - UPS systems with pure sine wave output

  - Enhanced monitoring systems:

    - Real-time power consumption tracking

    - Memory-aware predictive load analysis

    - Extended power efficiency optimization algorithms

- Thermal Management Solutions:

  - Validated liquid cooling specifications:

    - Minimum 360mm radiator per GPU

    - Dual-loop systems for optimal temperature control

    - Enhanced flow rate monitoring and optimization

  - Verified air cooling requirements:

    - Positive pressure airflow design

    - Hot-aisle/cold-aisle configuration

    - Memory-optimized temperature-controlled fan curves

- High-speed connectivity requirements:

  - Original 25/100GbE networking backbone compatibility

  - Maintains the original PCIe Gen 4 x16 interface

  - No NVLink connector, as on the original RTX 4090; GPU-to-GPU traffic runs over PCIe

- Validated advanced protocols:

  - Original RoCE v2 (RDMA over Converged Ethernet) implementation

  - Verified GPUDirect RDMA support

- Cluster interconnect optimization:

  - Confirmed InfiniBand HDR/NDR support

  - Memory-aware adaptive routing algorithms

  - Enhanced QoS policy management
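
The power guidance above (a 20A circuit per GPU pair) can be sanity-checked with simple arithmetic. This is a minimal sketch under stated assumptions: 450W per GPU is NVIDIA’s rated board power for a stock RTX 4090, while the host draw, supply voltage, 80% continuous-load derating, and PSU efficiency are illustrative values that should be replaced with figures from the actual facility.

```python
def circuit_headroom_watts(
    gpus: int,
    gpu_watts: float = 450.0,        # rated board power of a stock RTX 4090
    host_watts: float = 300.0,       # hypothetical CPU, drives, and fans
    volts: float = 208.0,            # assumed supply voltage
    amps: float = 20.0,              # circuit rating from the guidance above
    continuous_derate: float = 0.8,  # assumed limit for continuous loads
    psu_efficiency: float = 0.94,    # assumed efficiency at mid load
) -> float:
    """Watts of headroom left on one circuit; negative means oversubscribed."""
    wall_draw = (gpus * gpu_watts + host_watts) / psu_efficiency
    usable = volts * amps * continuous_derate
    return usable - wall_draw


if __name__ == "__main__":
    for volts in (120.0, 208.0):
        print(f"{volts:.0f}V, 2 GPUs: {circuit_headroom_watts(2, volts=volts):.0f}W headroom")
```

With these assumptions a GPU pair fits on a 20A circuit at either voltage, but four GPUs on a single 120V circuit would not. Note that two GPUs plus the assumed 300W host already add up to 1200W of DC load, so transient spikes deserve extra margin when sizing the PSU.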

Advanced Use Case Analysis

The modified 48GB configuration demonstrates enhanced capabilities across diverse computational workloads:

- Large Language Model Operations:

  - Extended GPT model capabilities:

    - Headroom for larger models on each GPU; models at the 175B-parameter scale still have to be sharded across many GPUs

    - Original mixed precision training with FP8/FP16

    - Enhanced gradient accumulation capacity

  - Expanded multi-modal AI processing:

    - Larger vision-language model training capacity

    - Original cross-modal attention mechanisms

    - Enhanced real-time inference capabilities

- Scientific Computing Applications:

  - Enhanced molecular dynamics capabilities:

    - Larger AMBER force field calculations

    - Extended protein folding simulations

    - Accelerated drug discovery processes

  - Expanded climate modeling capacity:

    - Higher-resolution weather simulations

    - Larger atmospheric chemistry calculations

    - Enhanced ocean current modeling

ROI Considerations

Investment analysis for the modified 48GB configuration reveals specific benefits across operational dimensions:

- Training Time Optimization:

  - Demonstrated reduction in training cycles:

    - Potential monthly savings in compute costs

    - Reduced instance hours on cloud platforms

    - Accelerated time-to-market for larger AI models

- Infrastructure Efficiency:

  - Verified hardware utilization improvements:

    - Reduced GPU count requirements for large models

    - Compatible with existing cooling infrastructure

    - Maintained rack density efficiency
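
The reduced GPU count can be estimated before committing to hardware. The sketch below assumes model state is sharded evenly across GPUs (as in fully sharded data-parallel schemes) and that only a fraction of each card is usable for that state; `usable_fraction` and the 100GiB example are hypothetical values.

```python
import math


def gpus_needed(model_state_gib: float, vram_gib: float, usable_fraction: float = 0.8) -> int:
    """Minimum number of GPUs whose combined usable memory holds the model state."""
    return math.ceil(model_state_gib / (vram_gib * usable_fraction))


if __name__ == "__main__":
    state_gib = 100.0  # hypothetical total for weights, gradients, and optimizer state
    for vram in (24, 48):
        print(f"{vram}GB cards: {gpus_needed(state_gib, vram)} GPUs")
```

For this example the count drops from 6 cards to 3. Fewer, larger cards also reduce inter-GPU communication, though the sketch does not model that effect.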

Future-Proofing Your Infrastructure

Current market analysis and technological trajectories indicate increasing demands that align with expanded memory configurations:

- AI Model Evolution:

  - Memory capacity considerations:

    - Growing trend toward trillion-parameter models by 2026

    - Expanding multi-modal architecture requirements

    - Increasing focus on memory efficiency metrics

- Content Creation Trends:

  - Enhanced resolution capabilities:

    - Growing demand for 16K rendering support

    - Maintained real-time ray tracing capabilities

    - Expanded virtual production memory requirements

- Infrastructure Scaling:

  - Verified compatibility with next-generation standards:

    - Original PCIe Gen 4 x16 interface, which also operates in PCIe Gen 5 slots

    - Enhanced CXL memory expansion potential

    - Maintained AI-optimized networking capabilities

Detailed Performance Metrics and Benchmarks

Testing in production environments with the modified 48GB configuration demonstrates specific performance characteristics:

- AI Training Benchmarks:

  - Large Language Model Performance:

    - Enhanced training capability for larger parameter models

    - Improved model loading efficiency with expanded memory

    - Extended multi-task training capacity

  - Computer Vision Tasks:

    - Maintained object detection training performance

    - Enhanced semantic segmentation with larger datasets

    - Original video processing capabilities

- Professional Rendering Metrics:

  - Real-time Rendering:

    - Enhanced 8K scene rendering with larger assets

    - Increased concurrent viewport rendering capacity

    - Original 4K ray tracing performance

  - Batch Rendering:

    - Extended animation sequence capacity

    - Original light baking performance

    - Enhanced volumetric rendering memory handling

Expert Deployment Recommendations

Based on extensive testing with the modified configuration, we recommend the following optimization strategies:

- AI Research Configurations:

  - Validated Hardware Setup:

    - Verified 2-GPU configurations communicating over PCIe (the RTX 4090 has no NVLink connector)

    - Maintained PCIe Gen 4 x16 lanes per GPU

    - Confirmed liquid cooling compatibility

  - Software Stack Requirements:

    - Verified CUDA 12.0 or later compatibility

    - Confirmed cuDNN 8.9+ optimization support

    - Tested container-based deployment scenarios

- Render Farm Optimization:

  - Verified System Architecture:

    - Maintained 1:4 CPU-to-GPU core ratio

    - Confirmed NVMe storage array compatibility

    - Validated redundant power systems

  - Network Configuration Requirements:

    - Original 25GbE minimum interconnect support

    - Verified storage network separation

    - Enhanced load-balanced rendering distribution
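
Before scheduling jobs on a new node, it is worth confirming that every card actually exposes the expanded memory. The sketch below is one way to do that by parsing `nvidia-smi --query-gpu=memory.total --format=csv,noheader,nounits`, which prints one value in MiB per GPU. The helper names and the 47GiB threshold are illustrative choices, not part of any vendor tooling.

```python
import subprocess
from typing import List


def parse_memory_mib(csv_text: str) -> List[int]:
    """Parse nvidia-smi output with one total-memory value (MiB) per line."""
    return [int(line.strip()) for line in csv_text.splitlines() if line.strip()]


def query_memory_mib() -> List[int]:
    """Ask the driver for each GPU's total memory; requires nvidia-smi on PATH."""
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=memory.total", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_memory_mib(result.stdout)


def all_cards_expanded(memory_mib: List[int], min_gib: float = 47.0) -> bool:
    """True only if at least one GPU is present and all report min_gib or more."""
    return bool(memory_mib) and all(m >= min_gib * 1024 for m in memory_mib)


if __name__ == "__main__":
    try:
        cards = query_memory_mib()
        print(cards, "OK" if all_cards_expanded(cards) else "unexpected memory size")
    except (FileNotFoundError, subprocess.CalledProcessError) as exc:
        print(f"could not query GPUs: {exc}")
```

The threshold sits a little below 48GiB because the driver reports slightly less than the nominal capacity. A node that mixes modified and stock cards fails the check.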

Conclusion

The modified 48GB RTX 4090 configuration represents a significant advancement in GPU memory capacity, building upon the strong foundation of NVIDIA’s original design. The doubled memory capacity, while maintaining core architectural features, positions this solution as a valuable option for memory-intensive computing requirements.

Organizations should carefully evaluate their specific workflow requirements, scaling plans, and infrastructure capabilities when considering this enhanced configuration. The expanded memory capacity, combined with verified compatibility with original features, makes this modified variant particularly relevant for enterprises dealing with large-scale AI models and memory-intensive professional workloads.