Japan Server Latency Testing & Optimization

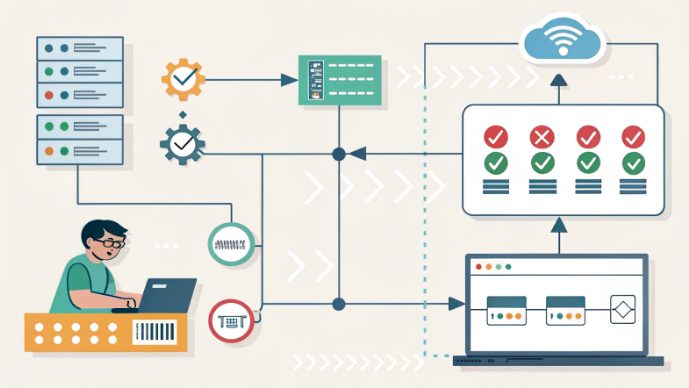

In global digital infrastructure, Japanese servers stand out for their strategic location, robust connectivity, and compliance advantages, especially for businesses targeting East Asia. Yet even well-provisioned servers can suffer from network latency that hinders real-time applications, data transfers, and user experience. This guide covers technical diagnostics, practical optimizations, and best practices for engineers managing Japanese hosting or colocation environments.

Understanding Network Latency Fundamentals

Before looking at solutions, it helps to be precise about what latency means. Network latency is the time data takes to cross the network; in practice it is usually measured as round-trip time (RTT), the time for a packet to travel from client to server and for the reply to come back. This is distinct from throughput, which is the volume of data transferred per unit of time. For Japanese servers serving mainland China, typical RTT falls between 50ms and 150ms, and it fluctuates with several factors:

- Physical distance and signal propagation speed through fiber optic cables

- Network congestion at international exchange points, such as Hong Kong or Seattle peering locations

- Router efficiency and routing table complexity across autonomous systems

- Server hardware performance, including CPU scheduling and memory latency on Intel or AMD-based systems

For latency-sensitive applications like online gaming, financial trading, or HD video conferencing, even a 20ms increase in RTT can degrade performance significantly. Identifying whether latency originates from the server stack, network path, or client-side environment is the first step in systematic optimization.
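A useful baseline for that attribution is the physical floor set by propagation delay. The sketch below estimates the minimum possible RTT between two cities from their great-circle distance, assuming light travels through fiber at roughly c/1.47 (about 204,000 km/s). The city coordinates and the refractive index are approximate values chosen for illustration, and real cable routes are longer than the great-circle path, so measured RTTs always sit above this floor.

```python
import math

# Approximate city-centre coordinates (latitude, longitude); illustrative values
CITIES = {
    "Tokyo": (35.6762, 139.6503),
    "Beijing": (39.9042, 116.4074),
    "Shanghai": (31.2304, 121.4737),
}

EARTH_RADIUS_KM = 6371.0
SPEED_OF_LIGHT_KM_S = 299_792.458
FIBER_REFRACTIVE_INDEX = 1.47  # assumption: typical value for single-mode fiber


def great_circle_km(a, b):
    """Haversine distance in kilometres between two (lat, lon) points."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    dlat, dlon = lat2 - lat1, lon2 - lon1
    h = math.sin(dlat / 2) ** 2 + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def min_rtt_ms(distance_km):
    """Lower bound on RTT: out and back along a perfectly straight fiber."""
    fiber_speed_km_s = SPEED_OF_LIGHT_KM_S / FIBER_REFRACTIVE_INDEX
    return 2 * distance_km / fiber_speed_km_s * 1000


if __name__ == "__main__":
    for city in ("Beijing", "Shanghai"):
        d = great_circle_km(CITIES["Tokyo"], CITIES[city])
        print(f"Tokyo-{city}: {d:.0f} km, theoretical minimum RTT {min_rtt_ms(d):.1f} ms")
```

For Tokyo to Beijing this works out to roughly 2,100 km and a floor of about 20 ms, so a measured RTT several times higher points to routing detours, queuing, or processing rather than raw distance.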

Pro-Level Latency Testing Tools & Methodologies

Accurate diagnosis requires a toolkit of both standard and advanced utilities. Here’s a breakdown of essential tools and how to use them effectively:

Core Diagnostic Utilities

- ICMP Ping: The foundational tool for measuring RTT. On Linux/macOS, `ping -c 100 server_ip` sends 100 packets and reports the RTT statistics; Windows users can run `ping -n 100 server_ip`. Key metrics to track: minimum/maximum/average RTT and packet loss percentage.

- Traceroute: Reveals the network path between client and server. Linux/macOS: `traceroute server_ip`; Windows: `tracert server_ip`. Look for hops where latency jumps sharply (for example, past 100ms) or packet loss appears, and check whether the increase carries through to every later hop. Routers often rate-limit or deprioritize replies to traceroute probes, so high latency or loss at a single hop that disappears further along the path is usually not a real bottleneck.

- MTR (My Traceroute): Combines ping and traceroute, showing latency and loss per hop in real time. Its report mode suits long-running measurements during peak traffic periods: `mtr --report-wide --report-cycles 100 server_ip`.

- Speedtest CLI: Measures upload/download throughput and latency against test servers worldwide. Install via `curl -s https://install.speedtest.net/app/cli/install.sh | sh` and run `speedtest --server-id=12345` (replace 12345 with the ID of a Japanese test server).
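The traceroute and MTR reading rules can be made more mechanical. The sketch below assumes you have already transcribed an MTR report into (host, loss %, average ms) entries, one per hop; the `Hop` type, the hop data, and the thresholds (a 30 ms jump, 1% loss) are hypothetical. It flags a latency jump only when later hops stay elevated, and treats loss as real only when it is visible at the final hop.

```python
from typing import List, NamedTuple, Optional


class Hop(NamedTuple):
    host: str
    loss_pct: float
    avg_ms: float


def find_latency_jump(hops: List[Hop], jump_ms: float = 30.0) -> Optional[int]:
    """Return the index of the first hop whose latency increase persists to the end."""
    for i in range(1, len(hops)):
        increase = hops[i].avg_ms - hops[i - 1].avg_ms
        # The jump only counts if every later hop stays within jump_ms / 2 of the
        # new level or above; isolated spikes usually mean ICMP deprioritization.
        if increase >= jump_ms and all(
            later.avg_ms >= hops[i].avg_ms - jump_ms / 2 for later in hops[i + 1:]
        ):
            return i
    return None


def real_loss(hops: List[Hop], threshold_pct: float = 1.0) -> bool:
    """Loss counts only if it is visible at the destination (last hop)."""
    return bool(hops) and hops[-1].loss_pct >= threshold_pct


if __name__ == "__main__":
    # Hypothetical report: hop 2 spikes and drops probes but traffic passes through
    # normally; hop 4 is an international link that adds sustained latency.
    report = [
        Hop("gw.local", 0.0, 1.2),
        Hop("isp-core", 0.0, 4.8),
        Hop("busy-router", 40.0, 150.0),
        Hop("isp-edge", 0.0, 6.1),
        Hop("intl-exchange", 0.0, 58.3),
        Hop("tokyo-edge", 0.0, 60.2),
        Hop("server", 0.0, 61.0),
    ]
    print("latency jump at hop index:", find_latency_jump(report))
    print("end-to-end loss:", real_loss(report))
```

On the sample report, the spike at `busy-router` is ignored because later hops fall back to single-digit latency, while the step at `intl-exchange` is reported because it persists to the server.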

Automated Testing with Scripting

For large-scale environments, Python scripts offer programmability. Here’s a basic example using pythonping to test latency across multiple endpoints:

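```python
from datetime import datetime

import pythonping  # third-party: pip install pythonping; sends raw ICMP, so run as root


def test_latency(host, count=50):
    """Ping `host` `count` times and summarize average RTT and packet loss."""
    results = pythonping.ping(host, count=count)
    # Timed-out requests are still included in the results, so keep only replies
    replies = [r for r in results if r.success]
    avg_latency = (
        sum(r.time_elapsed_ms for r in replies) / len(replies) if replies else None
    )
    loss_rate = (count - len(replies)) / count * 100
    return {
        "timestamp": datetime.now().isoformat(),
        "host": host,
        "average_latency_ms": avg_latency,  # None if every request timed out
        "packet_loss_percent": loss_rate,
    }


# Test multiple Japanese server IPs (replace these documentation-range placeholders)
servers = ["203.0.113.1", "198.51.100.1"]

for server in servers:
    print(test_latency(server))
```

Standardized Testing Protocols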

To ensure reliable data, follow this structured approach:

- Test at three key times, stated in China Standard Time (UTC+8): 9 AM (start of the China workday), 2 PM (an early-afternoon lull), and 8 PM (peak evening traffic).

- Run tests from at least five geographic locations: Beijing, Shanghai, Guangzhou, Tokyo, and a US West Coast node (e.g., San Francisco).

- Simulate application workloads: measure static HTML load time (for example, `time wget --timeout=10 -q -O /dev/null URL`), dynamic API response time (via `curl -s -o /dev/null -w "Time: %{time_total}s\n" https://api.server.com/data`), and WebSocket latency for real-time apps.
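A single total time does not show whether the delay is in the network or on the server. curl's `--write-out` timing variables (`time_namelookup`, `time_connect`, `time_appconnect`, `time_starttransfer`, `time_total`) are cumulative from the start of the request, so subtracting consecutive values splits a request into phases. The sketch below is one way to do that; the phase names and the `measure` helper are assumptions, and the URL is the placeholder from the list above.

```python
import subprocess

# Cumulative timings reported by curl's --write-out, in seconds
CURL_FORMAT = (
    "namelookup=%{time_namelookup} connect=%{time_connect} "
    "appconnect=%{time_appconnect} starttransfer=%{time_starttransfer} "
    "total=%{time_total}"
)


def parse_curl_timing(output: str) -> dict:
    """Convert curl's cumulative timings into per-phase durations in ms."""
    t = {k: float(v) for k, v in (pair.split("=") for pair in output.split())}
    # time_appconnect is 0 for plain HTTP, where there is no TLS handshake
    tls_done = t["appconnect"] if t["appconnect"] > 0 else t["connect"]
    phases = {
        "dns": t["namelookup"],
        "tcp_connect": t["connect"] - t["namelookup"],
        "tls_handshake": tls_done - t["connect"],
        # One request/response round trip plus server processing time
        "ttfb_after_handshake": t["starttransfer"] - tls_done,
        "transfer": t["total"] - t["starttransfer"],
    }
    return {name: round(sec * 1000, 3) for name, sec in phases.items()}


def measure(url: str, timeout_s: int = 10) -> dict:
    """Run curl once against `url` and return the phase breakdown."""
    out = subprocess.run(
        ["curl", "-s", "-o", "/dev/null", "--max-time", str(timeout_s),
         "-w", CURL_FORMAT, url],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_curl_timing(out)


if __name__ == "__main__":
    print(measure("https://api.server.com/data"))
```

If `tcp_connect` is close to the ping RTT but `ttfb_after_handshake` is much larger, the extra time is being spent on the server rather than on the path.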

Multi-Layered Optimization Strategies

Effective latency reduction requires addressing issues at the server, network, and client layers. Let’s break down technical optimizations for each domain.

Server-Level Tweaks for Low-Latency Performance

Start with hardware and OS configurations. For Intel or AMD servers running Linux:

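- CPU Scheduling: Give latency-critical processes a real-time policy so ordinary tasks cannot preempt them, for example `chrt -f -p 99 $(pidof -s application)` (SCHED_FIFO at priority 99; `-s` makes `pidof` return a single PID, since `chrt -p` takes one). Use this sparingly: a busy SCHED_FIFO task at top priority can starve other work on the same core.

- TCP Tuning: Adjust kernel parameters in `/etc/sysctl.conf` to reduce connection setup and teardown overhead, then apply them with `sysctl -p`: `net.ipv4.tcp_syncookies = 1`, `net.ipv4.tcp_tw_reuse = 1`, `net.ipv4.tcp_fin_timeout = 30`, `net.core.default_qdisc = fq`. Do not set `net.ipv4.tcp_tw_recycle`, which older guides recommend: it broke connections from clients behind NAT and was removed in Linux 4.12.

- Memory Management: Use huge pages to reduce translation lookaside buffer (TLB) misses, for example `echo 1024 > /sys/kernel/mm/hugepages/hugepages-2048kB/nr_hugepages` (as root), which reserves 1024 × 2 MiB = 2 GiB. The application must be configured to use these pages (for example through hugetlbfs or its own huge-page setting); otherwise the reserved memory sits idle.

- Application-Level Optimizations: Enable HTTP/2 to multiplex requests over a single connection, enable TLS 1.3 for faster handshakes (one round trip for a full handshake, versus two in TLS 1.2), and cache static assets in memory or at the edge so they are served without extra processing.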
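After editing `/etc/sysctl.conf` and loading it, it is worth confirming that the running kernel actually picked up the values. The sketch below reads each key from `/proc/sys` (where `net.ipv4.tcp_fin_timeout` lives at `/proc/sys/net/ipv4/tcp_fin_timeout`) and reports mismatches. The target values are taken from the TCP tuning list, leaving out `tcp_tw_recycle`, which no longer exists on current kernels; the `check_sysctl` name and its `proc_root` parameter are illustrative, the latter only so the function can be pointed at a test directory.

```python
from pathlib import Path

# Target values from the TCP tuning list above
TARGETS = {
    "net.ipv4.tcp_syncookies": "1",
    "net.ipv4.tcp_tw_reuse": "1",
    "net.ipv4.tcp_fin_timeout": "30",
    "net.core.default_qdisc": "fq",
}


def check_sysctl(targets: dict, proc_root: str = "/proc/sys") -> dict:
    """Return {key: (expected, actual)} for every key that does not match.

    `actual` is None when the key does not exist on this kernel.
    """
    mismatches = {}
    for key, expected in targets.items():
        path = Path(proc_root, *key.split("."))
        try:
            actual = path.read_text().strip()
        except FileNotFoundError:
            actual = None
        if actual != expected:
            mismatches[key] = (expected, actual)
    return mismatches


if __name__ == "__main__":
    for key, (want, got) in check_sysctl(TARGETS).items():
        print(f"{key}: expected {want!r}, found {got!r}")
```

An empty result means every target is active; a `None` value usually means the kernel is too old or too new for that parameter.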

Network Infrastructure Enhancements

Network latency often stems from suboptimal routing or bandwidth constraints. Here’s how to address them:

- Premium Network Routes: Opt for China Telecom's CN2 GIA (Global Internet Access) lines, which offer dedicated paths with minimal congestion. Compare latency: standard routes may average 120ms to Beijing, while CN2 GIA can bring this down to 80ms or lower.

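- BGP Multi-Homing: Deploy servers with BGP sessions to multiple ISPs (e.g., NTT, KDDI, China Telecom). This adds redundancy and more candidate paths. BGP selects routes by policy and AS-path attributes rather than measured latency, so steering traffic onto the fastest path requires tuning local preference or using a latency-aware route optimizer.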

- Edge Computing Distribution: Place caching nodes in Tokyo, Osaka, and Fukuoka to serve regional clients locally. Use a content delivery network (CDN) like Cloudflare or Vercel Edge Network, configured to bypass unnecessary hops by leveraging anycast IPs.

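- Quality of Service (QoS): Prioritize latency-sensitive traffic (e.g., VoIP, gaming packets) using DiffServ (DSCP) markings or MPLS traffic classes. These markings are generally honored only inside networks you control, not across the public internet. On Linux, start with an HTB (Hierarchical Token Bucket) root qdisc, `tc qdisc add dev eth0 root handle 1: htb default 10`, then add `tc class` entries with rates and priorities and `tc filter` rules that steer marked traffic into them; unclassified traffic falls into class 1:10.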
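DiffServ works by marking the 6-bit DSCP field in the IP header, which occupies the upper six bits of the legacy TOS byte. An application can mark its own latency-sensitive traffic so that `tc` filters on the host, or QoS policies on routers you control, can match it. The sketch below assumes Linux or macOS, where `socket.IP_TOS` is available, and defaults to Expedited Forwarding (DSCP 46), the class conventionally used for voice; the helper names are illustrative.

```python
import socket

DSCP_EF = 46  # Expedited Forwarding (RFC 3246), typical for VoIP and game traffic


def dscp_to_tos(dscp: int) -> int:
    """DSCP occupies the top 6 bits of the TOS byte; the low 2 bits are ECN."""
    if not 0 <= dscp < 64:
        raise ValueError("DSCP must fit in 6 bits")
    return dscp << 2


def marked_udp_socket(dscp: int = DSCP_EF) -> socket.socket:
    """Create an IPv4 UDP socket whose outgoing packets carry the given DSCP."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_TOS, dscp_to_tos(dscp))
    return sock


if __name__ == "__main__":
    s = marked_udp_socket()
    print("TOS byte:", hex(s.getsockopt(socket.IPPROTO_IP, socket.IP_TOS)))
    s.close()
```

With EF, the TOS byte is 46 << 2 = 184 (0xb8), which is the value a `tc filter` u32 rule would match on the TOS field (under mask 0xfc, so ECN bits are ignored) to place these packets in the priority class.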

Client-Side and End-User Optimizations

Even with server-side improvements, client configurations can impact perceived latency. Advise users to:

- Prefer wired Ethernet over Wi-Fi, which can add 10-30ms of latency due to wireless signal processing.

- Enable DNS prefetching on web pages: adding `<link rel="dns-prefetch" href="https://server.com">` to the HTML `<head>` lets browsers resolve the hostname before it is needed.

- Use VPNs judiciously: select protocols like WireGuard (lower latency) over OpenVPN, and avoid free services with oversubscribed servers.

- Update network drivers and firmware, especially for Intel or AMD network interface cards (NICs), to ensure optimal interrupt coalescing and offloading settings.
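DNS prefetching helps because it hides resolver round trips from the page load. To see how much a lookup costs from a given client, you can time the system resolver directly. The sketch below uses the standard `socket.getaddrinfo` and is only an approximation, since the operating system or a local resolver may answer repeat lookups from cache; the `resolve_time_ms` name is illustrative and `server.com` is the placeholder host from the list above.

```python
import socket
import time


def resolve_time_ms(hostname: str, port: int = 443) -> float:
    """Time one call to the system resolver for `hostname`, in milliseconds."""
    start = time.perf_counter()
    socket.getaddrinfo(hostname, port, proto=socket.IPPROTO_TCP)
    return (time.perf_counter() - start) * 1000


if __name__ == "__main__":
    host = "server.com"  # placeholder host
    first = resolve_time_ms(host)
    second = resolve_time_ms(host)  # may be served from a cache
    print(f"first lookup {first:.1f} ms, repeat lookup {second:.1f} ms")
```

A large gap between the first and repeat lookup suggests that prefetching (or a closer resolver) would remove a noticeable share of perceived latency.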

Case Studies: Real-World Latency Mitigation

Let’s examine two scenarios where systematic optimization delivered tangible results.

Case 1: Low-Latency Gaming Server for Southeast Asia

A mobile game studio using a Tokyo colocation server experienced 150ms latency to Bangkok during peak hours. The optimization roadmap included:

- Switching from a standard IPv4 route to a BGP-anycast network with direct peering in Singapore.

- Moving game traffic to QUIC, which runs over UDP and combines the transport and TLS handshakes, reducing handshake latency by 50% compared to TCP.

- Upgrading server NICs to 10Gbps Intel X550-T2 adapters with RSS (Receive Side Scaling) enabled for multi-core processing.

Result: Average latency dropped to 65ms, with packet loss reduced from 8% to 0.5%, improving in-game responsiveness significantly.

Case 2: Financial Data Feed Optimization

A hedge fund relying on real-time Tokyo Stock Exchange data faced 200ms delays caused by TLS 1.2 connection overhead and suboptimal routing. Solutions included:

- Migrating to TLS 1.3 and enabling 0-RTT resumption for recurring connections, limited to idempotent requests because 0-RTT data can be replayed.

- Deploying a dedicated low-latency link between the server and exchange point, using NVIDIA SmartNICs for offloading encryption processing.

- Optimizing database query pipelines with in-memory caching on AMD EPYC servers to reduce server-side processing delays.

Outcome: End-to-end latency dropped to 90ms, meeting the fund's latency requirements for its trading applications.
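The protocol changes in both case studies mostly save round trips before the first byte of application data. A rough model, which ignores DNS, packet loss, and server processing, counts setup round trips: TCP plus TLS 1.2 needs three, TCP plus TLS 1.3 needs two, QUIC needs one, and 0-RTT resumption removes the TLS round trip (though TCP still needs its own handshake). The sketch below applies that model; the 75 ms RTT is an assumed example value, not a figure from the case studies.

```python
# Setup round trips before the client can send its first request
SETUP_RTTS = {
    "TCP + TLS 1.2 (full handshake)": 3,
    "TCP + TLS 1.3 (full handshake)": 2,
    "TCP + TLS 1.3 (0-RTT resumption)": 1,
    "QUIC (1-RTT)": 1,
    "QUIC (0-RTT resumption)": 0,
}


def time_to_first_response_ms(setup_rtts: int, rtt_ms: float) -> float:
    """Setup round trips plus one round trip for the request and response."""
    return (setup_rtts + 1) * rtt_ms


if __name__ == "__main__":
    rtt = 75.0  # assumed client-to-Tokyo RTT in ms
    for name, n in SETUP_RTTS.items():
        print(f"{name:35s} {time_to_first_response_ms(n, rtt):6.0f} ms")
```

Halving the setup round trips, as in QUIC versus TCP plus TLS 1.3, is consistent with the 50% handshake saving reported in Case 1; actual gains depend on loss, congestion control, and how often connections are reused.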

Frequently Asked Questions (FAQs)

Basic Troubleshooting

- Q: What’s the normal latency range for Japanese servers serving China?

- A: Expect 60-120ms for major cities like Beijing/Shanghai, with Guangzhou often seeing 40-80ms via direct submarine cables.

- Q: Why does my ping show low latency, but apps still feel slow?

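- A: Ping measures a single round trip with small, unencrypted ICMP echo packets. A real application request adds a DNS lookup, TCP and TLS handshakes (each costing at least one extra round trip), server processing, and transfer time under TCP congestion control. Networks may also queue or prioritize ICMP differently from application traffic.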

Advanced Scenarios

- Q: How to handle temporary international bandwidth shortages?

- A: Activate failover connections to secondary ISPs, use traffic shaping to prioritize critical data, or temporarily route through nearby edge nodes with available capacity.

- Q: Can multicloud architectures help manage latency?

- A: Yes. Deploy redundant servers across Japanese data centers from more than one provider, and use a global load balancer that directs traffic to the lowest-latency healthy endpoint in real time.
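A global load balancer or GeoDNS service normally does this with its own health checks, but the core idea can be sketched in a few lines: measure TCP connect time to each candidate endpoint and route to the fastest one that responds. The function names are illustrative, the addresses in the usage block are documentation placeholders, and a production balancer would smooth measurements over time rather than trusting a single sample.

```python
import socket
import time
from typing import Dict, Optional, Tuple

Endpoint = Tuple[str, int]


def tcp_connect_ms(endpoint: Endpoint, timeout_s: float = 2.0) -> Optional[float]:
    """Return TCP connect time in ms (includes DNS if a hostname is given), or None."""
    start = time.perf_counter()
    try:
        with socket.create_connection(endpoint, timeout=timeout_s):
            return (time.perf_counter() - start) * 1000
    except OSError:  # refused, unreachable, or timed out
        return None


def pick_lowest_latency(samples: Dict[Endpoint, Optional[float]]) -> Optional[Endpoint]:
    """Choose the reachable endpoint with the smallest measured latency."""
    reachable = {ep: ms for ep, ms in samples.items() if ms is not None}
    return min(reachable, key=reachable.get) if reachable else None


if __name__ == "__main__":
    candidates = [("203.0.113.10", 443), ("198.51.100.20", 443)]  # placeholders
    samples = {ep: tcp_connect_ms(ep) for ep in candidates}
    print(samples, "->", pick_lowest_latency(samples))
```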

Mastering Japanese server latency requires a combination of precise diagnostics, hardware-software tuning, and strategic network design. By systematically addressing each layer of the stack—from physical infrastructure to application code—tech professionals can unlock the full potential of low-latency environments. Whether managing a high-frequency trading platform, global SaaS application, or regional gaming service, the principles outlined here provide a framework for sustained performance optimization. Stay vigilant with ongoing monitoring, adapt to evolving network conditions, and leverage cutting-edge tools to keep your infrastructure ahead of latency challenges.