Edge Computing: Japan Game Server Latency Optimization

Japan’s gaming industry thrives on precision, speed, and seamless player experiences, but latency remains a persistent challenge for developers and hosting providers. Traditional centralized server architectures struggle to meet the demands of Japan’s unique geographic and network landscape, leading to frustrating delays that break immersion. Enter edge computing—a distributed framework that processes data closer to end-users—offering a transformative solution for optimizing latency in Japan game server deployments. This article explores how edge computing addresses core latency issues, its technical mechanisms, and practical implementation strategies for game developers and hosting teams.

Why Latency Matters: The Stakes for Japan’s Gaming Ecosystem

Latency isn’t just an inconvenience in gaming—it directly impacts player retention, competitive fairness, and revenue. In Japan, where mobile and console gaming dominate, even milliseconds of delay can derail multiplayer battles, puzzle mechanics, or real-time strategy gameplay. Consider these critical factors:

- Japan’s gaming market, valued at over $20 billion annually, relies heavily on multiplayer and live-service titles that demand sub-50ms response times.

- Player surveys show that 78% of Japanese gamers abandon a title after repeated latency issues, highlighting the direct link between performance and engagement.

- Esports, a rapidly growing segment in Japan, requires near-instantaneous server responses to ensure competitive integrity, with professional players detecting delays as small as 10ms.

Traditional hosting models, which centralize servers in major data centers (often Tokyo), create unavoidable latency for users in regional areas like Hokkaido, Kyushu, or Okinawa. Data packets travel hundreds of kilometers, passing through multiple network hops, resulting in noticeable delays. Edge computing reimagines this architecture by placing processing power closer to where players actually are.

Edge Computing Demystified: A Technical Primer

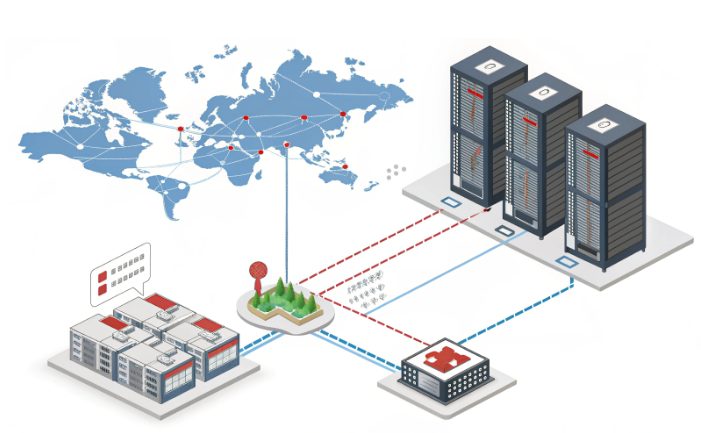

At its core, edge computing shifts data processing from distant cloud data centers to decentralized nodes positioned at the “edge” of the network—near user devices. This contrasts sharply with traditional cloud architectures, where data must traverse long distances to centralized servers for processing. Key technical distinctions include:

- Proximity Advantage: Edge nodes are deployed in metropolitan areas, telecom exchanges, or even 5G base stations, reducing the physical distance data travels.

- Reduced Hop Count: By processing requests locally, edge computing minimizes the number of network hops, lowering packet loss risks and round-trip time (RTT).

- Bandwidth Efficiency: Edge nodes cache frequently accessed data (e.g., game assets, player profiles), reducing backhaul traffic to core servers.

- Decentralized Processing: Time-sensitive tasks like input validation or physics calculations run on edge nodes, while non-critical data (e.g., analytics) syncs with central servers asynchronously.

For Japan’s gaming infrastructure, this means server logic can execute closer to players in Osaka, Fukuoka, or Sapporo, rather than forcing all data through Tokyo-based data centers. The result? Significantly reduced latency and more consistent performance across the country.
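
To put the proximity argument in numbers, the sketch below estimates the lowest round-trip time that distance alone allows between Tokyo and other cities. It assumes approximate city-centre coordinates, a straight great-circle path, and a signal speed in fiber of about 200 km per millisecond; real routes are longer and add routing, queuing, and processing delay, so treat the result as a floor rather than a prediction.

```python
import math

# Approximate city-centre coordinates (latitude, longitude) in degrees.
# These values are illustrative assumptions, not surveyed data.
CITIES = {
    "Tokyo": (35.68, 139.77),
    "Osaka": (34.69, 135.50),
    "Fukuoka": (33.59, 130.40),
    "Sapporo": (43.06, 141.35),
    "Naha": (26.21, 127.68),
}

EARTH_RADIUS_KM = 6371.0
# Light in optical fiber travels at roughly two thirds of c,
# which is about 200 km per millisecond.
FIBER_KM_PER_MS = 200.0


def great_circle_km(a, b):
    """Haversine distance between two (lat, lon) points in kilometres."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def min_rtt_ms(a, b):
    """Lower bound on round-trip time from propagation delay alone."""
    return 2 * great_circle_km(a, b) / FIBER_KM_PER_MS


if __name__ == "__main__":
    for name, coords in CITIES.items():
        if name != "Tokyo":
            rtt = min_rtt_ms(CITIES["Tokyo"], coords)
            print(f"Tokyo <-> {name}: at least {rtt:.1f} ms RTT")
```

Under these assumptions the floor is roughly 8 ms for Tokyo to Sapporo and roughly 15 to 16 ms for Tokyo to Naha. Latency observed above that floor comes from indirect routes, network hops, and server-side processing, which is the portion that nearby edge nodes aim to reduce.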

Japan’s Latency Challenges: Geography, Networks, and Architecture

Japan’s geography and network layout create specific latency hurdles that edge computing is well suited to address. Technical teams must account for these challenges to optimize game server performance:

- Geographic Fragmentation: Japan’s archipelago spans over 3,000 km, with population centers scattered across islands. Centralized servers in Tokyo create inherent latency for users in Hokkaido or Okinawa, where data must traverse undersea cables and regional networks.

- Network Topology Limitations: While Japan boasts world-class 5G and fiber infrastructure, its network hierarchy still relies on core routers in major cities. Data from regional areas must ascend this hierarchy, adding processing delays at each level.

- Peak Load Variability: Japanese gamers often play during specific windows (e.g., evenings, weekends), causing traffic spikes that overload centralized servers. This leads to throttling, queue times, and increased latency during peak hours.

- Mobile Gaming Dominance: Over 60% of Japan’s gaming revenue comes from mobile titles, which depend on wireless networks prone to signal fluctuations. Edge computing stabilizes performance by reducing reliance on distant servers during network instability.

These challenges are compounded by the expectations of Japan’s tech-savvy gamers, who demand console-quality performance on all devices. Traditional hosting and colocation strategies, while reliable, struggle to deliver the low-latency experiences required in today’s competitive market.

How Edge Computing Optimizes Japan Game Server Latency: Technical Mechanisms

Edge computing reduces latency through a combination of strategic deployment, intelligent data routing, and localized processing. Here’s how it works in practice for Japan game servers:

- Regional Edge Node Deployment: Edge nodes are placed in key Japanese cities (Tokyo, Osaka, Nagoya, Fukuoka, and Sapporo), creating a distributed network that covers high-density gaming populations. Players connect to the nearest node, minimizing physical distance.

- Dynamic Request Routing: Intelligent load balancers direct player traffic to the least congested edge node in real time. This avoids bottlenecks during peak hours, ensuring consistent RTT across regions.

- Local Cache and State Management: Edge nodes cache static game assets (textures, audio) and short-lived dynamic data (player positions, recent actions) to reduce redundant data transfers. For fast-paced games, edge nodes also hold temporary game state and sync it with central servers at configurable intervals.

- 5G and Fiber Synergy: Japan’s advanced 5G networks, with low latency and high bandwidth, complement edge computing by enabling ultra-fast communication between user devices and the nodes. Fiber backhauls between the nodes ensure seamless data synchronization.

- Edge-Cloud Hybrid Architecture: Critical functions like user authentication, payment processing, and global leaderboards remain in centralized clouds, while latency-sensitive tasks (input processing, collision detection) run at the edge. This hybrid model balances performance and data consistency.

For example, in a multiplayer battle royale game, player inputs are processed at the nearest node, updating positions locally within 10-20ms. The edge node then syncs this data with other regional nodes and the central server, ensuring all players see consistent game states without sacrificing responsiveness.
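
The nearest-node and dynamic-routing ideas above can be sketched as a small selection function. This is a minimal illustration, not a production load balancer: the `EdgeNode` type, the node names, the `max_load` threshold, and the `load_penalty_ms` weighting are all hypothetical, and a real system would feed in live RTT probes and capacity metrics.

```python
from dataclasses import dataclass


@dataclass
class EdgeNode:
    name: str
    rtt_ms: float  # most recent RTT measured from the player to this node
    load: float    # fraction of capacity in use, 0.0 to 1.0


def pick_node(nodes, max_load=0.9, load_penalty_ms=20.0):
    """Return the node with the lowest load-adjusted RTT.

    Nodes above max_load are skipped unless every node is saturated.
    load_penalty_ms is a hypothetical tuning knob: the extra milliseconds
    charged to a node running at 100% load.
    """
    if not nodes:
        raise ValueError("no edge nodes available")
    candidates = [n for n in nodes if n.load <= max_load] or list(nodes)
    return min(candidates, key=lambda n: n.rtt_ms + load_penalty_ms * n.load)


# Hypothetical snapshot for one player in the Kansai region.
nodes = [
    EdgeNode("osaka", rtt_ms=8.0, load=0.95),   # closest, but nearly saturated
    EdgeNode("nagoya", rtt_ms=11.0, load=0.30),
    EdgeNode("tokyo", rtt_ms=14.0, load=0.20),
]
print(pick_node(nodes).name)  # prints "nagoya"
```

Scoring on RTT plus a load penalty, rather than on distance alone, lets the router send players to a slightly farther node when the nearest one is congested during peak hours.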

Case Studies: Edge Computing in Japanese Gaming Deployments

Real-world implementations demonstrate edge computing’s impact on latency reduction in Japan. These anonymized case studies highlight measurable improvements:

- Mobile Multiplayer Optimization: A major Japanese mobile game studio deployed edge nodes across 8 regional cities, targeting players in rural areas. Post-implementation, average latency dropped from 72ms to 21ms, with regional players in Hokkaido seeing a 70% reduction in lag spikes. Player retention increased by 19% in these regions within 3 months.

- Console Esports Infrastructure: An esports platform hosting competitive tournaments in Japan adopted edge computing to ensure fair gameplay. By placing edge nodes near tournament venues and major cities, they achieved consistent 12-15ms latency across all participants, eliminating regional advantages. Tournament viewership rose by 24% due to smoother broadcasts and reduced disruptions.

- MMORPG Regional Expansion: A popular MMORPG expanded its Japan server coverage using edge computing, allowing players to access localized content without connecting to central Tokyo servers. Load times for regional players decreased by 40%, and in-game transaction rates increased by 15% due to improved responsiveness.

These cases share a common thread: edge computing doesn’t just reduce latency—it enhances overall game economics by improving player satisfaction and engagement.

Implementing Edge Computing for Japan Game Servers: A Technical Roadmap

Deploying edge computing for game servers in Japan requires careful planning, from node selection to architecture design. Follow this technical roadmap:

- User Geolocation Analysis: Map player density across Japan using analytics tools to identify high-traffic regions. Focus initial edge node deployment on Tokyo (Kanto), Osaka (Kansai), and Fukuoka (Kyushu) for maximum coverage.

- Edge Node SLA Negotiation: Partner with network providers offering edge infrastructure in target regions. Prioritize nodes with 99.99% uptime guarantees, low jitter (≤5ms), and direct peering with major Japanese ISPs (NTT, KDDI, SoftBank).

- Game Logic Partitioning: Separate latency-critical code (input handling, physics) from non-critical functions (analytics, logging). Deploy the former to edge nodes and keep the latter in centralized clouds or colocation facilities.

- Data Synchronization Protocols: Implement lightweight sync protocols (e.g., custom UDP-based systems) between edge nodes and central servers. Use conflict resolution algorithms to handle out-of-order updates during peak traffic.

- Load Testing with Regional Traffic: Simulate player traffic from different Japanese regions using tools like Locust or JMeter. Test edge node failover mechanisms and ensure latency remains stable under 10x normal load.

- Monitoring and Tuning: Deploy real-time monitoring (RTT, packet loss, node CPU/memory) across the nodes. Use machine learning models to predict traffic spikes and auto-scale resources in high-demand regions.
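
For the synchronization step, one common way to cope with out-of-order delivery on a UDP-style transport is to tag every update with a per-player sequence number and discard anything older than what has already been applied. The sketch below assumes that approach; `PositionUpdate` and `CentralState` are hypothetical names, and sequence-number wraparound is deliberately ignored.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PositionUpdate:
    player_id: str
    seq: int   # per-player counter incremented by the sending edge node
    x: float
    y: float


class CentralState:
    """Keeps the newest known position per player, ignoring stale datagrams."""

    def __init__(self):
        self._latest = {}

    def apply(self, update):
        """Apply an update; return True if accepted, False if stale or duplicate."""
        current = self._latest.get(update.player_id)
        if current is not None and update.seq <= current.seq:
            return False
        self._latest[update.player_id] = update
        return True

    def position(self, player_id):
        latest = self._latest[player_id]
        return (latest.x, latest.y)


state = CentralState()
# Sequence 2 arrives after sequence 3 and is dropped.
for seq, x in [(1, 0.0), (3, 2.0), (2, 1.0)]:
    accepted = state.apply(PositionUpdate("p1", seq, x, 0.0))
    print(f"seq={seq} accepted={accepted}")
```

Dropping stale updates suits state where only the latest value matters, such as positions. Events that must all be processed, such as purchases, would need a reliable, ordered channel instead.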

For teams new to edge deployments, start with a phased rollout: launch edge nodes in one region, measure performance gains, and iterate before expanding nationwide. This minimizes risk while providing actionable data for optimization.
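
To measure gains during a phased rollout, it helps to compare more than the average. The sketch below summarizes a list of RTT samples as median, 95th percentile (nearest-rank method), and jitter, defined here as the mean absolute difference between consecutive samples. The sample values are made up for illustration and are not measurements.

```python
import statistics


def summarize_rtt(samples_ms):
    """Return median, 95th percentile, and jitter for RTT samples in arrival order."""
    n = len(samples_ms)
    if n < 2:
        raise ValueError("need at least two samples")
    ordered = sorted(samples_ms)
    # Nearest-rank percentile: rank = ceil(0.95 * n), computed with integers.
    rank = (95 * n + 99) // 100
    p95 = ordered[rank - 1]
    # Jitter: mean absolute difference between consecutive samples.
    deltas = [abs(b - a) for a, b in zip(samples_ms, samples_ms[1:])]
    return {
        "p50": statistics.median(ordered),
        "p95": p95,
        "jitter": statistics.fmean(deltas),
    }


# Hypothetical samples for one region before and after enabling an edge node.
before = [70, 74, 69, 120, 72, 71, 75, 68, 110, 73]
after = [20, 22, 21, 24, 20, 23, 21, 22, 35, 21]

print("before:", summarize_rtt(before))
print("after: ", summarize_rtt(after))
```

Tracking the 95th percentile and jitter alongside the median shows whether lag spikes, and not only typical latency, improved in the pilot region.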

FAQ: Edge Computing for Japan Game Servers

Technical teams often have specific questions when considering edge computing for Japanese game infrastructure. Here are answers to common queries:

- Is edge computing more expensive than traditional hosting? Initial deployment costs may be higher due to distributed nodes, but long-term savings come from reduced bandwidth usage and improved player retention. Many providers offer pay-as-you-go models to align costs with traffic.

- How does edge computing handle data consistency across nodes? Use eventual consistency models for non-critical data and strong consistency protocols (e.g., Raft) for critical state updates. Edge nodes sync with central servers at configurable intervals to balance performance and accuracy.

- Can edge computing work with existing game server architectures? Yes—most modern game engines support modular deployment. Teams can refactor latency-sensitive components without rebuilding entire systems, using APIs to connect edge nodes with existing backends.

- What’s the difference between edge computing and CDNs for gaming? CDNs optimize static content delivery, while edge computing processes dynamic data and executes game logic. They complement each other: use CDNs for assets and edge computing for real-time interactions.

- How do Japan’s network regulations impact edge deployments? Japan regulates how personal data is handled, including transfers outside the country, but edge nodes typically process data temporarily without long-term storage, which simplifies compliance. Partner with local providers to ensure adherence to laws like the Act on the Protection of Personal Information (APPI).
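
As an illustration of the eventual consistency option mentioned in the FAQ, the sketch below merges two replicas with a last-writer-wins rule. It is one simple strategy among several and assumes that each entry carries a timestamp and the ID of the node that wrote it, with reasonably synchronized clocks; the keys, node names, and values are hypothetical.

```python
def merge_lww(local, remote):
    """Merge two replicas that map key -> (timestamp, node_id, value).

    For each key, the entry with the larger (timestamp, node_id) pair wins,
    so replicas converge to the same result regardless of merge order.
    """
    merged = dict(local)
    for key, entry in remote.items():
        if key not in merged or entry[:2] > merged[key][:2]:
            merged[key] = entry
    return merged


# Hypothetical non-critical player settings held on two edge nodes.
osaka = {
    "p1:emote": (1005, "osaka", "wave"),
    "p2:emote": (1003, "osaka", "bow"),
}
tokyo = {
    "p1:emote": (1007, "tokyo", "cheer"),  # newer write wins for p1
}

print(merge_lww(osaka, tokyo))
```

Last-writer-wins silently discards the older of two concurrent writes, so it fits cosmetic or preference data. State where every write matters is better served by the strong consistency protocols mentioned above.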

Conclusion: Edge Computing as a Catalyst for Japan’s Gaming Future

Latency remains a critical barrier to delivering exceptional gaming experiences in Japan, but edge computing offers a proven solution. By placing processing power closer to players, reducing network hops, and optimizing data flow, it transforms how game servers perform across Japan’s diverse geography. Whether for mobile multiplayer, console esports, or large-scale MMORPGs, the technical benefits—lower latency, higher reliability, and improved scalability—directly translate to better player engagement and business outcomes.

As Japan’s gaming industry continues to innovate, edge computing will play an increasingly vital role in meeting player expectations. By adopting a strategic, data-driven approach to edge deployment, developers and hosting providers can unlock new levels of performance and create the seamless, immersive experiences that define Japan’s gaming legacy. Edge computing, game hosting, and server colocation strategies will continue to evolve, but their core mission—reducing latency for players—remains unchanged.