Building a Cross-Domain GPU Cluster Between China and Japan

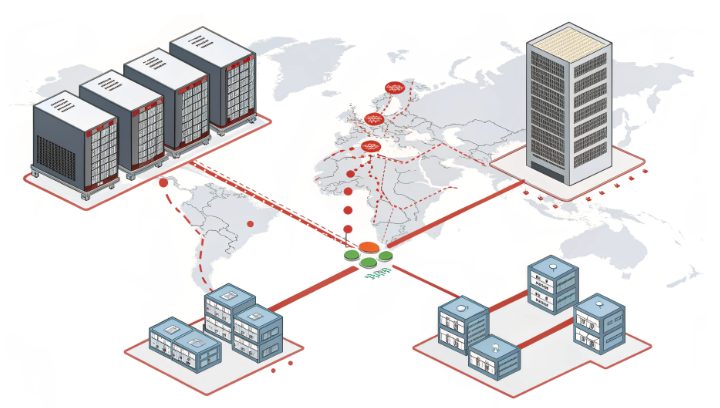

Computational demand is growing quickly, especially for AI training, scientific simulation, and data-intensive workloads, and a cross-domain GPU cluster spanning China and Japan is one way to meet it. The architecture takes advantage of the two countries’ geographic proximity, which keeps cross-region latency moderate, and of distributed computing to get past single-region limits. This guide covers the technical details of designing, deploying, and optimizing such a cluster, for professionals who need cross-border compute capacity.

The Rationale for Cross-Domain GPU Clustering

Regional computing ecosystems often have complementary strengths. Japanese data centers are known for network stability, low-latency links to East Asia, and compliance with strict data protection regulations. Chinese infrastructure, by contrast, offers scale, diverse hardware options, and robust local network integration. Combining these into a unified GPU cluster enables:

- Resource pooling for compute-heavy tasks like large-scale neural network training

- Geographic redundancy to ensure high availability during regional outages

- Compliance with regional data residency rules through strategic data placement

The core challenge lies in building a seamless infrastructure that balances performance, security, and regulatory adherence. Let’s break down the essential components.

Architectural Foundations: Designing the Cluster Blueprint

Effective cluster design starts with clear requirements analysis across three critical dimensions:

1. Defining Computational Requirements

Begin by modeling your workload’s unique characteristics:

- GPU Selection: Determine if your tasks need high-throughput GPUs for parallel processing or low-latency units for real-time inference. Key factors include memory bandwidth, compute power, and compatibility with existing software stacks.

- Node Scale Planning: Estimate node counts by analyzing parallelization potential. Frameworks like Horovod or PyTorch’s Distributed Data Parallel (DDP) scale across hundreds of GPUs, but network topology becomes critical as node numbers grow.

- Latency & Bandwidth Needs: Latency-sensitive applications (e.g., financial trading) need round-trip times well below 100 ms, which calls for dedicated low-latency links and, where possible, keeping the latency-critical path inside a single region. Bandwidth-heavy tasks (e.g., large dataset transfers) need multi-Gbps cross-region connections.
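
As a rough way to turn these needs into numbers, the sketch below estimates a propagation-only floor on round-trip time and the time to move a dataset over a link. The fiber speed (about two-thirds of the speed of light), the 2,000 km route length, and the 80% link efficiency are illustrative assumptions, not measurements of any real China-Japan path.

```python
# Assumption: light travels through fiber at roughly 200,000 km/s.
SPEED_IN_FIBER_KM_PER_S = 200_000


def min_rtt_ms(route_km: float) -> float:
    """Lower bound on round-trip time from propagation delay alone."""
    return 2 * route_km / SPEED_IN_FIBER_KM_PER_S * 1000


def transfer_seconds(size_gb: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Seconds to move size_gb gigabytes over a link at a usable fraction of its rate."""
    bits = size_gb * 8e9
    return bits / (link_gbps * 1e9 * efficiency)


if __name__ == "__main__":
    # Hypothetical 2,000 km cable route and 10 Gbps link.
    print(f"RTT floor: {min_rtt_ms(2000):.1f} ms")
    print(f"1 TB at 80% efficiency: {transfer_seconds(1000, 10, 0.8) / 60:.1f} min")
```

Real round-trip times sit above this floor because of routing detours, switching, and queuing, so the result is only useful for ruling out budgets that physics cannot meet.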

2. Network Architecture: Bridging Regions Effectively

Cross-domain network design must balance cost, performance, and flexibility:

- Connection Solution Comparison:

  - Dedicated Fiber Links: Offer the lowest latency (typically tens of milliseconds round trip between Tokyo and Shanghai; measure your actual path before committing to a latency budget) and high reliability, but carry significant deployment costs.

  - SD-WAN Solutions: Provide dynamic routing and cost efficiency, ideal for scalable environments where absolute minimum latency is not critical.

  - VPN Tunnels: Provide basic security for non-sensitive traffic, but their encryption and encapsulation overhead degrades GPU-to-GPU communication.

- Load Balancing Strategies: Implement region-aware routing to distribute workloads based on real-time resource use. A dual-active architecture (nodes in both regions) ensures failover resilience while optimizing data locality.

- QoS Guarantees: Prioritize cluster management traffic (e.g., scheduler communications) over best-effort flows using DiffServ or IntServ models to avoid bottlenecks.
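
Region-aware routing can be sketched as a scoring function over the current state of each region. This is a minimal illustration: the `RegionState` fields, the free-capacity score, and the `locality_bonus` weight are all hypothetical choices, not part of any particular load balancer.

```python
from dataclasses import dataclass


@dataclass
class RegionState:
    name: str
    free_gpus: int
    total_gpus: int


def pick_region(regions, gpus_needed, data_region, locality_bonus=0.2):
    """Return the name of the best region that can fit the job, or None."""
    best_name, best_score = None, float("-inf")
    for r in regions:
        if r.free_gpus < gpus_needed:
            continue  # this region cannot host the job at all
        # Prefer regions with more spare capacity ...
        score = r.free_gpus / r.total_gpus
        # ... and nudge the choice toward the region that holds the data.
        if r.name == data_region:
            score += locality_bonus
        if score > best_score:
            best_name, best_score = r.name, score
    return best_name
```

With both regions able to fit a job, the bonus keeps work next to its data unless the other region has clearly more headroom; when only one region has room, the job goes there regardless of locality.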

3. Software Stack: Orchestrating Distributed Resources

Choose tools optimized for cross-domain environments:

- Cluster Management Systems:

  - Slurm: Ideal for high-performance computing (HPC) workloads, with mature multi-site resource allocation support.

  - Kubernetes: Suited for containerized applications, offering native support for distributed microservices.

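- Distributed Computing Frameworks: Optimize for inter-region communication. Use data parallelism (distributing data shards) when the model fits on each node, or model parallelism (splitting the model across devices) when it does not, and keep the most communication-heavy partitions inside one region wherever the workload allows.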

- Storage Solutions: Deploy shared file systems (e.g., Ceph as a distributed store, or NFS for simpler network-attached setups) with regional caching to minimize cross-border transfers. Local SSDs in high-I/O nodes buffer frequently accessed datasets.
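
One common way to optimize a data-parallel job for inter-region links is hierarchical reduction: sum gradients inside each region first, then exchange only one aggregated vector per region across the border. The article does not name this technique, so treat the sketch below as one possible approach; it simulates the arithmetic in plain Python rather than using a real communication library.

```python
def hierarchical_allreduce(grads_by_region):
    """Sum gradients across all workers, crossing the border once per region.

    grads_by_region maps a region name to a list of per-worker gradient vectors.
    Returns (global_sum, values_sent_across_regions).
    """
    # Step 1: reduce inside each region over the fast local network.
    regional_sums = {
        region: [sum(vals) for vals in zip(*worker_grads)]
        for region, worker_grads in grads_by_region.items()
    }
    # Step 2: combine the regional sums; only these cross the border.
    global_sum = [sum(vals) for vals in zip(*regional_sums.values())]
    # Each region sends its single regional vector to every other region.
    dim = len(global_sum)
    n = len(regional_sums)
    return global_sum, n * (n - 1) * dim
```

The cross-region volume depends on the number of regions and the gradient size, not on how many workers each region contains, which is why the approach suits clusters with many nodes per site and a comparatively slow link between sites.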

Deployment Pipeline: From Setup to Initialization

Execute deployment in structured phases to ensure consistency across regions.

1. Environment Preparation

- Base Image Configuration: Create standardized OS images with the GPU driver and CUDA toolkit pre-installed, plus regional settings (locale, time zones), to speed up node provisioning.

- Hardware Compatibility Testing: Validate GPU-model compatibility with cluster tools and sync firmware versions across nodes to avoid driver mismatches.

- Compliance Initialization: Install security tools (firewalls, intrusion detection systems) and configure encryption (at rest/in transit) to meet Chinese and Japanese regulations.
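
A version-consistency check like the one described for hardware compatibility testing might look like the following sketch. It assumes an inventory has already been collected as a mapping from node name to driver or firmware version string; the node names and version numbers in the usage example are made up.

```python
from collections import Counter


def find_version_drift(node_versions):
    """Return the nodes whose version differs from the most common one."""
    if not node_versions:
        return {}
    # Treat the most widely deployed version as the baseline.
    baseline, _ = Counter(node_versions.values()).most_common(1)[0]
    return {node: v for node, v in node_versions.items() if v != baseline}


if __name__ == "__main__":
    inventory = {"cn-01": "550.54", "cn-02": "550.54", "jp-01": "535.129"}
    for node, version in find_version_drift(inventory).items():
        print(f"{node} runs {version}, which differs from the fleet baseline")
```

Using the majority version as the baseline is a design shortcut; a stricter setup would compare against an explicitly pinned version instead.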

2. Cluster Bootstrapping

Establish inter-node communication and resource discovery:

- Service Registration & Discovery: Deploy a distributed registry (e.g., Consul, etcd) to let nodes locate each other across regions, even in dynamic networks.

- Shared Storage Setup: Mount distributed file systems across all nodes for consistent dataset access. Use read-only caches in remote regions to reduce latency.

- Network Initialization Scripts: Run automated scripts to configure interfaces, set up RDMA (if supported), and apply QoS policies during node startup.
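
To illustrate what service registration and discovery provides, here is a toy in-memory registry with time-to-live expiry. It is a stand-in for the idea only and does not use the Consul or etcd APIs; the `Registry` class, its methods, and the addresses are hypothetical.

```python
class Registry:
    """Toy registry: entries expire unless re-registered within ttl seconds."""

    def __init__(self, ttl_seconds=30.0):
        self.ttl = ttl_seconds
        self._entries = {}  # node_id -> (region, address, last_seen)

    def register(self, node_id, region, address, now):
        """Record or refresh a node; callers pass the current time explicitly."""
        self._entries[node_id] = (region, address, now)

    def discover(self, now, prefer_region=None):
        """Return live node addresses, listing the preferred region first."""
        live = [
            (region, addr)
            for region, addr, seen in self._entries.values()
            if now - seen <= self.ttl
        ]
        # False sorts before True, so matching regions come first; the sort is stable.
        live.sort(key=lambda item: item[0] != prefer_region)
        return [addr for _, addr in live]
```

Passing `now` in explicitly keeps the sketch deterministic and easy to test; a production registry would also need replication and consensus, which is what tools such as Consul and etcd supply.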

3. Software Stack Deployment

Use infrastructure-as-code tools for reproducible deployments:

- Automation Tool Selection: Choose Ansible (agentless configuration) or SaltStack (high-speed parallel execution) based on cluster size and complexity.

- Containerization Best Practices: Package apps/dependencies into lightweight containers (Docker, Singularity) with layered images to minimize cross-region download times. Use private registries for secure artifact distribution.

- Distributed Service Orchestration: Define manifests that specify resource allocations (CPU, GPU, memory) per region, letting schedulers prioritize local resources.
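
If Kubernetes is the orchestrator, a per-region manifest can be generated rather than written by hand. The sketch below builds a Pod specification as a Python dictionary, requesting GPUs through the `nvidia.com/gpu` resource exposed by the NVIDIA device plugin and expressing a soft preference for one region through the well-known `topology.kubernetes.io/region` node label. The pod name, image, and region value are placeholders, and it assumes nodes actually carry that label.

```python
import json


def gpu_pod_manifest(name, image, gpus, preferred_region):
    """Build a Pod manifest that prefers, but does not require, one region."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "affinity": {"nodeAffinity": {
                # Soft preference: the scheduler may still place the pod elsewhere.
                "preferredDuringSchedulingIgnoredDuringExecution": [{
                    "weight": 100,
                    "preference": {"matchExpressions": [{
                        "key": "topology.kubernetes.io/region",
                        "operator": "In",
                        "values": [preferred_region],
                    }]},
                }],
            }},
            "containers": [{
                "name": "worker",
                "image": image,
                "resources": {"limits": {"nvidia.com/gpu": gpus}},
            }],
        },
    }


if __name__ == "__main__":
    # Hypothetical image and region label value.
    manifest = gpu_pod_manifest("train-0", "registry.example.com/train:1.0", 4, "jp-east")
    print(json.dumps(manifest, indent=2))
```

A preferred affinity lets the job spill over to the other region when local capacity runs out; switching to a required affinity would pin it, which may be the right choice for data that must not leave a jurisdiction.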

Performance Optimization: Fine-Tuning for Cross-Domain Synergy

Optimize across four key dimensions to maximize cluster efficiency.

1. Network Latency Mitigation

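- RDMA Implementation: Where the fabric supports it (InfiniBand or RoCE), use Remote Direct Memory Access within each region to bypass the kernel TCP/IP stack for inter-GPU communication. Gains depend on workload and fabric, so treat figures such as a 40% reduction in transfer overhead as a best case to confirm by benchmark; cross-region traffic usually still travels over TCP.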

- TCP Parameter Tuning: Choose a congestion control algorithm (e.g., BBR or CUBIC) and size socket buffers based on measured round-trip times and available bandwidth.

- Data Localization: Preprocess and stage datasets in regional storage to minimize cross-border transfers. Use delta updates for incremental synchronization.
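
A useful starting point for TCP tuning on a long path is the bandwidth-delay product (BDP): the number of bytes that must be in flight to keep the link full. The sketch below computes it; the 10 Gbps and 50 ms inputs are example values, not measurements.

```python
def bdp_bytes(bandwidth_gbps: float, rtt_ms: float) -> int:
    """Bandwidth-delay product: bytes in flight needed to fill the link."""
    bits_in_flight = bandwidth_gbps * 1e9 * rtt_ms / 1000
    return round(bits_in_flight / 8)


if __name__ == "__main__":
    # Example: a 10 Gbps link with a measured 50 ms round-trip time.
    size = bdp_bytes(10, 50)
    print(f"BDP: {size} bytes ({size / 1e6:.1f} MB)")
```

On Linux, this figure is what the maximum values in `net.ipv4.tcp_rmem` and `net.ipv4.tcp_wmem` need to accommodate for a single flow to reach line rate; buffers much smaller than the BDP cap throughput regardless of the congestion control algorithm.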

2. GPU Resource Management

Ensure fair, efficient utilization across heterogeneous nodes:

- Dynamic Resource Scheduling: Implement priority-based queuing for time-sensitive jobs, reserving GPU portions in each region for high-priority workloads.

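- Multi-Tenant Isolation: Use NVIDIA Multi-Process Service (MPS) to let multiple processes share a GPU concurrently, or Multi-Instance GPU (MIG) on supported hardware for partitions with dedicated memory and compute. Sharing is not free: co-located jobs can still contend for resources, so benchmark before committing to a tenancy model.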

- Real-Time Monitoring & Auto-Scaling: Integrate Prometheus/Grafana to track GPU utilization, memory use, and queue lengths. Trigger auto-scaling to add/remove nodes as demand changes.
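
Priority-based queuing with a reserved slice of GPUs can be sketched with a heap. The `GpuQueue` class below is hypothetical and models a single region; it keeps a set number of GPUs free for high-priority jobs and lets smaller normal jobs start ahead of larger ones that do not fit (simple backfilling).

```python
import heapq
import itertools


class GpuQueue:
    """Priority queue over one region's GPUs with a high-priority reserve."""

    def __init__(self, total_gpus, reserved_for_high):
        self.free = total_gpus
        self.reserved = reserved_for_high
        self._heap = []
        self._order = itertools.count()  # tie-breaker preserves submission order

    def submit(self, job_id, gpus, high_priority=False):
        priority = 0 if high_priority else 1
        heapq.heappush(self._heap, (priority, next(self._order), job_id, gpus))

    def dispatch(self):
        """Start queued jobs in priority order; return the ids that started."""
        started, waiting = [], []
        while self._heap:
            priority, order, job_id, gpus = heapq.heappop(self._heap)
            # Normal jobs must leave the reserve untouched.
            usable = self.free if priority == 0 else self.free - self.reserved
            if gpus <= usable:
                self.free -= gpus
                started.append(job_id)
            else:
                waiting.append((priority, order, job_id, gpus))
        for item in waiting:
            heapq.heappush(self._heap, item)
        return started
```

Production schedulers such as Slurm implement far richer policies (fair share, preemption, reservations with time windows); this sketch only shows the core idea of keeping headroom for urgent work.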

3. Storage I/O Enhancement

- Hierarchical Storage Architecture: Combine local SSDs (scratch data), regional NAS (hot datasets), and cloud storage (cold archives). Use tiering tools to automate data migrations.

- Caching Strategies: Deploy in-memory caches (e.g., Redis) near compute nodes to serve frequent metadata, reducing file-operation latency.

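- Asynchronous Data Replication: Replicate critical datasets asynchronously between regions to keep copies current without slowing primary I/O. Asynchronous replication is eventually consistent, so if both regions accept writes (bidirectional replication), define a conflict-resolution rule up front.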
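
A tiering policy for the hierarchical storage layout above can start as a simple rule on access recency and size. The tier names, the 7-day and 90-day thresholds, and the 500 GB local SSD limit below are illustrative assumptions to be replaced with values from your own access patterns.

```python
def choose_tier(days_since_access, size_gb,
                hot_days=7, cold_days=90, ssd_limit_gb=500):
    """Pick a storage tier from access recency and dataset size."""
    # Recently used data that fits goes next to the compute nodes.
    if days_since_access <= hot_days and size_gb <= ssd_limit_gb:
        return "local-ssd"
    # Data still in regular use stays on regional shared storage.
    if days_since_access <= cold_days:
        return "regional-nas"
    # Everything else moves to the archive tier.
    return "cloud-archive"
```

A job that runs periodically could call this for each dataset and queue a migration whenever the chosen tier differs from the current one.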

4. Software-Level Optimizations

Adapt algorithms/frameworks to distributed architectures:

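- Communication Efficiency: In distributed training, reduce inter-region traffic by shrinking and batching gradient exchanges, for example with fp16 gradient compression, gradient sparsification, or gradient accumulation over several steps before each synchronization. Gradient clipping, by contrast, stabilizes training but does not reduce payload size.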

- Mixed-Precision Training: Use GPU tensor cores for mixed-precision arithmetic to speed up computation; communicating gradients in half precision also halves the bytes sent across regions compared with fp32.

- Failure Recovery: Implement regional checkpointing, saving intermediate states to local storage before syncing with remote nodes to cut recovery time during network partitions.
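
The payload saving from fp16 compression is easy to see with the standard library alone. The sketch below packs a gradient vector as IEEE 754 half-precision values using the `e` format of Python's `struct` module and compares the size with a single-precision encoding. The helper names are hypothetical, and real training frameworks do this on the GPU rather than in Python.

```python
import struct


def compress_fp16(grads):
    """Pack floats as little-endian half-precision values (2 bytes each)."""
    return struct.pack(f"<{len(grads)}e", *grads)


def decompress_fp16(payload):
    """Unpack a half-precision payload back into Python floats."""
    count = len(payload) // 2
    return list(struct.unpack(f"<{count}e", payload))


def fp32_size(grads):
    """Bytes needed to send the same values in single precision."""
    return len(struct.pack(f"<{len(grads)}f", *grads))


if __name__ == "__main__":
    grads = [0.5, -1.25, 3.0]  # values chosen to be exact in fp16
    payload = compress_fp16(grads)
    print(len(payload), "bytes in fp16 vs", fp32_size(grads), "bytes in fp32")
```

Half precision has a narrow range and limited mantissa, so most values lose some accuracy in the round trip; mixed-precision training commonly pairs it with loss scaling to keep small gradients from underflowing.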

Monitoring and Resilience: Ensuring Continuous Operation

Build a robust system to detect issues and maintain uptime.

1. Distributed Monitoring Architecture

- Unified Monitoring Platform: Deploy a multi-region Prometheus cluster with Grafana dashboards, aggregating metrics from Chinese and Japanese nodes. Track key indicators:

  - GPU utilization (per node/region)

  - Cross-domain network throughput/latency

  - Job queue lengths/processing times

- Log Management: Centralize logs with the ELK Stack (Elasticsearch, Logstash, Kibana), using regional shards for low-latency troubleshooting.
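
The per-region aggregation behind such a dashboard can be illustrated in a few lines. This sketch assumes utilization samples have already been collected as `(region, node, percent)` tuples; it does not query Prometheus, and the 30% threshold is an arbitrary example.

```python
from collections import defaultdict
from statistics import mean


def utilization_by_region(samples):
    """Average GPU utilization per region from (region, node, percent) samples."""
    grouped = defaultdict(list)
    for region, _node, util in samples:
        grouped[region].append(util)
    return {region: mean(values) for region, values in grouped.items()}


def underused_regions(samples, threshold=30.0):
    """Regions whose average utilization falls below the threshold."""
    averages = utilization_by_region(samples)
    return sorted(r for r, u in averages.items() if u < threshold)
```

A persistently underused region is a signal either to route more work there or to scale that region down.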

2. Disaster Recovery Strategies

- Failover Mechanisms: Designate standby nodes in each region to assume workloads within minutes of failure. Use heartbeat monitoring to detect outages and trigger auto-failover.

- Data Backup Policies: Implement asynchronous backups to remote storage, with point-in-time snapshots for critical datasets. Test recovery procedures regularly to meet RTO/RPO requirements.

- Network Redundancy: Deploy multiple connectivity options (e.g., primary fiber + SD-WAN backup) to eliminate single points of failure in cross-domain communication.
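
Heartbeat-driven failover reduces to two steps: decide which nodes have gone silent, then assign each one a standby in the same region. The function below is a minimal sketch under that reading; the data shapes, node names, and 15-second timeout are assumptions.

```python
def plan_failover(last_heartbeat, standbys, now, timeout=15.0):
    """Map each failed node to a standby in the same region, or None.

    last_heartbeat: node_id -> (region, last_seen_seconds)
    standbys: region -> list of idle standby node ids
    """
    # Copy the pools so the caller's standby lists are not modified.
    pool = {region: list(nodes) for region, nodes in standbys.items()}
    plan = {}
    for node_id, (region, seen) in sorted(last_heartbeat.items()):
        if now - seen <= timeout:
            continue  # heartbeat is recent enough; node is healthy
        candidates = pool.get(region, [])
        plan[node_id] = candidates.pop(0) if candidates else None
    return plan
```

A `None` entry means the region has run out of standbys, which is the point at which a real system would escalate: fail over to the other region if data residency rules permit, or page an operator.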

Navigating Regulatory and Compliance Landscapes

Adherence to data protection laws is non-negotiable for cross-border setups:

- Chinese Regulatory Compliance: Follow the Data Security Law and Personal Information Protection Law by defining data classification policies, obtaining cross-border transfer consent, and conducting regular audits.

- Japanese Regulatory Alignment: Meet the Act on the Protection of Personal Information (APPI) via strict access controls, data anonymization (for sensitive sets), and incident notifications to authorities.

- Cross-Border Data Flows: Complete security assessments for data transfers, maintaining documentation of flows and storage locations to satisfy regulatory audits.

Future-Proofing Your Cluster: Emerging Trends

Anticipate technological shifts shaping cross-domain computing:

- Heterogeneous Computing Integration: Prepare for hybrid CPU-GPU-TPU architectures, where specialized accelerators handle different workload phases—requiring dynamic cross-region resource orchestration.

- Edge Collaborative Computing: Integrate edge nodes in both countries for low-latency preprocessing, feeding refined data to the central cluster for complex computations.

- Green Computing Initiatives: Where Japanese data centers offer renewable or low-carbon power (hydrogen is one option being explored), schedule flexible workloads there to reduce the cluster’s carbon footprint, in line with global sustainability goals.

Building a cross-domain GPU cluster between China and Japan is complex but rewarding, demanding expertise in distributed systems, network engineering, and regulatory compliance. By focusing on architectural flexibility, performance optimization, and legal adherence, organizations can create a robust infrastructure that meets current demands while adapting to future challenges. As AI and data-intensive technologies evolve, such cross-border setups will become essential for unlocking global computational potential.

Whether you are scaling AI training, expanding simulation capacity, or building resilient data pipelines, the principles here provide a foundation for a high-performance, compliant cluster. Start with rigorous requirements analysis, prioritize low-latency connectivity, and adopt tools that support cross-region orchestration.